The team at NovaSky, a “collaborative initiative led by students and advisors at UC Berkeley’s Sky Computing Lab,” has done what seemed impossible just months ago: They’ve created a high-performance AI reasoning model for under $450 in training costs.

Unlike traditional LLMs that simply predict the next word in a sentence, so-called “reasoning models” are designed to understand a problem, analyze different approaches to solve it, and execute the best solution. That makes these models harder to train and configure, because they must “reason” through the whole problem-solving process instead of just predicting the best response based on their training dataset.

That’s why a ChatGPT Pro subscription, which runs the latest o3 reasoning model, costs $200 a month: OpenAI argues that these models are expensive to train and run.

The new NovaSky model, dubbed Sky-T1, is comparable to OpenAI’s first reasoning model, known as o1 (aka Strawberry), which was released in September 2024 and costs users $20 a month. By comparison, Sky-T1 is a 32 billion parameter model capable of running locally on home computers, provided you have a beefy 24GB GPU, like an RTX 4090 or an older 3090 Ti. And it’s free.

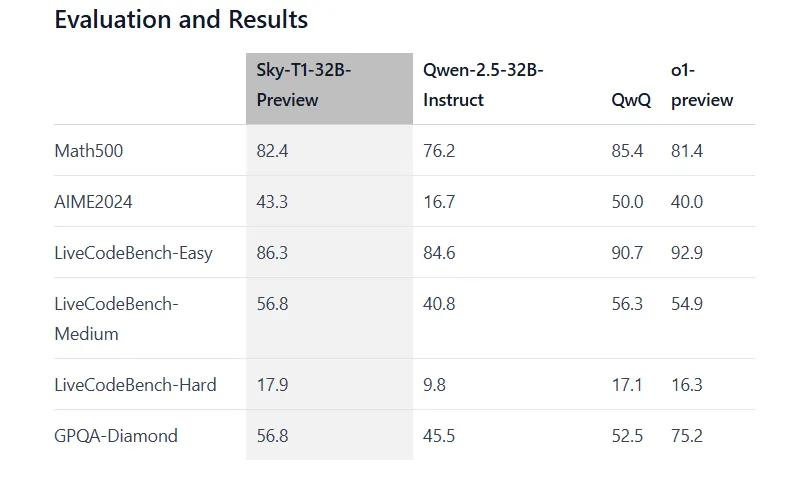

We’re not talking about some watered-down version. Sky-T1-32B-Preview achieves 43.3% accuracy on AIME2024 math problems, edging out OpenAI o1’s 40%. On LiveCodeBench-Medium, it scores 56.8% compared to o1-preview’s 54.9%. The model maintains strong performance across other benchmarks too, hitting 82.4% on Math500 problems where o1-preview scores 81.4%.

The timing couldn’t be more interesting. The AI reasoning race has been heating up lately, with OpenAI’s o3 turning heads by outperforming humans on general intelligence benchmarks, sparking debates about whether we’re seeing early AGI, or artificial general intelligence. Meanwhile, China’s DeepSeek V3 made waves last year by outperforming OpenAI’s o1 while using fewer resources and also being open-source.

🚀 Introducing DeepSeek-V3!

Biggest leap forward yet:

⚡ 60 tokens/second (3x faster than V2!)

💪 Enhanced capabilities

🛠 API compatibility intact

🌍 Fully open-source models & papers 🐋 1/n pic.twitter.com/p1dV9gJ2Sd

— DeepSeek (@deepseek_ai) December 26, 2024

But Berkeley’s approach is different. Instead of chasing raw power, the team focused on making a powerful reasoning model accessible to the masses as cheaply as possible, building a model that’s easy to fine-tune and run on local computers without pricey corporate hardware.

“Remarkably, Sky-T1-32B-Preview was trained for less than $450, demonstrating that it’s possible to replicate high-level reasoning capabilities affordably and efficiently. All code is open-source,” NovaSky said in its official blog post.

Currently, OpenAI doesn’t offer access to its reasoning models for free, though it does offer free access to a less sophisticated model.

The prospect of fine-tuning a reasoning model for domain-specific excellence at under $500 is especially compelling to developers, since such specialized models can potentially outperform more powerful general-purpose models in targeted domains. This cost-effective specialization opens new possibilities for focused applications across scientific fields.

The team trained their model for just 19 hours using Nvidia H100 GPUs, following what they call a “recipe” that most devs should be able to replicate. The training data looks like a greatest hits of AI challenges.
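To put the training budget in perspective, here is a rough back-of-the-envelope sketch. The 19 hours and the $450 ceiling come from the article; the GPU count of 8 is an assumption that the article does not state, and the function names are purely illustrative.

```python
# Back-of-the-envelope training cost. Only the hours and the budget come
# from the article; the GPU count is an assumption made for illustration.
TRAINING_HOURS = 19   # reported training time
NUM_GPUS = 8          # ASSUMPTION: not stated in the article
BUDGET_USD = 450      # upper bound quoted by NovaSky


def gpu_hours(hours: float, gpus: int) -> float:
    """Total GPU-hours consumed by a run."""
    return hours * gpus


def implied_hourly_rate(budget_usd: float, hours: float, gpus: int) -> float:
    """Per-GPU hourly price that would exactly exhaust the budget."""
    return budget_usd / gpu_hours(hours, gpus)


total = gpu_hours(TRAINING_HOURS, NUM_GPUS)
rate = implied_hourly_rate(BUDGET_USD, TRAINING_HOURS, NUM_GPUS)
print(f"{total:.0f} GPU-hours, about ${rate:.2f} per GPU-hour")
```

Under that assumption, the budget works out to roughly $3 per GPU-hour, which is in the range cloud providers typically charge for on-demand H100 rentals. A different GPU count would change the implied rate proportionally.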

“Our final data contains 5K coding data from APPs and TACO, and 10k math data from AIME, MATH, and Olympiads subsets of the NuminaMATH dataset. In addition, we maintain 1k science and puzzle data from STILL-2,” NovaSky said.

The dataset was varied enough to help the model think flexibly across different types of problems. NovaSky used QwQ-32B-Preview, another open-source reasoning AI model, to generate the data and fine-tune a Qwen2.5-32B-Instruct open-source LLM. The result was a powerful new model with reasoning capabilities, which would later become Sky-T1.
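The general pattern described here, in which a stronger “teacher” model writes out reasoning traces that become fine-tuning data for a “student” model, can be sketched in a few lines. This is a minimal illustration, not NovaSky’s actual pipeline: `build_sft_records` and `fake_teacher` are hypothetical names, and the stub teacher stands in for a real call to a model such as QwQ-32B-Preview.

```python
from typing import Callable, Dict, List


def build_sft_records(
    problems: List[str],
    teacher: Callable[[str], str],
) -> List[Dict[str, str]]:
    """Pair each problem with a teacher-written trace for supervised fine-tuning."""
    records = []
    for problem in problems:
        trace = teacher(problem).strip()
        if not trace:
            continue  # drop problems where the teacher produced nothing usable
        records.append({"prompt": problem, "response": trace})
    return records


def fake_teacher(problem: str) -> str:
    # HYPOTHETICAL stand-in for a real teacher model call.
    return f"Let me think step by step about: {problem}"


records = build_sft_records(
    ["What is 2 + 2?", "Reverse a linked list."],
    fake_teacher,
)
print(len(records), records[0]["prompt"])
```

The resulting prompt/response pairs are what a supervised fine-tuning run on the student model would consume. A real pipeline would also need to check that the teacher’s answers are correct before keeping them.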

A key finding from the team’s work: bigger is still better when it comes to AI models. Their experiments with smaller 7 billion and 14 billion parameter versions showed only modest gains. The sweet spot turned out to be 32 billion parameters: large enough to avoid repetitive outputs, but not so big that it becomes impractical.

If you want to have your own version of a model that beats OpenAI o1, you can download Sky-T1 on Hugging Face. If your GPU isn’t powerful enough but you still want to try it out, there are quantized versions that go from 8 bits all the way down to 2 bits, so you can trade accuracy for speed and test the next best thing on your potato PC.

Just keep in mind: The developers warn that such levels of quantization are “not recommended for most purposes.”
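A quick estimate shows why quantization matters for a 24GB card. The sketch below counts only the space taken by the weights of a 32 billion parameter model at different bit widths. It ignores activations, the KV cache, and the per-block overhead that real quantization formats add, so actual requirements will be somewhat higher. The function name is illustrative.

```python
PARAMS = 32e9   # 32 billion parameters, per the article
VRAM_GB = 24    # the GPU size mentioned in the article


def weight_gigabytes(num_params: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4, 2):
    gb = weight_gigabytes(PARAMS, bits)
    verdict = "fits" if gb <= VRAM_GB else "does not fit"
    print(f"{bits:>2}-bit: ~{gb:.0f} GB of weights, {verdict} in {VRAM_GB} GB")
```

By this estimate, the weights alone need about 64 GB at 16 bits and 32 GB at 8 bits, so a 24GB GPU only becomes workable at around 4 bits (about 16 GB) or lower.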

Edited by Andrew Hayward
