In recent weeks, researchers from Google and Sakana unveiled two cutting-edge neural network designs that could upend the AI industry.

These technologies aim to challenge the dominance of transformers, the type of neural network that connects inputs and outputs based on context and that has defined AI for the past six years.

The new approaches are Google’s “Titans” and “Transformer Squared,” which was designed by Sakana, a Tokyo AI startup known for using nature as its model for tech solutions. Indeed, both Google and Sakana tackled the transformer problem by studying the human brain. Their transformers basically utilize different stages of memory and activate different expert modules independently, instead of engaging the whole model at once for every problem.

The net result makes AI systems smarter, faster, and more versatile than ever before without necessarily making them bigger or more expensive to run.

For context, transformer architecture, the technology which gave ChatGPT the ‘T’ in its name, is designed for sequence-to-sequence tasks such as language modeling, translation, and image processing. Transformers rely on “attention mechanisms,” or tools to understand how important a concept is depending on context, to model dependencies between input tokens. This lets them process data in parallel rather than sequentially, as so-called recurrent neural networks (the dominant technology in AI before transformers appeared) do. This technology gave models context understanding and marked a before-and-after moment in AI development.
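
To make the idea of attention concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only: the function names and the toy vectors are our own, and a real transformer would add learned projections, multiple heads, and optimized tensor libraries.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Score this query against every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Blend the value vectors using the attention weights.
        blended = [sum(w * v[d] for w, v in zip(weights, values))
                   for d in range(len(values[0]))]
        outputs.append(blended)
    return outputs

# Toy self-attention: three 2-d token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(tokens, tokens, tokens)
```

Each output vector is a weighted blend of every token in the input. No query depends on another query's result, which is why the work can in principle be done in parallel.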

However, despite their remarkable success, transformers face significant challenges in scalability and adaptability. For models to be more flexible and versatile, they also need to be more powerful. And once they are trained, they cannot be improved unless developers come up with a new model or users rely on third-party tools. That’s why today, in AI, “bigger is better” is a general rule.

But this may change soon, thanks to Google and Sakana.

Titans: A new memory architecture for dumb AI

Google Research’s Titans architecture takes a different approach to improving AI adaptability. Instead of modifying how models process information, Titans focuses on changing how they store and access it. The architecture introduces a neural long-term memory module that learns to memorize at test time, similar to how human memory works.
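
As a rough intuition for what “learning to memorize at test time” can mean, the sketch below updates a tiny memory with gradient steps while it is being used, rather than during training. This is a generic stand-in and not the module described in the Titans paper: the `LinearMemory` class, the learning rate, and the example vectors are all hypothetical.

```python
class LinearMemory:
    """Hypothetical toy memory: a matrix that maps key vectors to value vectors."""

    def __init__(self, key_dim, value_dim):
        self.weights = [[0.0] * key_dim for _ in range(value_dim)]

    def recall(self, key):
        """Read from memory: multiply the weight matrix by the key."""
        return [sum(w * k for w, k in zip(row, key)) for row in self.weights]

    def memorize(self, key, value, learning_rate=0.5):
        """One gradient step on 0.5 * ||recall(key) - value||^2, taken at inference time."""
        error = [r - v for r, v in zip(self.recall(key), value)]
        for row, err in zip(self.weights, error):
            for j, k in enumerate(key):
                row[j] -= learning_rate * err * k
        return sum(e * e for e in error)  # squared error before this update

memory = LinearMemory(key_dim=2, value_dim=2)
key, value = [1.0, 0.0], [0.3, -0.7]
# Repeated exposure to the same association shrinks the recall error.
errors = [memory.memorize(key, value) for _ in range(20)]
```

The point of the toy is only that the memory's parameters keep changing after deployment, so information seen during a session can be stored in the weights instead of in the context window.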

Currently, models read your entire prompt and output, predict a token, read everything again, predict the next token, and so on until they come up with the answer. They have an incredible short-term memory, but they suck at long-term memory. Ask them to remember things outside their context window, or very specific information in a bunch of noise, and they will probably fail.

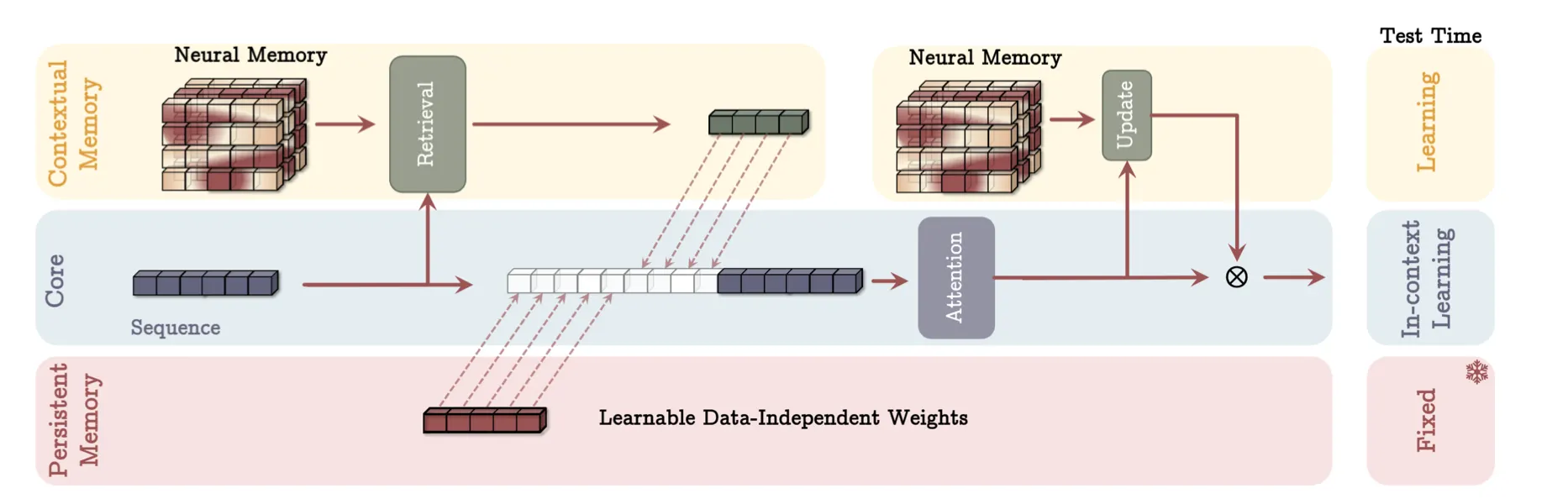

Titans, on the other hand, combines three types of memory systems: short-term memory (similar to traditional transformers), long-term memory (for storing historical context), and persistent memory (for task-specific knowledge). This multi-tiered approach allows the model to handle sequences over 2 million tokens in length, far beyond what current transformers can process efficiently.

According to the research paper, Titans shows significant improvements in various tasks, including language modeling, commonsense reasoning, and genomics. The architecture has proven particularly effective at “needle-in-haystack” tasks, where it needs to locate specific information within very long contexts.

The system mimics how the human brain activates specific regions for different tasks and dynamically reconfigures its networks based on changing demands.

In other words, similar to how different neurons in your brain are specialized for distinct functions and are activated based on the task you're performing, Titans emulates this idea by incorporating interconnected memory systems. These systems (short-term, long-term, and persistent memories) work together to dynamically store, retrieve, and process information based on the task at hand.

Transformer Squared: Self-adapting AI is here

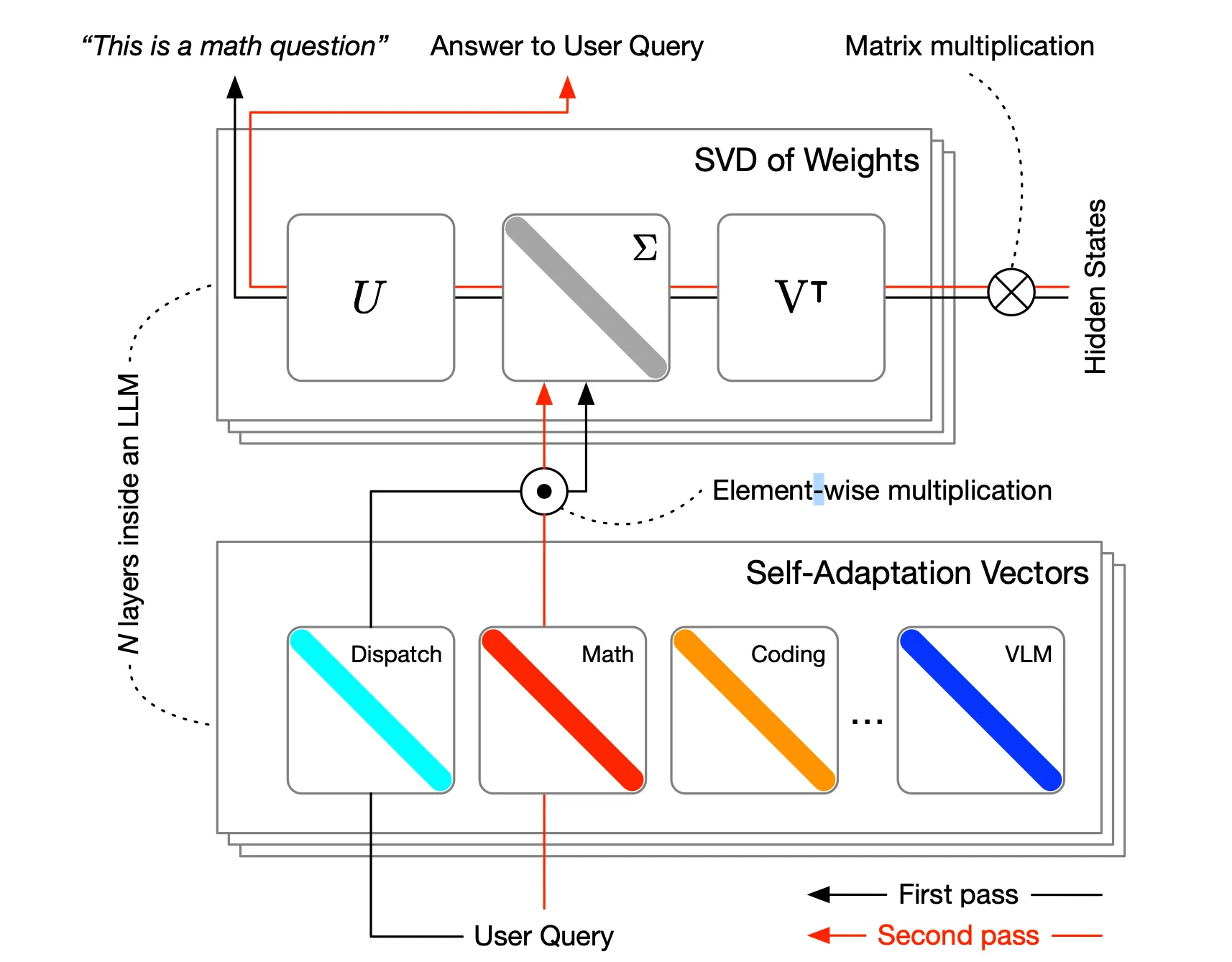

Just two weeks after Google’s paper, a team of researchers from Sakana AI and the Institute of Science Tokyo introduced Transformer Squared, a framework that allows AI models to modify their behavior in real time based on the task at hand. The system works by selectively adjusting only the singular components of their weight matrices during inference, making it more efficient than traditional fine-tuning methods.

Transformer Squared “employs a two-pass mechanism: first, a dispatch system identifies the task properties, and then task-specific ‘expert’ vectors, trained using reinforcement learning, are dynamically mixed to obtain targeted behavior for the incoming prompt,” according to the research paper.
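
The two passes in that quote can be pictured with a small sketch. Everything in it is hypothetical: the keyword-based `dispatch` function stands in for whatever dispatch system the researchers actually use, and the expert vectors are made-up numbers rather than values trained with reinforcement learning.

```python
# Hypothetical expert vectors: one scaling factor per adjustable component.
EXPERT_VECTORS = {
    "math": [1.2, 0.9, 1.0],
    "code": [0.8, 1.1, 1.3],
}
# Hypothetical keyword lists standing in for a real dispatch system.
TASK_KEYWORDS = {
    "math": ("solve", "equation", "integral"),
    "code": ("function", "bug", "compile"),
}

def dispatch(prompt):
    """First pass: estimate how strongly the prompt matches each task."""
    words = prompt.lower().split()
    hits = {task: sum(words.count(k) for k in keywords)
            for task, keywords in TASK_KEYWORDS.items()}
    total = sum(hits.values())
    if total == 0:
        # No evidence either way: weight every expert equally.
        return {task: 1.0 / len(hits) for task in hits}
    return {task: count / total for task, count in hits.items()}

def mix_experts(task_weights):
    """Second pass: blend the expert vectors according to the dispatch weights."""
    size = len(next(iter(EXPERT_VECTORS.values())))
    return [sum(task_weights[task] * EXPERT_VECTORS[task][i] for task in EXPERT_VECTORS)
            for i in range(size)]

weights = dispatch("Fix the bug in this function")
mixed = mix_experts(weights)
```

For this prompt only the coding keywords match, so the blended vector equals the `code` expert. A prompt with no matching keywords falls back to an even mix of both experts.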

It sacrifices inference time (it thinks more) for specialization (knowing which expertise to apply).

What makes Transformer Squared particularly innovative is its ability to adapt without requiring extensive retraining. The system uses what the researchers call Singular Value Fine-tuning (SVF), which focuses on modifying only the essential components needed for a specific task. This approach significantly reduces computational demands while maintaining or improving performance compared to current methods.
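
A minimal sketch of the idea behind adjusting only singular components: a weight matrix is written as W = U · diag(s) · Vᵀ, and adaptation rescales the singular values s while U and V stay frozen. The code below assumes the factors are already available (a real workflow would obtain them from an SVD routine), and the function names, rotation angles, and expert vector are illustrative choices of ours, not taken from the paper.

```python
import math

def rotation(angle):
    """2x2 rotation matrix, used here as a ready-made orthogonal factor."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s], [s, c]]

def compose(u, singular_values, v):
    """Rebuild W = U * diag(singular_values) * V^T from its factors."""
    n = len(singular_values)
    return [[sum(u[i][k] * singular_values[k] * v[j][k] for k in range(n))
             for j in range(len(v))]
            for i in range(len(u))]

def adapt(u, singular_values, v, expert_vector):
    """Rescale each singular value by the expert vector; U and V stay frozen."""
    scaled = [s * z for s, z in zip(singular_values, expert_vector)]
    return compose(u, scaled, v)

U, V = rotation(0.3), rotation(1.1)   # hypothetical frozen factors
SINGULAR_VALUES = [3.0, 1.0]          # hypothetical singular values
base = compose(U, SINGULAR_VALUES, V)
adapted = adapt(U, SINGULAR_VALUES, V, expert_vector=[2.0, 0.5])
```

In this toy case the full matrix has four entries, but the adaptation is described by just two numbers. That gap between the size of the matrix and the size of the vector is where the claimed efficiency comes from.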

In testing, Sakana’s Transformer demonstrated remarkable versatility across different tasks and model architectures. The framework showed particular promise in handling out-of-distribution applications, suggesting it could help AI systems become more flexible and responsive to novel situations.

Here’s our attempt at an analogy. Your brain forms new neural connections when learning a new skill without having to rewire everything. When you learn to play piano, for instance, your brain doesn't need to rewrite all its knowledge; it adapts specific neural circuits for that task while maintaining other capabilities. Sakana’s idea was that developers don’t need to retrain the model’s entire network to adapt to new tasks.

Instead, the model selectively adjusts specific components (through Singular Value Fine-tuning) to become more efficient at particular tasks while maintaining its general capabilities.

Overall, the era of AI companies bragging over the sheer size of their models may soon be a relic of the past. If this new generation of neural networks gains traction, then future models won’t need to rely on massive scale to achieve greater versatility and performance.

Today, transformers dominate the landscape, often supplemented by external tools like Retrieval-Augmented Generation (RAG) or LoRAs to enhance their capabilities. But in the fast-moving AI industry, it only takes one breakthrough implementation to set the stage for a seismic shift, and once that happens, the rest of the field is sure to follow.

Edited by Andrew Hayward
