Howard Lutnick, the Commerce Secretary nominee who testified before the U.S. Senate Tuesday, claimed DeepSeek cheated when its revolutionary new AI model spanked top Western AI firms this week.

“They stole things, they broke in, they’ve taken our IP,” Lutnick said, reflecting broader U.S. government concerns about Chinese companies potentially misappropriating U.S. technology.

The White House joined a growing backlash against DeepSeek on Wednesday, assessing potential national security risks tied to its AI. Critics, including Western companies, accuse the Chinese upstart of rule-breaking, espionage, and market manipulation.

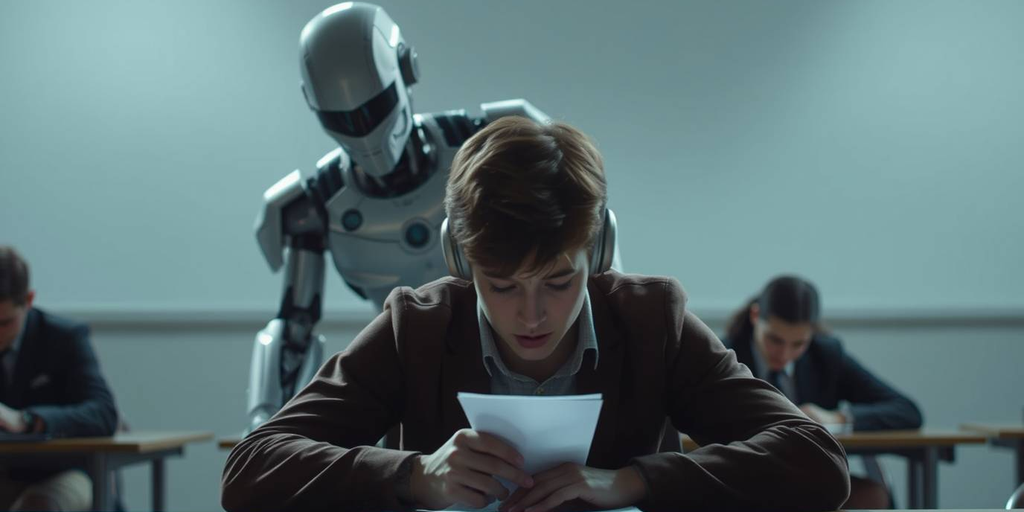

It started late Monday, after a brutal day for tech stocks, when OpenAI floated the idea that DeepSeek had used a technique called “distillation” (using a large model as an example to guide the answers produced by smaller models) to extract knowledge from its models, a violation of OpenAI’s terms of service.
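For readers curious about the mechanics, here is a minimal sketch of the textbook "soft-target" form of distillation, in which a small "student" model is trained to match the probability distribution produced by a larger "teacher." The function names, the temperature of 2.0, and the example scores are all hypothetical, and this is not a claim about what DeepSeek actually did: a model reached only through a chat API exposes generated text rather than its full probability distributions, so distillation in that setting would more likely mean fine-tuning a smaller model on the larger model's answers.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by temperature**2 (the usual soft-target convention)."""
    p = softmax(teacher_logits, temperature)  # teacher's "soft labels"
    q = softmax(student_logits, temperature)  # student's current guess
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

# Hypothetical scores over three candidate answers
teacher = [4.0, 1.0, 0.5]
far_student = [2.0, 1.5, 1.0]
near_student = [3.5, 1.0, 0.6]

print(distillation_loss(teacher, far_student))
print(distillation_loss(teacher, near_student))  # smaller: closer to the teacher
```

A student whose scores sit closer to the teacher's gets a lower loss, and minimizing that loss during training is what pulls the smaller model toward imitating the larger one.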

David Sacks, Donald Trump’s AI adviser, piled on, adding that there was “substantial evidence” of DeepSeek “sucking knowledge” out of OpenAI’s technology.

The ChatGPT maker confirmed that it is investigating the issue.

“We’re aware of and reviewing indications that DeepSeek may have inappropriately distilled our models, and will share information as we know more,” an OpenAI spokesperson told Axios.

The pushback came in response to DeepSeek’s impact on Nvidia, which lost close to $600 billion in market cap on Monday, a record wipeout, after DeepSeek launched a new kind of AI model last week that supposedly matches U.S. capabilities at a mere fraction of the cost.

Hedge fund manager Bill Ackman, the financier who jokingly compared Trump to God, suggested that DeepSeek’s founders might have released the model for free to short Nvidia stock. DeepSeek is backed by a quant hedge fund, after all.

“What are the chances that DeepSeek AI’s hedge fund affiliate made a fortune yesterday with short-dated puts on Nvidia, power companies, etc.? A fortune could have been made,” Ackman tweeted.

Although President Donald Trump initially called DeepSeek’s model a “wake-up call” for the industry and a “positive thing,” the U.S. Navy has already reached a different conclusion.

According to CNBC, the military branch ordered personnel late last week to avoid DeepSeek’s technology “in any capacity,” citing “potential security and ethical concerns associated with the model’s origin and usage.”

In an email to the troops Friday, the Navy said it is “imperative” for any team member to avoid DeepSeek “for any work-related tasks or personal use.”

Meanwhile, across the Atlantic, Italian regulators are also taking a hard look at DeepSeek.

The country’s Data Protection Authority gave DeepSeek 20 days to explain exactly what personal data it collects, where it stores that data, and how it uses it to train its AI system, even though all of those answers are publicly available in DeepSeek AI’s Privacy Policy.

For its part, DeepSeek has so far remained silent and has not responded to requests for comment from Decrypt (or anyone else).

“Karma’s a Bitch.”

You don’t need artificial intelligence to predict the Internet’s swift and merciless response to the whole affair:

“OpenAI is unhappy that DeepSeek trained on their data without consent or compensation,” Toby Walsh, Chief Scientist at the University of New South Wales’s AI Institute, posted on X. “Oh, the irony for all us authors unhappy with OpenAI for training on our data without consent or compensation!”

“DeepSeek may well have broken OpenAI’s Terms of Service and distilled their intellectual property without permission. OpenAI may well have done analogous things to YouTube, the New York Times, and numerous artists and writers,” AI researcher and author Gary Marcus tweeted. “Karma is a bitch.”

And in a lengthy rant on his blog, tech industry critic Edward Zitron said the controversy was symptomatic of deeper problems in American tech.

“This isn’t about China; it’s so much fucking easier if we let it be about China,” Zitron fulminated. “It’s about how the American tech industry is incurious, lazy, entitled, directionless and irresponsible.”

Far calmer was Perplexity CEO Aravind Srinivas, who has already adapted and integrated DeepSeek into his search engine.

Srinivas offered a technical defense of DeepSeek against allegations that it had copied OpenAI. “There’s a lot of misconception that China ‘just cloned’ the outputs of OpenAI,” he tweeted. “This is far from true and reflects an incomplete understanding of how these models are trained in the first place.

“DeepSeek R1 has figured out RL (reinforcement learning) fine-tuning… The main reason it’s so good is because it learned reasoning from scratch rather than imitating other humans or models.”

In other words, DeepSeek did the work.

Indeed, Zitron, clearly irked by the insinuation that DeepSeek had done something dishonest, pointed to OpenAI and Anthropic as examples of what he called “the antithesis of Silicon Valley”: companies more concerned with marketing than with innovation.

His conclusion? The push to frame DeepSeek as a Chinese threat conveniently masks a more uncomfortable truth: U.S. tech companies have lost their drive to create meaningful solutions.

“Personally, I genuinely want OpenAI to point a finger at DeepSeek and accuse it of IP theft, purely for the hypocrisy factor,” Zitron said. “This is a company that exists purely from the wholesale industrial larceny of content produced by individual creators and internet users, and now it’s worried about a rival pilfering its own goods? Cry more, Altman, you nasty little worm.”

Edited by Josh Quittner and Sebastian Sinclair
