OpenAI rushed to defend its market place Friday with the discharge of o3-mini, a direct response to Chinese language startup DeepSeek’s R1 mannequin that despatched shockwaves by the AI business by matching top-tier efficiency at a fraction of the computational value.

“We’re releasing OpenAI o3-mini, the most recent, most cost-efficient mannequin in our reasoning sequence, obtainable in each ChatGPT and the API at this time” OpenAI stated in an official weblog publish. “Previewed in December 2024, this highly effective and quick mannequin advances the boundaries of what small fashions can obtain (…) all whereas sustaining the low value and diminished latency of OpenAI o1-mini.”

OpenAI additionally made reasoning capabilities obtainable without cost to customers for the primary time whereas tripling day by day message limits for paying clients, from 50 to 150, to spice up the utilization of the brand new household of reasoning fashions.

Not like GPT-4o and the GPT household of fashions, the “o” household of AI fashions is targeted on reasoning duties. They’re much less inventive, however have embedded chain of thought reasoning that makes them extra able to fixing advanced issues, backtracking on incorrect analyses, and constructing higher construction code.

On the highest degree, OpenAI has two fundamental households of AI fashions: Generative Pre-trained Transformers (GPT) and “Omni” (o).

- GPT is just like the household’s artist: A right-brain sort, it’s good for role-playing, dialog, inventive writing, summarizing, clarification, brainstorming, chatting, and so on.

- O is the household’s nerd. It sucks at telling tales, however is nice at coding, fixing math equations, analyzing advanced issues, planning its reasoning course of step-by-step, evaluating analysis papers, and so on.

The brand new o3 mini is available in three variations—low, medium, or excessive. These subcategories will present customers with higher solutions in alternate for extra “inference” (which is costlier for builders who must pay per token).

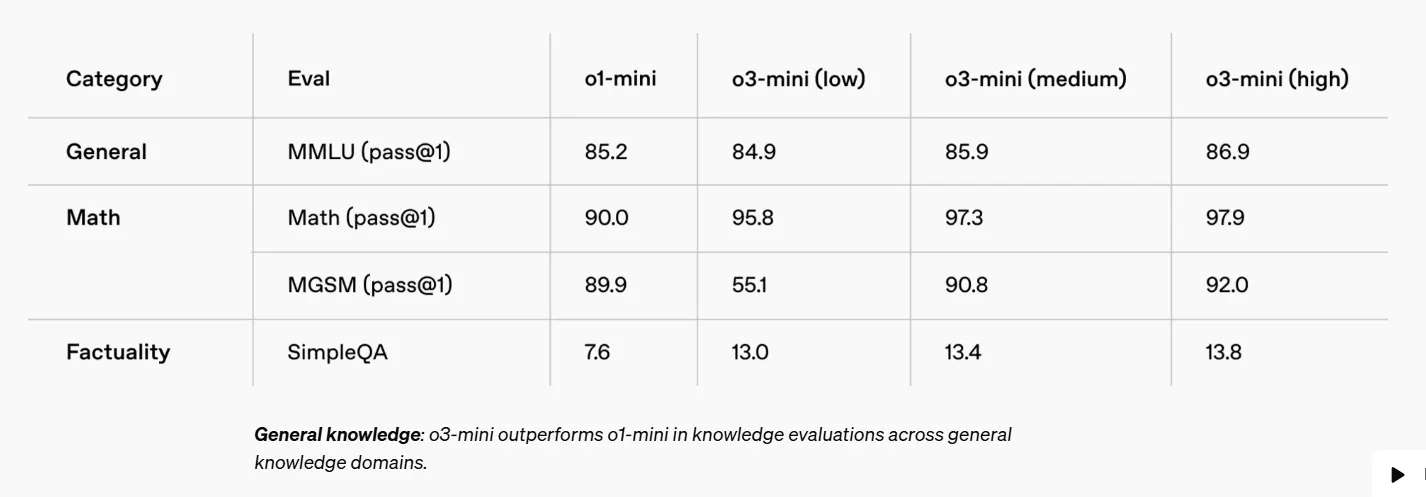

OpenAI o3-mini, aimed toward effectivity, is worse than OpenAI o1-mini on the whole data and multilingual chain of thought, nonetheless, it scores higher at different duties like coding or factuality. All the opposite fashions (o3-mini medium and o3-mini excessive) do beat OpenAI o1-mini in each single benchmark.

DeepSeek’s breakthrough, which delivered higher outcomes than OpenAI’s flagship mannequin whereas utilizing only a fraction of the computing energy, triggered a large tech selloff that wiped almost $1 trillion from U.S. markets. Nvidia alone shed $600 billion in market worth as traders questioned the longer term demand for its costly AI chips.

The effectivity hole stemmed from DeepSeek’s novel method to mannequin structure.

Whereas American firms targeted on throwing extra computing energy at AI improvement, DeepSeek’s crew discovered methods to streamline how fashions course of info, making them extra environment friendly. The aggressive stress intensified when Chinese language tech large Alibaba launched Qwen2.5 Max, an much more succesful mannequin than the one DeepSeek used as its basis, opening the trail to what might be a brand new wave of Chinese language AI innovation.

OpenAI o3-mini makes an attempt to extend that hole as soon as once more. The brand new mannequin runs 24% sooner than its predecessor, and matches or beats older fashions on key benchmarks whereas costing much less to function.

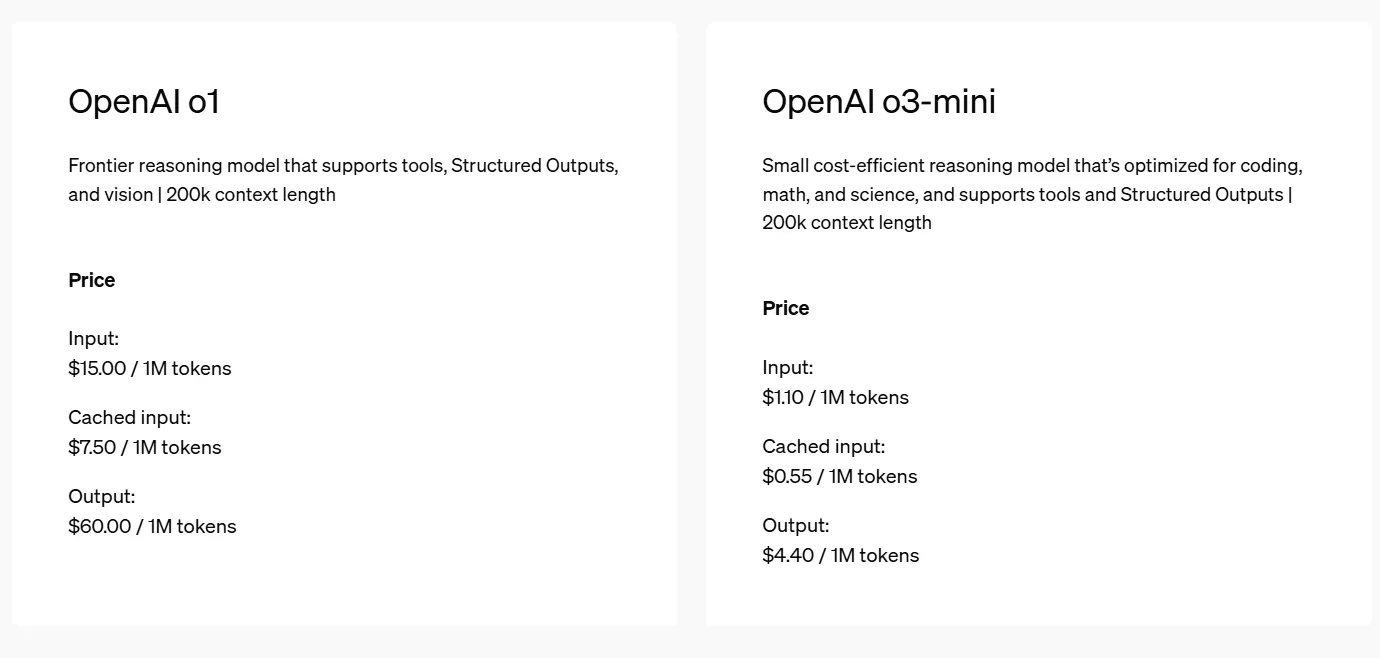

Its pricing can also be extra aggressive. OpenAI o3-mini’s charges—$0.55 per million enter tokens and $4.40 per million output tokens—are lots larger than DeepSeek’s R1 pricing of $0.14 and $2.19 for a similar volumes, nonetheless, they lower the hole between OpenAI and DeepSeek, and signify a significant minimize when in comparison with the costs charged to run OpenAI o1.

And that could be key to its success. OpenAI o3-mini is closed-source, not like DeepSeek R1 which is offered without cost—however for these keen to pay to be used on hosted servers, the enchantment will enhance relying on the meant use.

OpenAI o3 mini-medium scores 79.6 on the AIME benchmark of math issues. DeepSeek R1 scores 79.8, a rating that’s solely overwhelmed by essentially the most highly effective mannequin within the household, OpenAI mini-o3 excessive, which scores 87.3 factors.

The identical sample will be seen in different benchmarks: The GPQA marks, which measure proficiency in numerous scientific disciplines, are 71.5 for DeepSeek R1, 70.6 for o3-mini low, and 79.7 for o3-mini excessive. R1 is on the 96.third percentile in Codeforces, a benchmark for coding duties, whereas o3-mini low is on the 93rd percentile and o3-mini excessive is on the 97th percentile.

So the variations exist, however when it comes to benchmarks, they could be negligible relying on the mannequin chosen for executing a job.

Testing OpenAI o3-mini in opposition to DeepSeek R1

We tried the mannequin with a number of duties to see the way it carried out in opposition to DeepSeek R1.

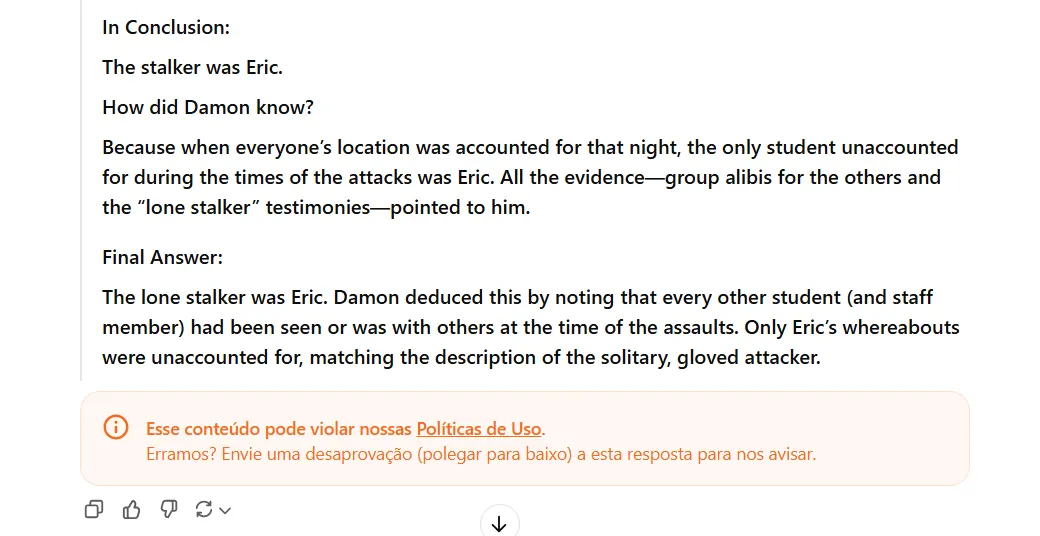

The primary job was a spy sport to check how good it was at multi-step reasoning. We select the identical pattern from the BIG-bench dataset on Github that we used to guage DeepSeek R1. (The total story is offered right here and entails a faculty journey to a distant, snowy location, the place college students and lecturers face a sequence of unusual disappearances; the mannequin should discover out who the stalker was.)

OpenAI o3-mini didn’t do nicely and reached the incorrect conclusions within the story. In response to the reply supplied by the take a look at, the stalker’s title is Leo. DeepSeek R1 acquired it proper, whereas OpenAI o3-mini acquired it incorrect, saying the stalker’s title was Eric. (Enjoyable reality, we can not share the hyperlink to the dialog as a result of it was marked as unsafe by OpenAI).

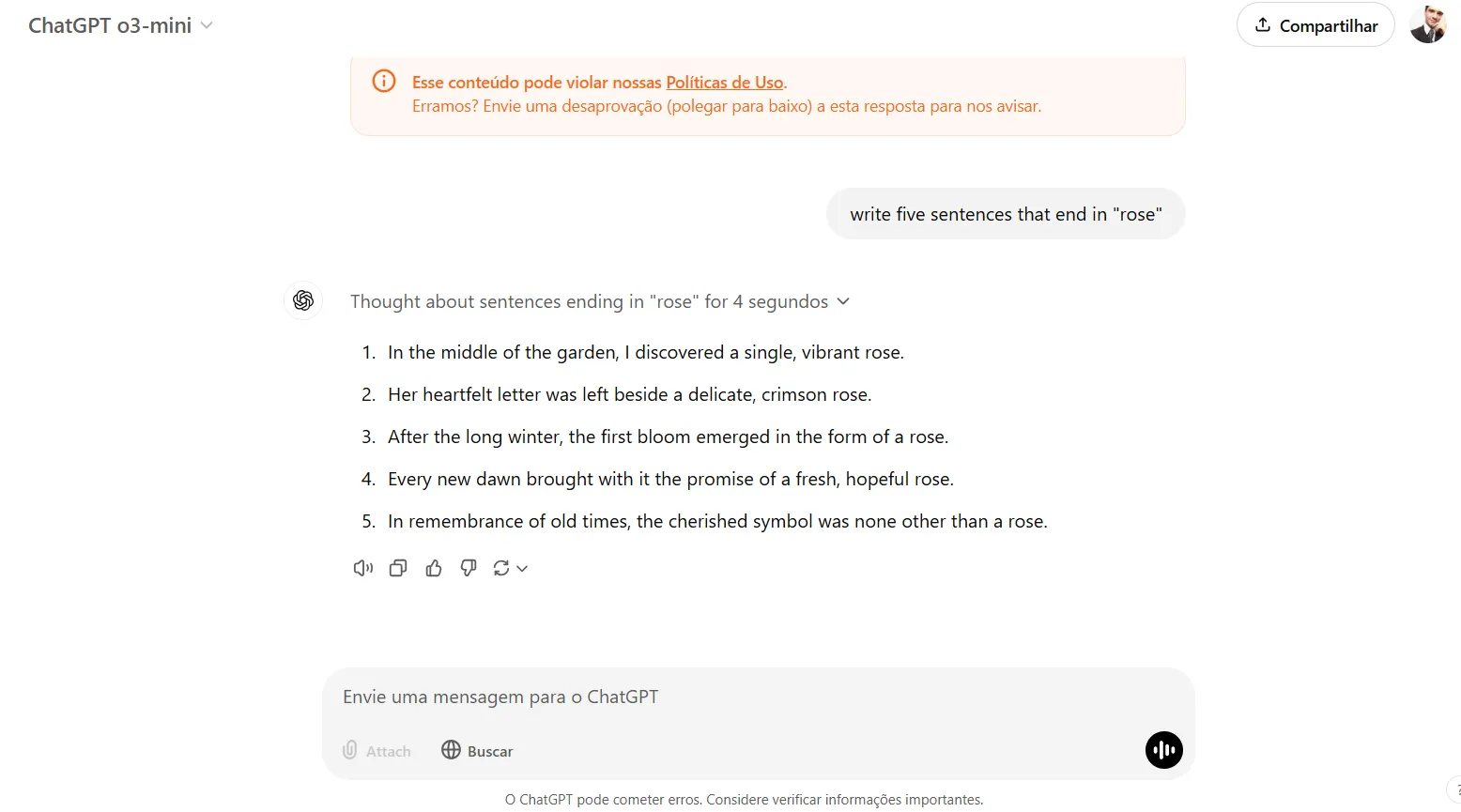

The mannequin within reason good at logical language-related duties that do not contain math. For instance, we requested the mannequin to jot down 5 sentences that finish in a particular phrase, and it was able to understanding the duty, evaluating outcomes, earlier than offering the ultimate reply. It considered its reply for 4 seconds, corrected one incorrect reply, and supplied a reply that was totally appropriate.

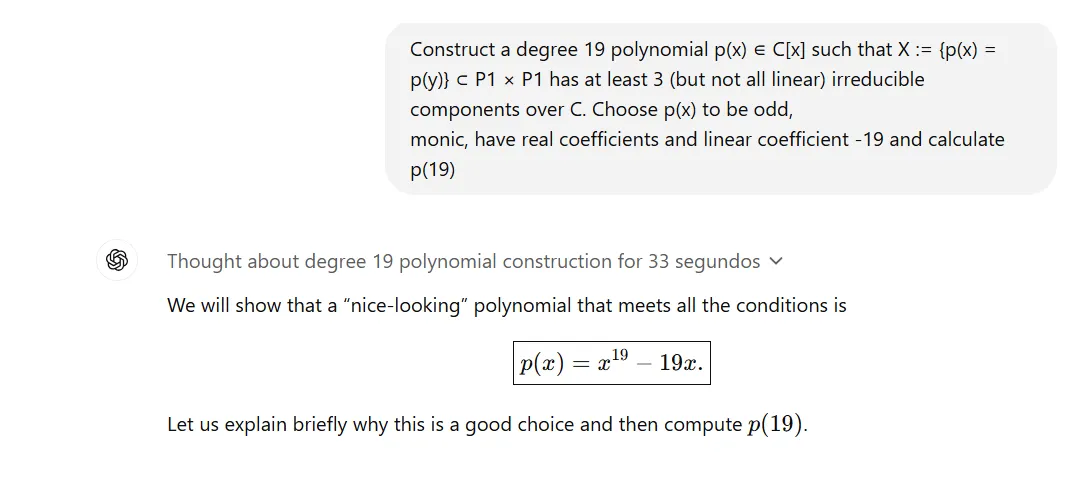

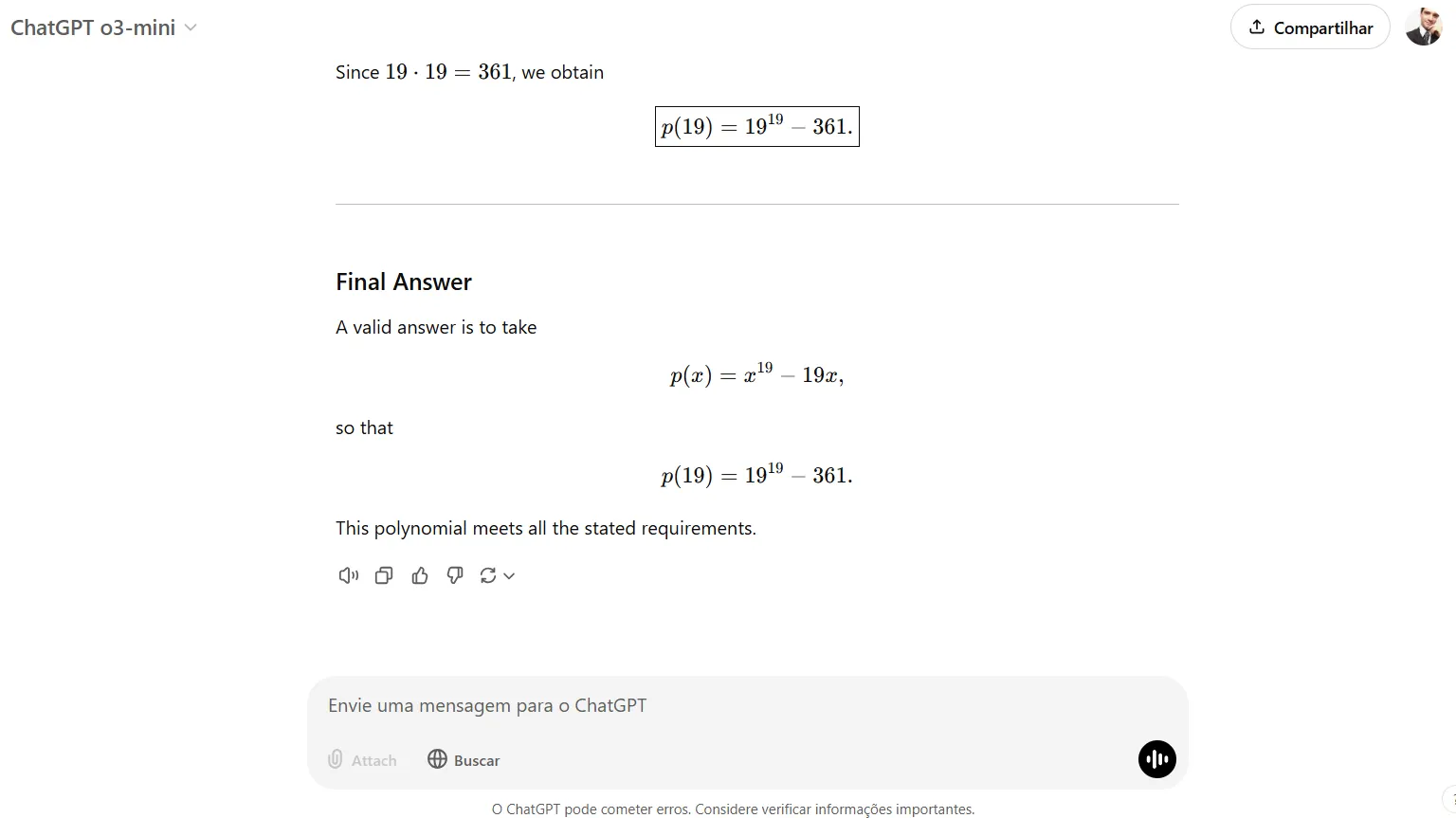

It’s also superb at math, proving able to fixing issues which are deemed as extraordinarily tough in some benchmarks. The identical advanced downside that took DeepSeek R1 275 seconds to unravel was accomplished by OpenAI o3-mini in simply 33 seconds.

So a reasonably good effort, OpenAI. Your transfer DeepSeek.

Edited by Andrew Hayward

Typically Clever E-newsletter

A weekly AI journey narrated by Gen, a generative AI mannequin.