Elon Musk announced that the next generation of his company’s AI chatbot Grok may be just weeks away from launch, describing it as “scary smart” and claiming it had already outperformed every other AI model in testing.

The xAI CEO made these remarks during the World Governments Summit in Dubai on February 13.

“At times, I think Grok-3 is kind of scary smart,” Musk said. “It comes up with solutions that you just wouldn’t even anticipate, non-obvious solutions.”

The chatbot’s developers used distinctive training methods for Grok-3. Instead of using real-world data like ChatGPT, Grok-3 relied on synthetic data and employed a self-correcting mechanism to maintain logical consistency. It got so accurate, Musk claimed, that even when it encountered incorrect information, the system reflected on the data and removed content that didn’t match reality.

The computational demands for training Grok-3 were massive. Experts calculate that it required 200 million GPU hours, dwarfing its Chinese competitor DeepSeek-V3’s 2.7 million hours. It ran on xAI’s Colossus supercluster with 100,000 Nvidia H100 GPUs, ten times more computing power than its predecessor. Even without fine-tuning, Musk claimed the base model performed better than Grok-2.
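For a sense of scale, the figures quoted above can be put through some quick arithmetic. The sketch below uses only the numbers reported in this article (which are outside estimates, not official xAI figures). The wall-clock estimate also assumes, purely for illustration, that all 100,000 GPUs ran continuously at full utilization; the function names are hypothetical.

```python
# Figures as quoted in the article; these are estimates, not official numbers.
GROK3_GPU_HOURS = 200_000_000      # estimated Grok-3 training cost
DEEPSEEK_V3_GPU_HOURS = 2_700_000  # reported DeepSeek-V3 training cost
COLOSSUS_GPUS = 100_000            # Nvidia H100 GPUs in the Colossus cluster


def compute_ratio(a_hours: float, b_hours: float) -> float:
    """How many times more GPU hours run A used than run B."""
    return a_hours / b_hours


def wall_clock_days(gpu_hours: float, gpu_count: int) -> float:
    """Implied training duration in days.

    Assumption (ours, not the article's): every GPU is busy for the
    whole run with perfect utilization, so this is a lower bound.
    """
    return gpu_hours / gpu_count / 24


ratio = compute_ratio(GROK3_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)
days = wall_clock_days(GROK3_GPU_HOURS, COLOSSUS_GPUS)

print(f"Grok-3 used roughly {ratio:.0f}x the GPU hours of DeepSeek-V3")
print(f"Implied wall-clock time on Colossus: about {days:.0f} days")
```

Under those assumptions, the quoted numbers work out to roughly 74 times DeepSeek-V3's GPU hours and a little under three months of continuous training.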

Grok-3’s integration with X, Musk’s social media platform, gave it the advantage of being able to scrape the social media app in real time instead of relying on browsing the web. The system can pull real-time data from X, and features what the company called “Unhinged Mode,” which, according to xAI’s own FAQ, is “intended to be objectionable, inappropriate, and offensive.”

The system isn’t quite ready for prime time, though. Musk compared the remaining work to finishing a house: “That last 5% where you do the drywall and do the painting and the trimming, even though it’s not much work, it transforms the house.”

Still, it could be released sooner than OpenAI’s GPT-4.5, at least, which Sam Altman said would be released in weeks or months.

“Probably (Grok-3) gets released in about a week or two,” Musk said. He didn’t clarify whether the new version would be publicly available or put behind a subscription, as happened with Grok-2 at first.

Competition in the AI space has intensified. While ChatGPT dominated market share in 2024, Chinese open-source model DeepSeek-V3 emerged as a serious contender, outperforming both GPT-4o and Meta’s Llama 3.1 despite using far fewer resources.

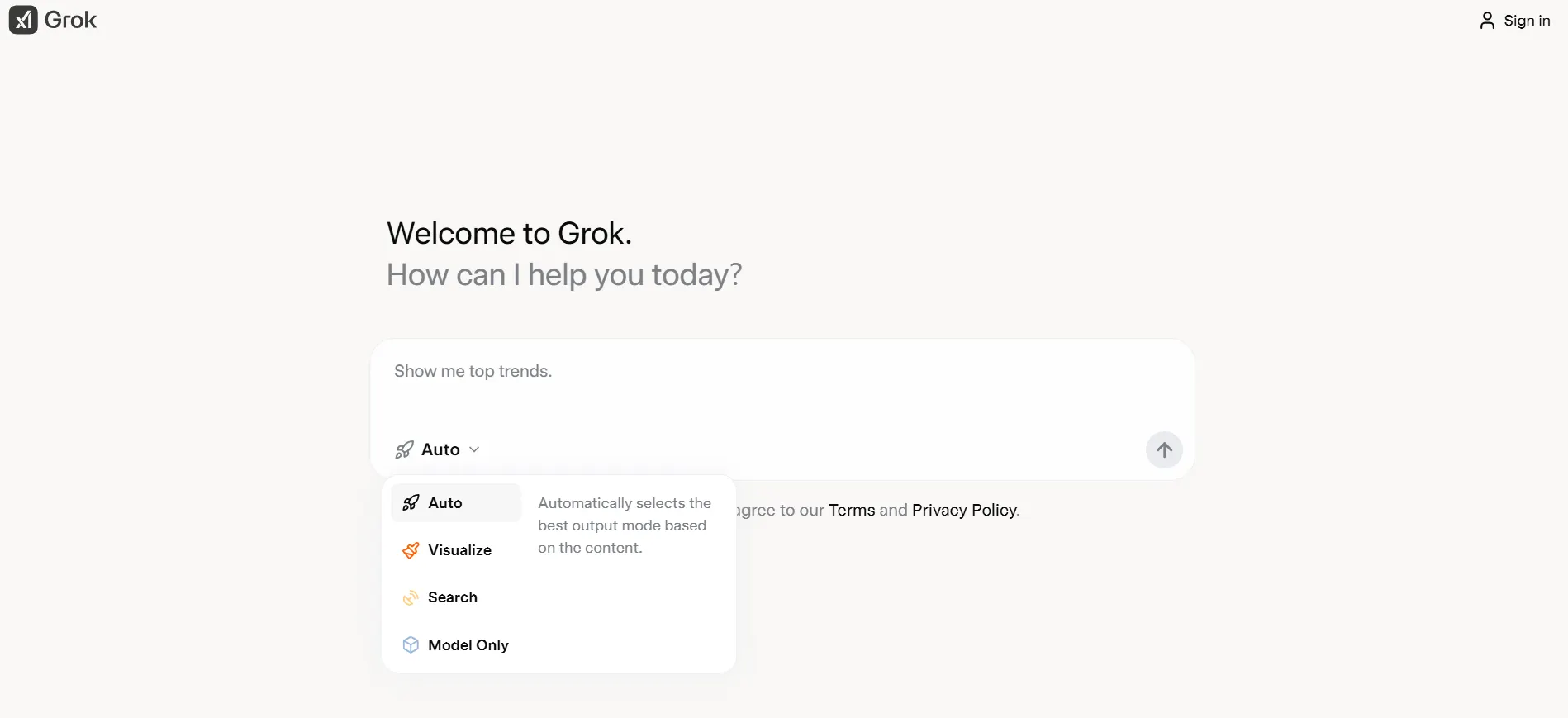

Grok was first made available on X Premium, which significantly limited its availability. It was later released free to all users of Musk’s social media platform, with a new standalone website now available for everyone else.

xAI enters reasoning AI battle

Major AI players are switching focus to reasoning models, developing AI models that are able to reflect on specific problems and find ways to solve them after a long and extensive chain of thought.

The idea was first explored by Matt Shumer, back when Reflection 70B was announced. The model was trained to incorporate Chain of Thought reasoning, and was supposed to beat Claude 3.5 Sonnet at complex tasks despite being just a Llama 70B finetune.

I’m excited to announce Reflection 70B, the world’s top open-source model.

Trained using Reflection-Tuning, a technique developed to enable LLMs to fix their own mistakes.

405B coming next week – we expect it to be the best model in the world.

Built w/ @GlaiveAI.

Read on ⬇️: pic.twitter.com/kZPW1plJuo

— Matt Shumer (@mattshumer_) September 5, 2024

That didn’t work, but just a few weeks later, OpenAI announced its “OpenAI o1” reasoning model, applying that same concept effectively. That model set a new standard for the logical capabilities AI models can exhibit, and was seen as OpenAI’s moat to dominate the AI industry.

But the launch of DeepSeek turned the world upside down. A team of Chinese researchers built a model that was better than o1 at a fraction of the cost, and made it open source, too.

Since then, OpenAI announced that its future models would be merged into one jack-of-all-trades AI that leaves the traditional GPT architecture behind and focuses on deep reasoning first.

xAI seems to be following the markets.

“Grok-3 has very powerful reasoning capabilities,” Elon Musk said.

He didn’t disclose more information about the model’s structure. The current version of Grok-2 sits in 18th place in the LLM Arena, well below rivals like GPT, Claude, Gemini, Qwen or DeepSeek.

Looking ahead, xAI plans to scale its computing infrastructure to 1 million GPUs for future models with “trillions of parameters.” The ultimate goal, according to Musk, is to advance toward artificial general intelligence through increasingly sophisticated models.

Edited by Andrew Hayward
