OpenAI unveiled GPT-4.1 on Monday, a trio of new AI models with context windows of up to one million tokens, enough to process entire codebases or small novels in one go. The lineup includes standard GPT-4.1, Mini, and Nano variants, all targeting developers.

The company’s latest offering comes just weeks after releasing GPT-4.5, creating a timeline that makes about as much sense as the release order of the Star Wars movies. “The decision to name these 4.1 was intentional. I mean, it’s not just that we’re bad at naming,” OpenAI product lead Kevin Weil said during the announcement, but we’re still trying to figure out what those intentions were.

GPT-4.1 shows pretty interesting capabilities. According to OpenAI, it achieved 55% accuracy on the SWE-bench coding benchmark (up from GPT-4o’s 33%) while costing 26% less. The new Nano variant, billed as the company’s “smallest, fastest, cheapest model ever,” runs at just 12 cents per million tokens.

Also, OpenAI won’t charge extra for processing massive documents and actually using the one-million-token context. “There is no pricing bump for long context,” Weil emphasized.
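To make the pricing claim concrete, here is a minimal sketch of the arithmetic. It assumes a single flat rate applies to every token (the 12 cents per million quoted for Nano) and ignores any difference between input and output pricing, which the article does not break down. The function name `estimate_cost_usd` is hypothetical and not part of any OpenAI library.

```python
# Hypothetical helper: estimates cost under one flat per-token rate.
# Assumption: the 12-cents-per-million figure quoted for Nano applies to all tokens.
NANO_USD_PER_MILLION_TOKENS = 0.12


def estimate_cost_usd(tokens: int, usd_per_million: float = NANO_USD_PER_MILLION_TOKENS) -> float:
    """Return the estimated cost in US dollars for processing `tokens` tokens."""
    if tokens < 0:
        raise ValueError("tokens must be non-negative")
    # No long-context surcharge: cost scales linearly with the token count.
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    for label, tokens in [("full 1M-token context", 1_000_000), ("half context", 500_000)]:
        print(f"{label}: ${estimate_cost_usd(tokens):.4f}")
```

Because there is no pricing bump for long context, doubling the prompt size simply doubles the estimate under this assumption.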

The new models show impressive performance improvements. In a live demonstration, GPT-4.1 generated a complete web application that could analyze a 450,000-token NASA server log file from 1995. OpenAI claims the model passes this test with nearly 100% accuracy even at a million tokens of context.

Michelle, OpenAI’s post-training research lead, also showcased the models’ enhanced instruction-following abilities. “The model follows all your instructions to the tea,” she said, as GPT-4.1 dutifully adhered to complex formatting requirements without the usual AI tendency to “creatively interpret” commands.

How Not to Count: OpenAI’s Guide to Naming Models

The release of GPT-4.1 after GPT-4.5 feels like watching someone count “5, 6, 4, 7” with a straight face. It’s the latest chapter in OpenAI’s bizarre versioning saga.

After releasing GPT-4, the company upgraded the model with multimodal capabilities. It decided to call that new model GPT-4o (“o” for “omni”), a name that could also be read as “four zero” depending on the font you use.

Then, OpenAI released a reasoning-focused model that was just called “o1.” But don’t confuse OpenAI’s GPT-4o with OpenAI’s o1, because they are not the same. Nobody knows why they picked this name, but as a general rule of thumb, GPT-4o was a “normal” LLM while OpenAI o1 was a reasoning model.

A few months after the release of OpenAI o1 came OpenAI o3.

But what about o2? Well, that model never existed.

“You would think logically (our new model) maybe should have been called o2, but out of respect to our friends at Telefonica, and in the grand tradition of OpenAI being really truly bad at names, it’s going to be called o3,” Sam Altman said during the model’s announcement.

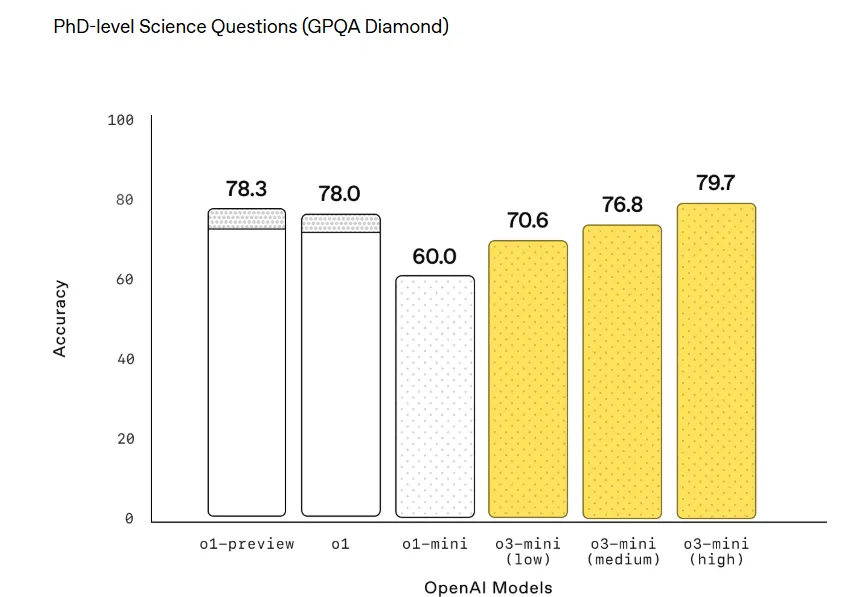

The lineup further fragments with variants like the normal o3 and a smaller, more efficient version called o3 mini. However, they also released a model named “OpenAI o3 mini-high,” which puts two absolute antonyms next to each other because AI can do miraculous things. In essence, OpenAI o3 mini-high is a more powerful version than o3 mini, but not as powerful as OpenAI o3, which is referenced in a single chart by OpenAI as “o3 (Medium),” as it should be. Right now ChatGPT users can select either OpenAI o3 mini or OpenAI o3 mini-high. The normal version is nowhere to be found.

Also, we don’t want to confuse you any further, but OpenAI already announced plans to launch o4 soon. And, of course, don’t confuse o4 with 4o because they are absolutely not the same: o4 reasons, 4o does not.

Now, let’s go back to the newly announced GPT-4.1. The model is so good that it’s going to kill GPT-4.5 soon, making that model the shortest-lived LLM in the history of ChatGPT. “We’re announcing that we’re going to be deprecating GPT-4.5 in the API,” Weil declared, giving developers a three-month deadline to switch. “We really do need those GPUs back,” he added, confirming that even OpenAI can’t escape the silicon shortage that’s plaguing the industry.

At this rate, we’re bound to see GPT-π or GPT-4.√2 before the year ends. But hey, at least they get better with time, regardless of the names.

The models are already available via API and in OpenAI’s playground, but they won’t be available in the user-friendly ChatGPT UI, at least not yet.

Edited by James Rubin
