In short

- Tiny, open-source AI model Dia-1.6B claims to beat industry giants like ElevenLabs or Sesame at emotional speech synthesis.

- Creating convincing emotional AI speech remains challenging because of the complexity of human emotions and technical limitations.

- While it matches up well against the competition, the “uncanny valley” problem persists: AI voices sound human but fail to convey nuanced emotions.

Nari Labs has released Dia-1.6B, an open-source text-to-speech model that claims to outperform established players like ElevenLabs and Sesame in generating emotionally expressive speech. The model is super tiny, with just 1.6 billion parameters, but can still create realistic dialogue complete with laughter, coughs, and emotional inflections.

It can even scream in terror.

We just solved text-to-speech AI.

This model can simulate perfect emotion, screaming and show genuine alarm.

- clearly beats 11 labs and Sesame

- it’s only 1.6B params

- streams realtime on 1 GPU

- made by a 1.5 person team in Korea!!

It’s called Dia by Nari Labs. pic.twitter.com/rpeZ5lOe9z

Deedy (@deedydas), April 22, 2025

While that might not sound like a huge technical feat, even OpenAI’s ChatGPT is flummoxed by the request: “I can’t scream but I can definitely speak up,” its chatbot replied when asked.

Now, some AI models can scream if you ask them to. But it’s not something that happens naturally or organically, which, apparently, is Dia-1.6B’s superpower. It understands that, in certain situations, a scream is appropriate.

Nari’s model runs in real time on a single GPU with 10GB of VRAM, processing about 40 tokens per second on an Nvidia A4000. Unlike larger closed-source alternatives, Dia-1.6B is freely available under the Apache 2.0 license through Hugging Face and GitHub repositories.

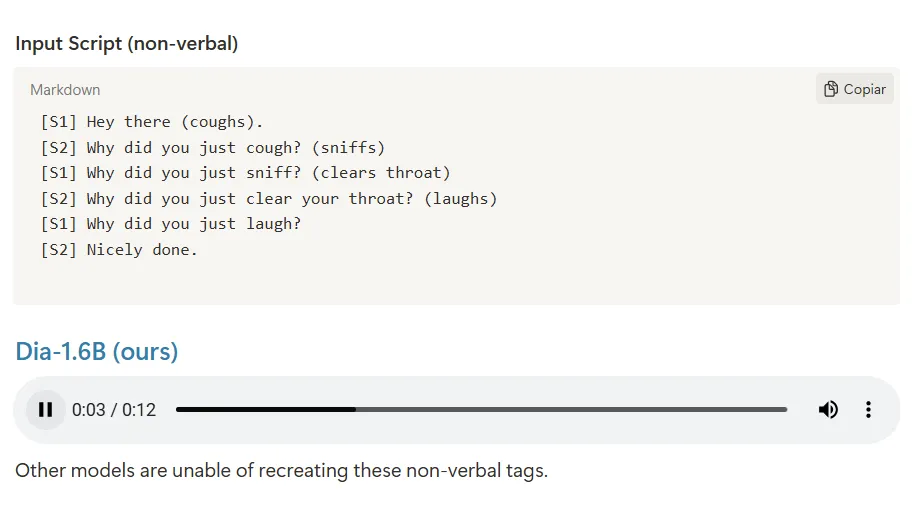

“One ridiculous goal: build a TTS model that rivals NotebookLM Podcast, ElevenLabs Studio, and Sesame CSM. Somehow we pulled it off,” Nari Labs co-founder Toby Kim posted on X when announcing the model. Side-by-side comparisons show Dia handling standard dialogue and nonverbal expressions better than competitors, which often flatten delivery or skip nonverbal tags entirely.

The race to make emotional AI

AI platforms are increasingly focused on making their text-to-speech models show emotion, addressing a missing element in human-machine interaction. However, they are not perfect, and most of the models, open or closed, tend to create an uncanny valley effect that diminishes the user experience.

We have tried and compared a few different platforms that focus on this specific topic of emotional speech, and most of them are pretty good as long as users get into the right mindset and know their limitations. However, the technology is still far from convincing.

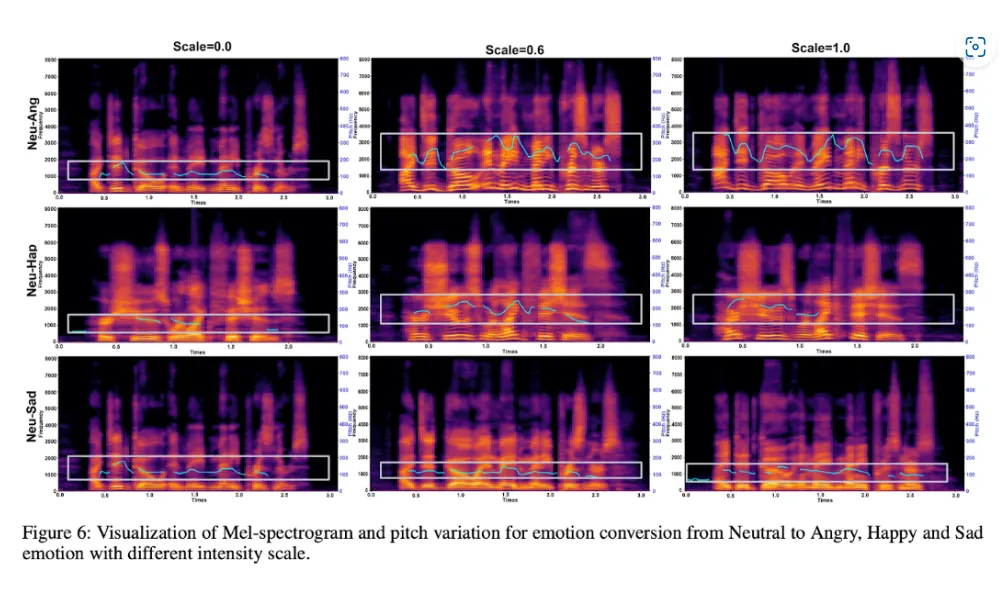

To tackle this problem, researchers are employing various techniques. Some train models on datasets with emotional labels, allowing AI to learn the acoustic patterns associated with different emotional states. Others use deep neural networks and large language models to analyze contextual cues for generating appropriate emotional tones.

ElevenLabs, one of the market leaders, tries to interpret emotional context directly from text input, using linguistic cues, sentence structure, and punctuation to infer the appropriate emotional tone. Its flagship model, Eleven Multilingual v2, is known for its rich emotional expression across 29 languages.

Meanwhile, OpenAI recently launched “gpt-4o-mini-tts” with customizable emotional expression. During demonstrations, the firm highlighted the ability to specify emotions like “apologetic” for customer support scenarios, pricing the service at 1.5 cents per minute to make it accessible for developers. Its state-of-the-art Advanced Voice mode is good at mimicking human emotion, but is so exaggerated and enthusiastic that it could not compete in our tests against other alternatives like Hume.

Where Dia-1.6B potentially breaks new ground is in how it handles nonverbal communication. The model can synthesize laughter, coughing, and throat clearing when triggered by specific text cues like “(laughs)” or “(coughs)”, adding a layer of realism often missing in standard TTS outputs.

Beyond Dia-1.6B, other notable open-source projects include EmotiVoice, a multi-voice TTS engine that supports emotion as a controllable style factor, and Orpheus, known for ultra-low latency and lifelike emotional expression.
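To make the cue mechanism concrete, here is a minimal sketch of how parenthetical cues could be separated from spoken text before a script reaches a TTS model. This is not Dia's code or API: the function name `split_cues` and the cue list are assumptions made for illustration, and only "(laughs)" and "(coughs)" are cue strings quoted in the article.

```python
import re

# Hypothetical cue list; only "laughs" and "coughs" are quoted in the article.
KNOWN_CUES = {"laughs", "coughs", "clears throat"}

# Matches a parenthetical with no nested parentheses, e.g. "(laughs)".
CUE_PATTERN = re.compile(r"\(([^()]+)\)")


def split_cues(line: str) -> list[tuple[str, str]]:
    """Split a script line into ("speech", text) and ("cue", name) segments."""
    segments = []
    pos = 0
    for match in CUE_PATTERN.finditer(line):
        name = match.group(1).strip().lower()
        if name not in KNOWN_CUES:
            continue  # unknown parentheticals stay inside the spoken text
        spoken = line[pos:match.start()].strip()
        if spoken:
            segments.append(("speech", spoken))
        segments.append(("cue", name))
        pos = match.end()
    tail = line[pos:].strip()
    if tail:
        segments.append(("speech", tail))
    return segments
```

A line such as `"That is hilarious (laughs) sorry (coughs)"` would come back as alternating speech and cue segments, which is roughly the distinction a model has to make when it renders a tag as a sound rather than reading it aloud.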

It’s hard to be human

But why is emotional speech so hard? After all, AI models stopped sounding robotic a long time ago.

Well, it seems like naturalness and emotionality are two different beasts. A model can sound human and have a fluid, convincing tone, but completely fail at conveying emotion beyond simple narration.

“In my view, emotional speech synthesis is hard because the data it relies on lacks emotional granularity. Most training datasets capture speech that is clean and intelligible, but not deeply expressive,” Kaveh Vahdat, CEO of the AI video generation company RiseAngle, told Decrypt. “Emotion is not just tone or volume; it is context, pacing, tension, and hesitation. These features are often implicit, and rarely labeled in a way machines can learn from.”

“Even when emotion tags are used, they tend to flatten the complexity of real human affect into broad categories like ‘happy’ or ‘angry’, which is far from how emotion actually works in speech,” Vahdat argued.

We tried Dia, and it is actually good enough. It generated around one second of audio per second of inference, and it does convey tonal emotions, but they are so exaggerated that the result doesn’t feel natural. And this is the crux of the whole problem: models lack so much contextual awareness that it is hard to isolate a single emotion without additional cues and make it coherent enough for humans to actually believe it is part of a natural interaction.
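For readers who want to put a number on "one second of audio per second of inference," that ratio is commonly expressed as a real-time factor. The sketch below is a generic calculation, not a benchmark of Dia; the function name `real_time_factor` and the sample figures are illustrative assumptions.

```python
def real_time_factor(inference_seconds: float, audio_seconds: float) -> float:
    """Return inference time divided by the duration of the audio produced.

    A value of 1.0 means the model generates audio exactly as fast as it
    plays back; values below 1.0 are faster than real time.
    """
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return inference_seconds / audio_seconds


# Illustrative numbers only: 10 s of compute for a 10 s clip.
rtf = real_time_factor(inference_seconds=10.0, audio_seconds=10.0)
```

By this measure, the behavior we observed corresponds to a real-time factor of roughly 1.0.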

The “uncanny valley” effect poses a particular challenge, as synthetic speech cannot compensate for a neutral robotic voice simply by adopting a more emotional tone.

And more technical hurdles abound. AI systems often perform poorly when tested on speakers not included in their training data, an issue known as low classification accuracy in speaker-independent experiments. Real-time processing of emotional speech requires substantial computational power, limiting deployment on consumer devices.

Data quality and bias also present significant obstacles. Training AI for emotional speech requires large, diverse datasets capturing emotions across demographics, languages, and contexts. Systems trained on specific groups may underperform with others. For instance, AI trained primarily on Caucasian speech patterns might struggle with other demographics.

Perhaps most fundamentally, some researchers argue that AI cannot truly mimic human emotion because it lacks consciousness. While AI can simulate emotions based on patterns, it lacks the lived experience and empathy that humans bring to emotional interactions.

Guess being human is harder than it looks. Sorry, ChatGPT.
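A speaker-independent experiment simply means that no voice heard during training shows up again at test time. The following is a minimal sketch of such a split using only the Python standard library; the function name `speaker_independent_split`, the `(speaker_id, clip)` data layout, and the 20% default are assumptions for illustration rather than any particular lab's protocol.

```python
import random


def speaker_independent_split(utterances, test_fraction=0.2, seed=0):
    """Split (speaker_id, clip) pairs so no speaker appears in both sets."""
    # Work at the speaker level, not the clip level, to avoid leakage.
    speakers = sorted({speaker for speaker, _ in utterances})
    rng = random.Random(seed)
    rng.shuffle(speakers)

    # Hold out at least one speaker for testing.
    n_test = max(1, round(len(speakers) * test_fraction))
    test_speakers = set(speakers[:n_test])

    train = [u for u in utterances if u[0] not in test_speakers]
    test = [u for u in utterances if u[0] in test_speakers]
    return train, test
```

Scoring a model on the held-out speakers, rather than on unseen clips from familiar speakers, is what exposes the accuracy drop described above.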
