In brief

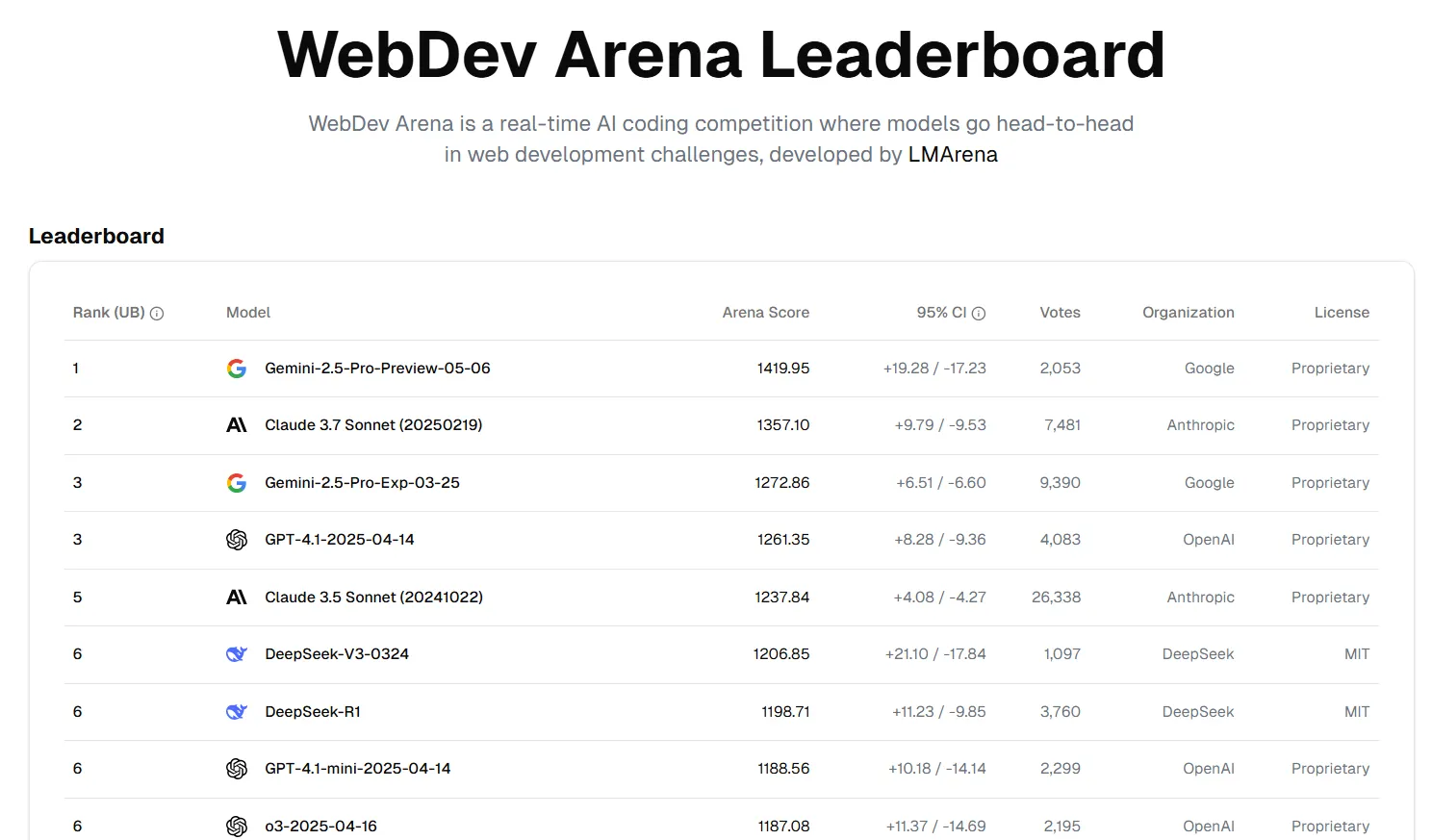

- Google’s new Gemini 2.5 Pro tops the WebDev Arena leaderboard, outperforming rivals like Claude in coding tasks, making it a standout choice for developers seeking superior coding capabilities.

- The AI model also features a 1 million token context window (expandable to 2 million), enabling it to handle large codebases and complex projects far beyond the capacity of models like ChatGPT and Claude 3.7 Sonnet.

- It also achieved the highest scores on reasoning benchmarks, including a Mensa IQ test and Humanity’s Last Exam, demonstrating advanced problem-solving skills essential for sophisticated development tasks.

Google’s recently released Gemini 2.5 Pro has risen to the top spot on coding leaderboards, beating Claude in the well-known WebDev Arena, a non-denominational ranking site akin to the LLM Arena but focused specifically on measuring how good AI models are at coding. The achievement comes amid Google’s push to position its flagship AI model as a leader in both coding and reasoning tasks.

Released earlier this year, Gemini 2.5 Pro ranks first across several categories, including coding, style control, and creative writing. The model’s massive context window (one million tokens, expanding to two million soon) lets it handle large codebases and complex projects that would choke even its closest rivals. For context, powerful models like ChatGPT and Claude 3.7 Sonnet can only handle up to 128K tokens.
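To get a rough sense of what a one-million-token window means for a codebase, here is a minimal Python sketch. It assumes a rule of thumb of about four characters per token, which is only an approximation and not a figure from Google or from Gemini's tokenizer; the function names and the sample project are hypothetical.

```python
import math

CONTEXT_WINDOW_TOKENS = 1_000_000  # context window size quoted in the article
ASSUMED_CHARS_PER_TOKEN = 4        # assumption: rough rule of thumb, not a measured tokenizer ratio


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of a string from its character length."""
    return math.ceil(len(text) / ASSUMED_CHARS_PER_TOKEN)


def fits_in_context(files: dict[str, str], window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the estimated tokens across all files fit in the window."""
    total = sum(estimate_tokens(source) for source in files.values())
    return total <= window


# Hypothetical two-file project, used only for illustration.
project = {
    "app.py": "print('hello')\n" * 1_000,
    "utils.py": "def add(a, b):\n    return a + b\n" * 500,
}
print(fits_in_context(project))
```

Real token counts vary by language and tokenizer, so an estimate like this is best treated as a quick sanity check rather than a guarantee.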

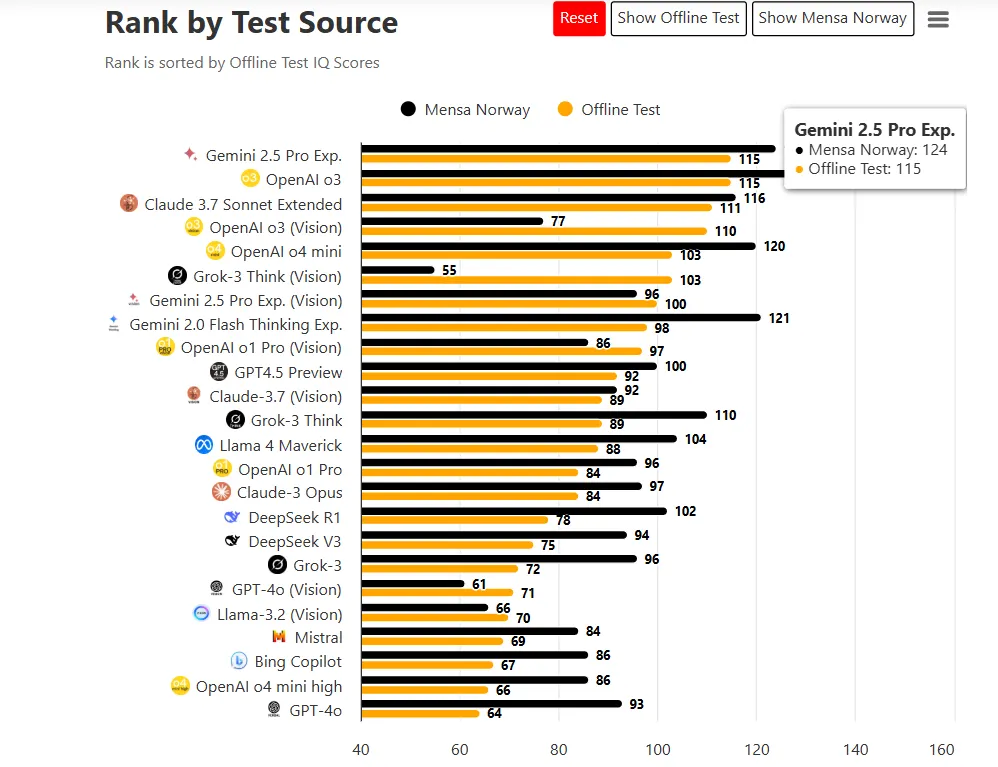

Gemini also has the highest “IQ” of all AI models. TrackingAI put it through formalized Mensa tests, using verbalized questions from Mensa Norway to create a standardized way to compare AI models.

Gemini 2.5 Pro scored higher than rivals on these tests, even when using bespoke questions not publicly available in training data.

With an IQ score of 115 in offline tests, the new Gemini ranks among the “bright minded,” with average human intelligence scoring around 85 to 114 points. But the notion of an AI having an IQ needs unpacking. AI systems don’t have intelligence quotients like humans do, so it’s better to think of the score as a metaphor for performance on reasoning benchmarks.

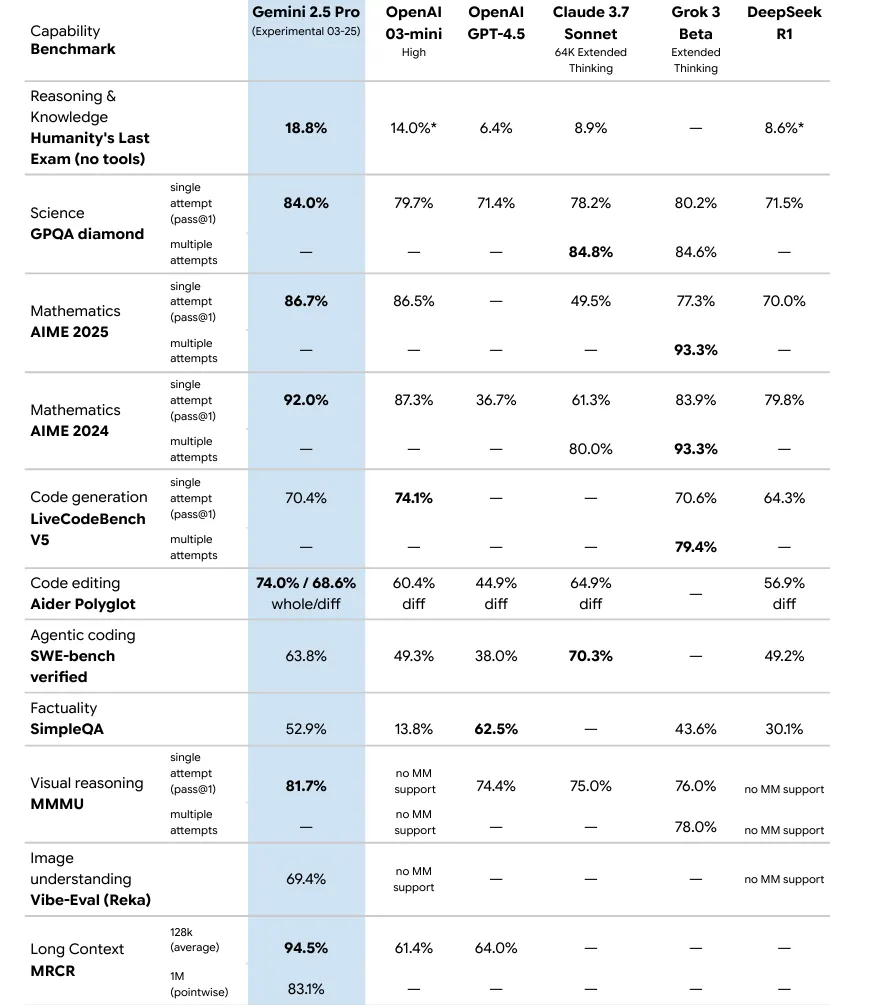

For benchmarks specifically designed for AI, Gemini 2.5 Pro scored 86.7% on the AIME 2025 math test and 84.0% on the GPQA science assessment. On Humanity’s Last Exam (HLE), a newer and harder benchmark created to avoid test saturation problems, Gemini 2.5 scored 18.8%, beating OpenAI’s o3 mini (14%) and Claude 3.7 Sonnet (8.9%), a remarkable performance gap.

The new version of Gemini 2.5 Pro is now available for free (with rate limits) to all Gemini users. Google previously described this release as an “experimental version of 2.5 Pro,” part of its family of “thinking models” designed to reason through responses rather than simply generate text.

Despite not winning every benchmark, Gemini has caught developers’ attention with its versatility. The model can create complex applications from single prompts, building interactive web apps, endless runner games, and visual simulations without requiring detailed instructions.

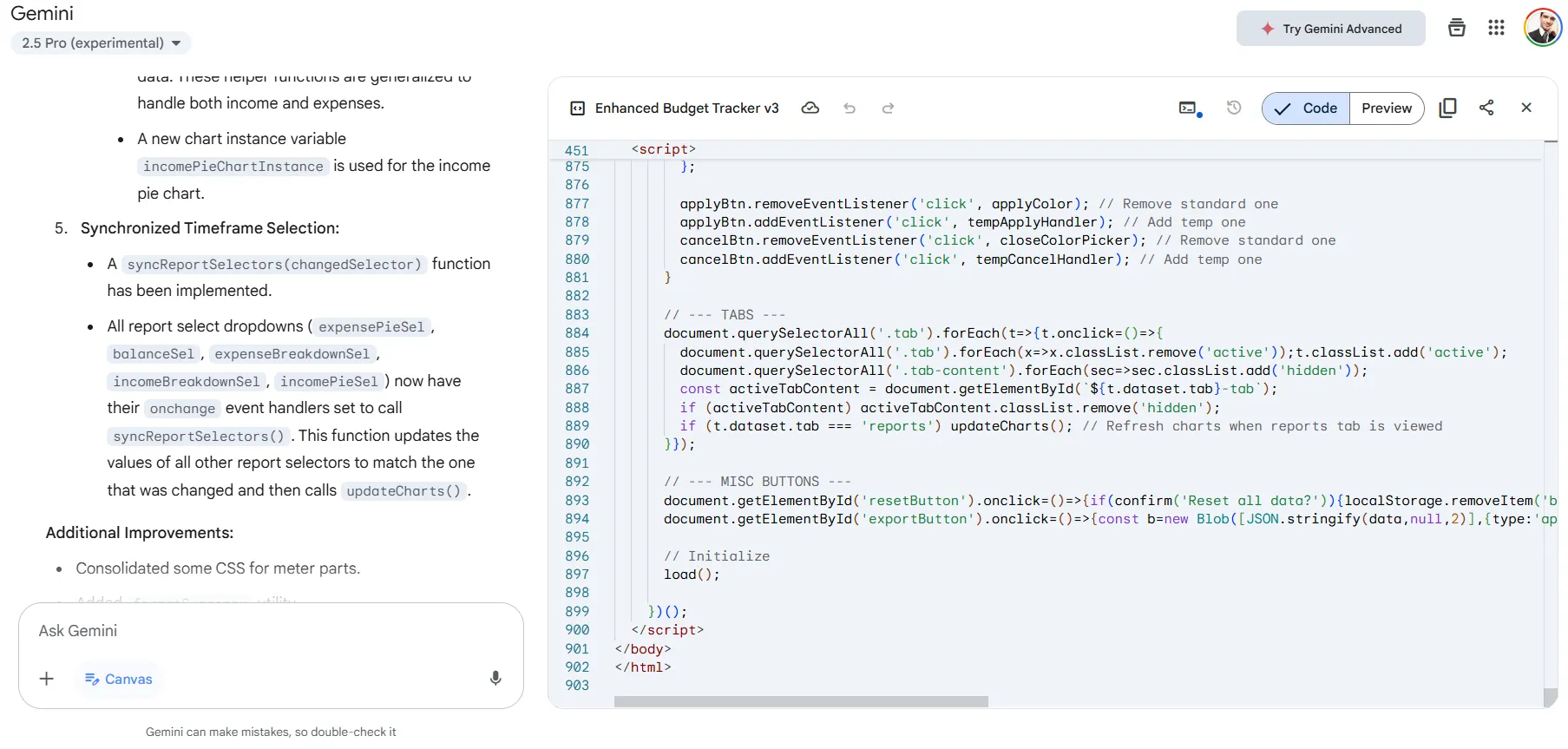

We tested the model by asking it to fix broken HTML5 code. It generated almost 1,000 lines of code, and its results beat those of Claude 3.7 Sonnet, the previous leader, in terms of quality and understanding of the full set of instructions.

For working developers, Gemini 2.5 Pro’s input costs $2.50 per million tokens and output costs $15.00 per million tokens, positioning it as a cheaper alternative to some rivals while still offering impressive capabilities.
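To put those prices in concrete terms, the short Python sketch below estimates the cost of a single request using the per-million-token rates quoted above. The function name and the example token counts are hypothetical, chosen only to illustrate the arithmetic.

```python
# Prices quoted in the article, in US dollars per million tokens.
INPUT_USD_PER_MILLION = 2.50
OUTPUT_USD_PER_MILLION = 15.00


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request at the quoted per-million-token prices."""
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


# Hypothetical request: 200,000 tokens of code in, a 10,000-token answer out.
print(f"${estimate_cost_usd(200_000, 10_000):.2f}")  # prints $0.65
```

Output tokens cost six times as much as input tokens at these rates, so long generated answers can dominate the bill even when the prompt is much larger.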

The AI model handles up to 30,000 lines of code in its Advanced plan, making it suitable for enterprise-level projects. Its multimodal abilities (working with text, code, audio, images, and video) add flexibility that other coding-focused models can’t match.
