In brief

- DeepMind’s Gemini Robotics models gave machines the ability to plan, reason, and even look up recycling rules online before acting.

- Instead of following scripts, Google’s new AI lets robots adapt, problem-solve, and pass skills between each other.

- From packing suitcases to sorting trash, robots powered by Gemini-ER 1.5 showed early steps toward general-purpose intelligence.

Google DeepMind rolled out two AI models this week that aim to make robots smarter than ever. Instead of focusing on following commands, the updated Gemini Robotics 1.5 and its companion Gemini Robotics-ER 1.5 make the robots think through problems, search the internet for information, and pass skills between different robot agents.

According to Google, these models mark a “foundational step that can navigate the complexities of the physical world with intelligence and dexterity.”

“Gemini Robotics 1.5 marks an important milestone toward solving AGI in the physical world,” Google said in the announcement. “By introducing agentic capabilities, we’re moving beyond models that react to commands and creating systems that can truly reason, plan, actively use tools, and generalize.”

And this term “generalization” is important because models struggle with it.

The robots powered by these models can now handle tasks like sorting laundry by color, packing a suitcase based on weather forecasts they find online, or checking local recycling rules to throw away trash correctly. Now, as a human, you may say, “Duh, so what?” But to do this, machines require a skill called generalization: the ability to apply knowledge to new situations.

Robots, and algorithms in general, usually struggle with this. For example, if you teach a model to fold a pair of pants, it will not be able to fold a t-shirt unless engineers programmed every step in advance.

The new models change that. They can pick up on cues, read the environment, make reasonable assumptions, and carry out multi-step tasks that used to be out of reach, or at least extremely hard, for machines.

But better doesn’t mean perfect. For example, in one of the experiments, the team showed the robots a set of objects and asked them to put each one in the correct trash bin. The robots used their cameras to visually identify each item, pulled up San Francisco’s latest recycling guidelines online, and then placed the items where they should ideally go, all on their own, just as a local human would.

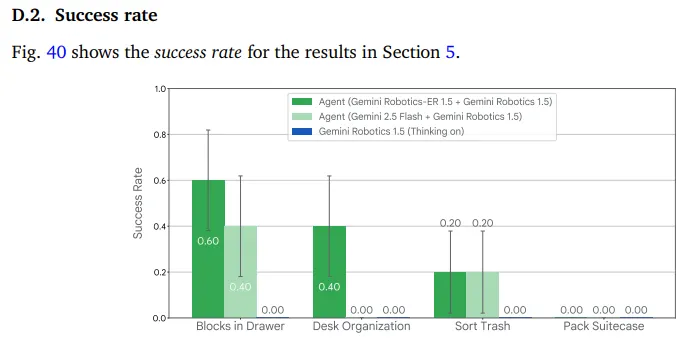

This process combines online search, visual perception, and step-by-step planning, making context-aware decisions that go beyond what older robots could achieve. The recorded success rate was between 20% and 40%; not ideal, but surprising for a model that was never able to understand these nuances before.

How Google turns robots into super-robots

The two models split the work. Gemini Robotics-ER 1.5 acts like the brain, figuring out what needs to happen and creating a step-by-step plan. It can call up Google Search when it needs information. Once it has a plan, it passes natural language instructions to Gemini Robotics 1.5, which handles the actual physical movements.

More technically speaking, the new Gemini Robotics 1.5 is a vision-language-action (VLA) model that turns visual information and instructions into motor commands, while the new Gemini Robotics-ER 1.5 is a vision-language model (VLM) that creates multistep plans to complete a mission.
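To make the division of labor concrete, here is a minimal Python sketch of a planner and executor loop applied to the trash-sorting example. It is an illustration only, not Google's implementation: the `Planner`, `Executor`, `Step`, and `fake_lookup` names are hypothetical, the lookup function stands in for a web search, and the sorting rules are made up for the example rather than taken from any real guidelines.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    instruction: str  # natural-language instruction handed to the action model
    rationale: str    # short reasoning note, kept so the plan can be inspected


class Planner:
    """Hypothetical stand-in for the reasoning model (the 'brain')."""

    def __init__(self, lookup: Callable[[str], Dict[str, str]]):
        self.lookup = lookup  # stand-in for a search tool call

    def plan(self, goal: str, visible_items: List[str], city: str) -> List[Step]:
        rules = self.lookup(city)  # fetch sorting rules before planning
        steps = []
        for item in visible_items:
            # Assumption for this sketch: unknown items default to landfill.
            bin_name = rules.get(item, "landfill")
            steps.append(Step(
                instruction=f"place the {item} in the {bin_name} bin",
                rationale=f"goal '{goal}': {city} rules map {item} to {bin_name}",
            ))
        return steps


class Executor:
    """Hypothetical stand-in for the action model that moves the robot."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def act(self, step: Step) -> bool:
        # A real action model would emit motor commands; this one only logs.
        self.log.append(step.instruction)
        return True


def fake_lookup(city: str) -> Dict[str, str]:
    # Made-up rules for illustration only, not real municipal guidelines.
    return {"banana peel": "compost", "soda can": "recycling"}


def run(goal: str, items: List[str], city: str) -> List[str]:
    planner = Planner(fake_lookup)
    executor = Executor()
    for step in planner.plan(goal, items, city):
        executor.act(step)
    return executor.log


if __name__ == "__main__":
    for line in run("sort the trash", ["banana peel", "soda can"], "San Francisco"):
        print(line)
```

The only thing that crosses from planner to executor is a plain-language instruction, mirroring the hand-off described above. Keeping a rationale on each step is one way the plan could stay inspectable.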

When a robot sorts laundry, for instance, it internally reasons through the task using a chain of thought: understanding that “sort by color” means whites go in one bin and colors in another, then breaking down the specific motions needed to pick up each piece of clothing. The robot can explain its reasoning in plain English, making its decisions less of a black box.

Google CEO Sundar Pichai chimed in on X, noting that the new models will enable robots to better reason, plan ahead, use digital tools like search, and transfer learning from one kind of robot to another. He called it Google’s “next big step towards general-purpose robots that are truly helpful.”

New Gemini Robotics 1.5 models will enable robots to better reason, plan ahead, use digital tools like Search, and transfer learning from one kind of robot to another. Our next big step towards general-purpose robots that are truly helpful - you can see how the robot reasons as… pic.twitter.com/kw3HtbF6Dd

— Sundar Pichai (@sundarpichai) September 25, 2025

The release puts Google in a spotlight shared with developers like Tesla, Figure AI and Boston Dynamics, though each company is taking a different approach. Tesla focuses on mass production for its factories, with Elon Musk promising thousands of units by 2026. Boston Dynamics continues pushing the boundaries of robotic athleticism with its backflipping Atlas. Google, meanwhile, bets on AI that makes robots adaptable to any situation without specific programming.

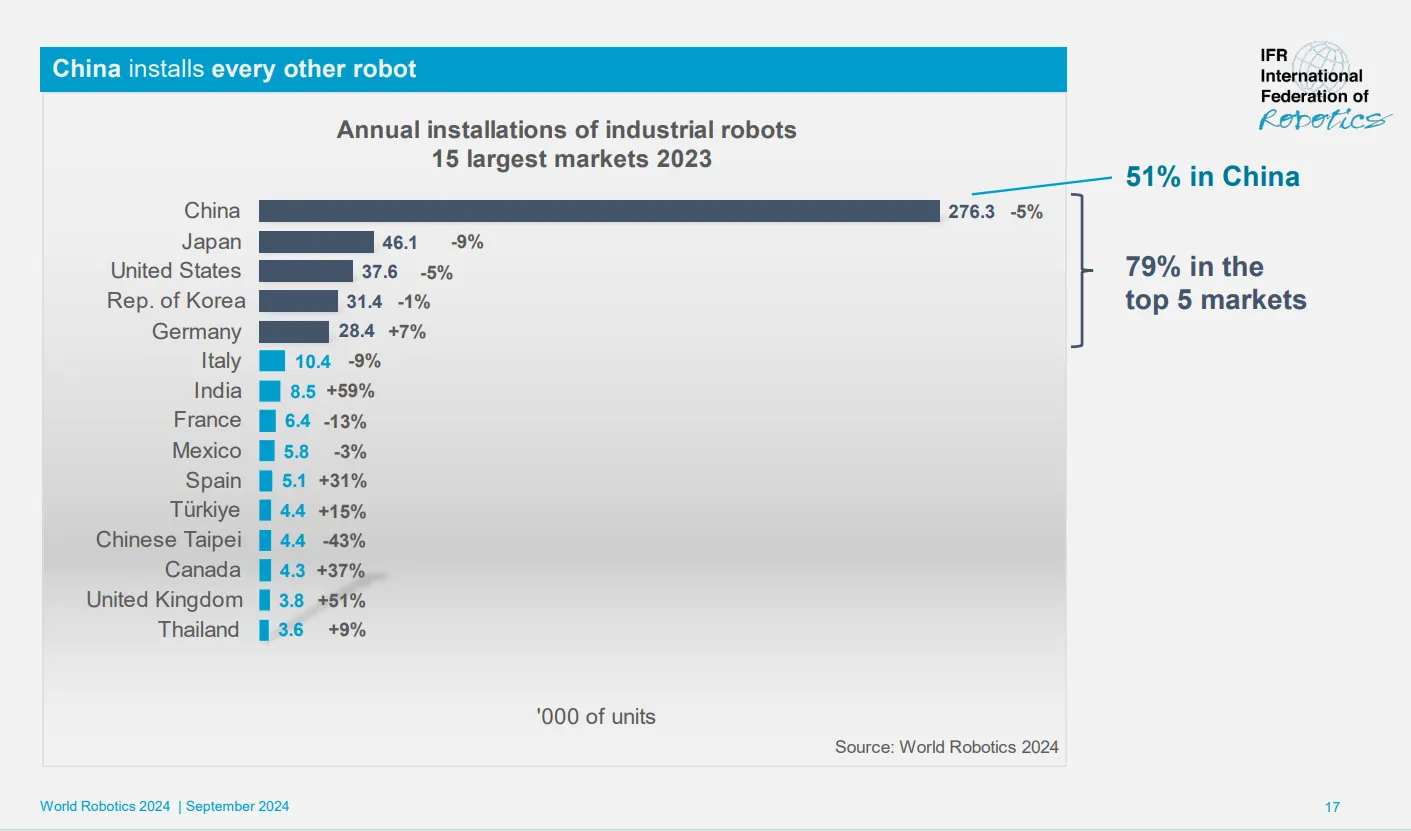

The timing matters. American robotics companies are pushing for a national robotics strategy, including establishing a federal office focused on promoting the industry at a time when China is making AI and intelligent robots a national priority. China is the world’s largest market for robots that work in factories and other industrial environments, with about 1.8 million robots operating in 2023, according to the Germany-based International Federation of Robotics.

DeepMind’s approach differs from traditional robotics programming, where engineers meticulously code every movement. Instead, these models learn from demonstration and can adapt on the fly. If an object slips from a robot’s grasp or someone moves something mid-task, the robot adjusts without missing a beat.

The models build on DeepMind’s earlier work from March, when robots could only handle single tasks like unzipping a bag or folding paper. Now they’re tackling sequences that would challenge many humans, like packing appropriately for a trip after checking the weather forecast.

For developers wanting to experiment, there’s a split approach to availability. Gemini Robotics-ER 1.5 launched Thursday through the Gemini API in Google AI Studio, meaning any developer can start building with the reasoning model. The action model, Gemini Robotics 1.5, remains exclusive to “select” (meaning “rich,” probably) partners.
