Briefly

- Veo 3.1 introduces full-scene audio, dialogue, and ambient sound technology.

- The launch follows Sora 2’s fast rise to 1 million downloads inside 5 days.

- Google positions Veo as a professional-grade various within the crowded AI video market.

Google launched Veo 3.1 at the moment, an up to date model of its AI video generator that provides audio throughout all options and introduces new enhancing capabilities designed to offer creators extra management over their clips.

The announcement comes as OpenAI’s competing Sora 2 app climbs app retailer charts and sparks debates about AI-generated content material flooding social media.

The timing suggests Google needs to place Veo 3.1 because the skilled various to Sora 2’s viral social feed method. OpenAI launched Sora 2 on September 30 with a TikTok-style interface that prioritizes sharing and remixing.

The app hit 1 million downloads inside 5 days and reached the highest spot in Apple’s App Retailer. Meta took the same method, with its personal kind of digital social media powered by AI movies.

Customers can now create movies with synchronized ambient noise, dialogue, and Foley results utilizing “Elements to Video,” a instrument that mixes a number of reference photos right into a single scene.

The “Frames to Video” function generates transitions between a beginning and ending picture, whereas “Lengthen” creates clips lasting as much as a minute by persevering with the movement from the ultimate second of an current video.

New enhancing instruments let customers add or take away parts from generated scenes with computerized shadow and lighting changes. The mannequin generates movies in 1080p decision at horizontal or vertical facet ratios.

The mannequin is on the market by Move for client use, the Gemini API for builders, and Vertex AI for enterprise clients. Movies lasting as much as a minute may be created utilizing the “Lengthen” function, which continues movement from the ultimate second of an current clip.

The AI video technology market has develop into crowded in 2025, with Runway’s Gen-4 mannequin focusing on filmmakers, Luma Labs providing quick technology for social media, Adobe integrating Firefly Video into Inventive Cloud, and updates from xAI, Kling, Meta, and Google focusing on realism, sound technology, and immediate adherence.

However how good is it? We examined the mannequin, and these are our impressions.

Testing the mannequin

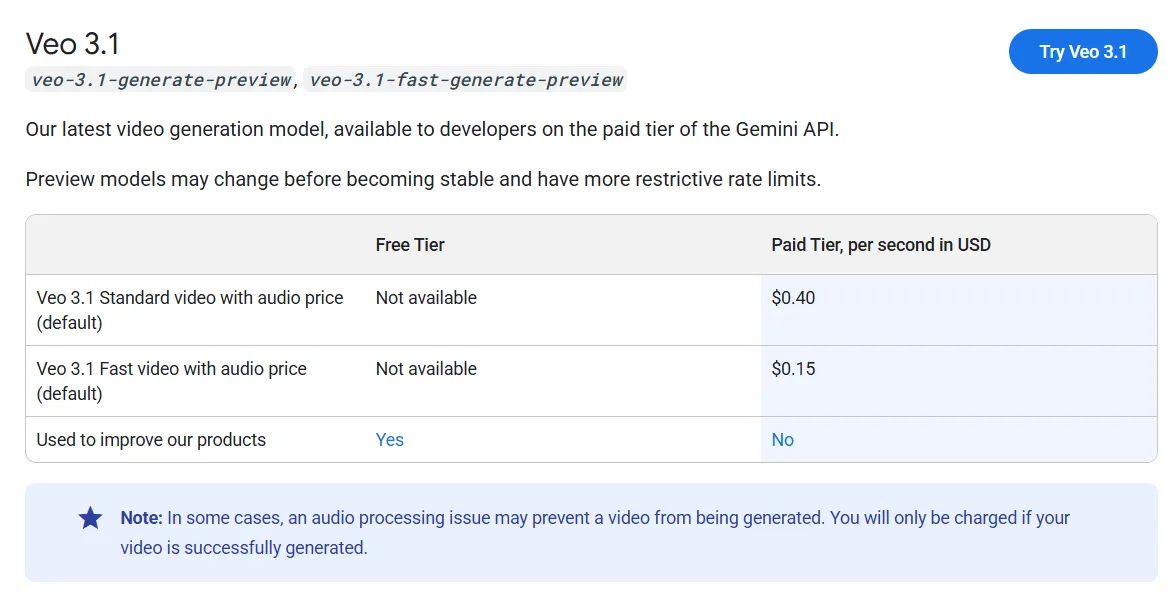

If you wish to strive it, you’d higher have some deep pockets. Veo 3.1 is at the moment the most costly video technology mannequin, on par with Sora 2 and solely behind Sora 2 Professional, which prices greater than twice as a lot per technology.

Free customers obtain 100 month-to-month credit to check the system, which is sufficient to generate round 5 movies monthly. Via the Gemini API, Veo 3.1 prices roughly $0.40 per second of generated video with audio, whereas a quicker variant referred to as Veo 3.1 Quick prices $0.15 per second.

For these prepared to make use of it at that worth, listed here are its strengths and weaknesses.

Textual content to Video

Veo 3.1 is a particular enchancment over its predecessor. The mannequin handles coherence effectively and demonstrates a greater understanding of contextual environments.

It really works throughout completely different types, from photorealism to stylized content material.

We requested the mannequin to mix a scene that began as a drawing and transitioned into live-action footage. It dealt with the duty higher than every other mannequin we examined.

With none reference body, Veo 3.1 produced higher leads to text-to-video mode than it did utilizing the identical immediate with an preliminary picture, which was shocking.

The tradeoff is motion pace. Veo 3.1 prioritizes coherence over fluidity, making it difficult to generate fast-paced motion.

Parts transfer extra slowly however keep consistency all through the clip. Kling nonetheless leads in fast motion, though it requires extra makes an attempt to realize usable outcomes.

Picture to Video

Veo constructed its status on image-to-video technology, and the outcomes nonetheless ship—with caveats. This seems to be a weaker space within the replace. When utilizing completely different facet ratios as beginning frames, the mannequin struggled to keep up the coherence ranges it as soon as had.

If the immediate strays too removed from what would logically observe the enter picture, Veo 3.1 finds a strategy to cheat. It generates incoherent scenes or clips that leap between areas, setups, or fully completely different parts.

This wastes time and credit, since these clips cannot be edited into longer sequences as a result of they do not match the format.

When it really works, the outcomes look unbelievable. Getting there’s half ability, half luck—largely luck.

Parts to Video

This function works like inpainting for video, letting customers insert or delete parts from a scene. Do not count on it to keep up good coherence or use your precise reference photos, although.

For instance, the video beneath was generated utilizing these three references and the immediate: a person and a girl come upon one another whereas working in a futuristic metropolis, the place a Bitcoin signal hologram is rotating. The person tells the lady, “QUICK, BITCOIN CRASHED! WE MUST BUY MORE!!

As you possibly can see, neither the town nor the characters are literally there. Nonetheless, characters are carrying the garments of reference, the town resembles the one within the within the picture, and issues painting the concept of the weather, not the weather themselves.

Veo 3.1 treats uploaded parts as inspiration somewhat than strict templates. It generates scenes that observe the immediate and embrace objects that resemble what you supplied, however do not waste time attempting to insert your self right into a film—it will not work.

A workaround: use Nanobanana or Seedream to add parts and generate a coherent beginning body first. Then feed that picture to Veo 3.1, which is able to produce a video the place characters and objects present minimal deformation all through the scene.

Textual content to Video with Dialogue

That is Google’s promoting level. Veo 3.1 handles lip sync higher than every other mannequin at the moment accessible. In text-to-video mode, it generates coherent ambient sound that matches scene parts.

The dialogue, intonation, voices, and feelings are correct and beat competing fashions.

Different mills can produce ambient noise, however solely Sora, Veo, and Grok can generate precise phrases.

Of these three, Veo 3.1 requires the fewest makes an attempt to get good leads to text-to-video mode.

Picture to Video with Dialogue

That is the place issues disintegrate. Picture-to-video with dialogue suffers from the identical points as customary image-to-video technology. Veo 3.1 prioritizes coherence so closely that it ignores immediate adherence and reference photos.

For instance, this scene was generated utilizing the reference proven within the parts to video part.

As you possibly can see, our take a look at generated a totally completely different topic than the reference picture. The video high quality was glorious—intonation and gestures have been spot-on—but it surely wasn’t the particular person we uploaded, making the outcome ineffective.

Sora’s remix function is your best option for this use case. The mannequin could also be censored, however its image-to-video capabilities, real looking lip sync, and concentrate on tone, accent, emotion, and realism make it the clear winner.

Grok’s video generator is available in second. It revered the reference picture higher than Veo 3.1 and produced superior outcomes. Right here is one technology utilizing the identical reference picture and immediate.

If you happen to do not wish to take care of Sora’s social app or lack entry to it, Grok is perhaps your best choice. It is also uncensored however moderated, so when you want that specific method, Musk has you coated.

Usually Clever E-newsletter

A weekly AI journey narrated by Gen, a generative AI mannequin.