In brief

- You can give local AI models web access using free Model Context Protocol (MCP) servers: no corporate APIs, no data leaks, no fees.

- Setup is simple: Install LM Studio, add Brave, Tavily, or DuckDuckGo MCP configs, and your local model becomes a private SearchGPT.

- The result: real-time browsing, article analysis, and data fetching, without ever touching OpenAI, Anthropic, or Google's clouds.

So you want an AI that can browse the web, and you think the only options come from OpenAI, Anthropic, or Google?

Think again.

Your data doesn't have to travel through corporate servers every time your AI assistant needs timely information. With Model Context Protocol (MCP) servers, you can give even lightweight consumer models the ability to search the web, analyze articles, and access real-time data, all while maintaining full privacy and spending zero dollars.

The catch? There isn't one. These tools offer generous free tiers: Brave Search provides 2,000 queries monthly, Tavily offers 1,000 credits, and some options require no API key at all. For most users, that's enough runway to never hit a limit. You don't make 1,000 searches in a single day.

Before getting technical, two concepts need explaining. "Model Context Protocol" is an open standard introduced by Anthropic in November 2024 that lets AI models connect to external tools and data sources. Think of it as a universal adapter for Tinkertoy-like modules that add utility and functionality to your AI model.

Instead of telling your AI exactly what to do (which is what an API call does), you tell the model what you need and it more or less figures out on its own how to achieve that goal. MCP tools aren't as precise as traditional API calls, and you'll likely spend more tokens to make them work, but they're much more flexible.

"Tool calling," sometimes called function calling, is the mechanism that makes this work. It's the ability of an AI model to recognize when it needs external information and invoke the appropriate function to get it. When you ask, "What's the weather in Rio de Janeiro?", a model with tool calling can identify that it needs to call a weather API or MCP server, format the request properly, and integrate the results into its response. Without tool-calling support, your model can only work with what it learned during training.
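Under the hood, MCP messages are JSON-RPC 2.0, and over the local stdio transport each message travels as one line of JSON. The sketch below, using only the Python standard library, builds the two requests a client sends to discover and invoke a tool after the initial `initialize` handshake (omitted here). The method names `tools/list` and `tools/call` come from the MCP specification; the tool name `web_search` and its `query` argument are hypothetical placeholders, not the name any particular server uses.

```python
import json
from typing import Optional


def jsonrpc_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request as a single line of JSON."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)  # json.dumps emits no newlines by default


# 1. Ask the server which tools it offers.
list_tools = jsonrpc_request(1, "tools/list")

# 2. Invoke one tool by name with structured arguments.
#    "web_search" and "query" are hypothetical; real servers publish their own names.
call_tool = jsonrpc_request(
    2,
    "tools/call",
    {"name": "web_search", "arguments": {"query": "latest Ethereum news"}},
)

print(list_tools)
print(call_tool)
```

The model never writes these messages itself: it only chooses a tool and its arguments, and the host application (LM Studio, Claude Desktop, Cursor) wraps that choice into a request like the second one.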
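To make the tool-calling loop concrete, here is a minimal sketch of the host side: the model emits a structured call, and the application looks up the named function, runs it, and hands the result back as text. The `get_weather` tool and its canned readings are hypothetical stand-ins for a real weather API or MCP server.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical tool: canned data in place of a real weather API call."""
    fake_readings = {"Rio de Janeiro": {"temp_c": 28, "sky": "sunny"}}
    return fake_readings.get(city, {"error": f"no data for {city}"})


# Registry of tools the host application advertises to the model.
TOOLS = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Run the tool the model asked for and return the result as JSON text,
    which the host then feeds back into the model's context."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    return json.dumps(func(**call["arguments"]))


# What a tool-calling model might emit for "What's the weather in Rio de Janeiro?"
model_output = '{"name": "get_weather", "arguments": {"city": "Rio de Janeiro"}}'
print(dispatch(model_output))
```

A model without tool-calling support never produces the structured `model_output` line in the first place, which is why the hammer icon in LM Studio matters.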

Here's how to give your local model superpowers.

Technical requirements and setup

The requirements are minimal: You need Node.js installed on your computer, a local AI application that supports MCP (such as LM Studio version 0.3.17 or higher, Claude Desktop, or Cursor IDE), and a model with tool-calling capabilities.

You should also have Python installed.
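A quick way to confirm the prerequisites is to check that the relevant executables are on your PATH. This is a small sketch under a few assumptions: the server configs later in this guide launch `npx` (bundled with Node.js) and `uvx` (bundled with uv), and Python is assumed to be called `python3`, which may be just `python` on Windows.

```python
import shutil

# Executable -> what to install if it is missing.
# Assumption: Python is on PATH as "python3" (often "python" on Windows).
REQUIRED = {"node": "Node.js", "npx": "Node.js", "python3": "Python", "uvx": "uv"}


def missing_tools() -> list[str]:
    """Return a readable entry for every prerequisite not found on PATH."""
    missing = []
    for exe, package in REQUIRED.items():
        if shutil.which(exe) is None:
            missing.append(f"{exe} (install {package})")
    return missing


if __name__ == "__main__":
    problems = missing_tools()
    print("All set." if not problems else "Missing: " + ", ".join(problems))
```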

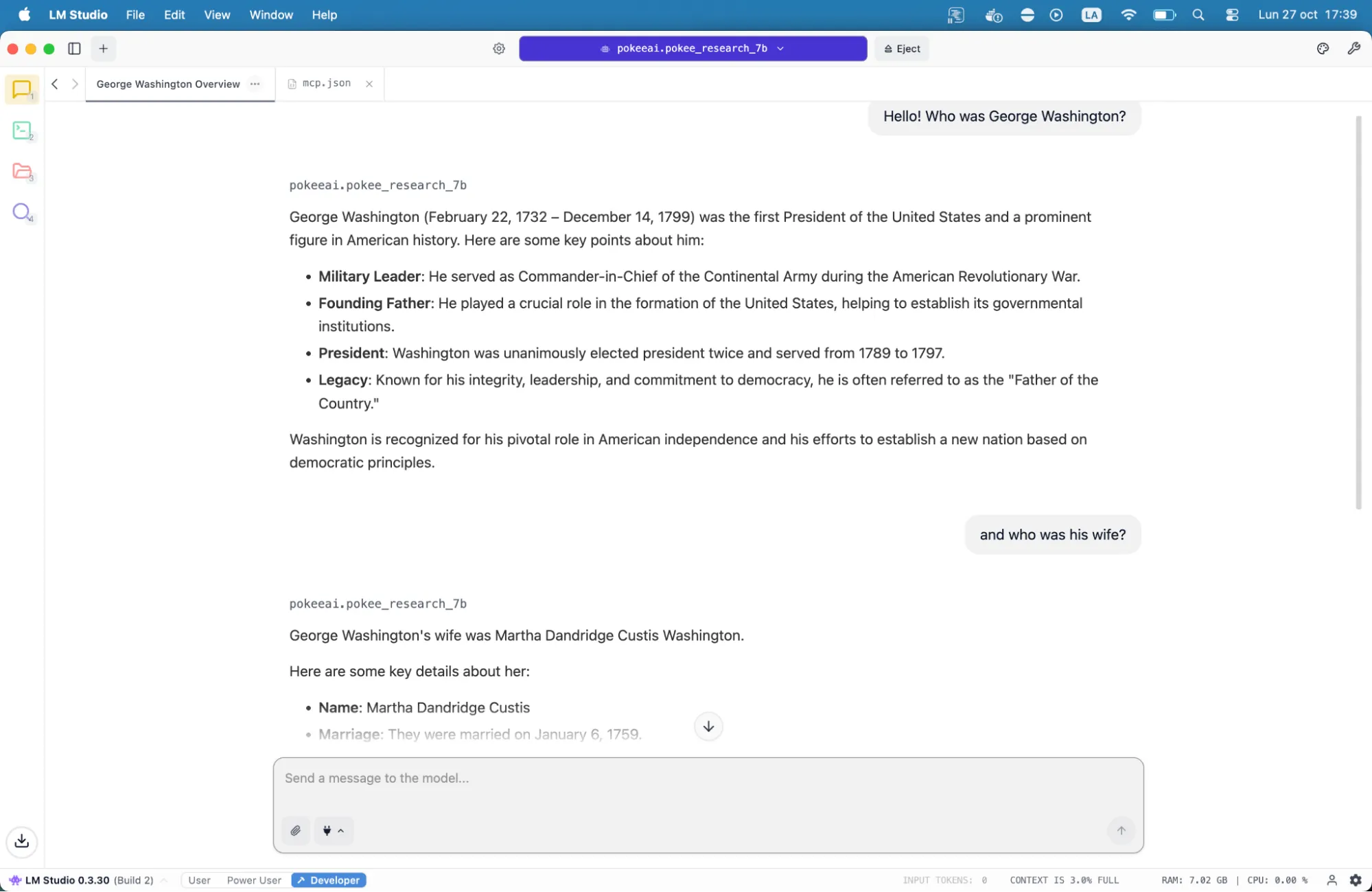

Some good tool-calling models that run on consumer-grade machines are GPT-oss, DeepSeek R1 0528, Jan-v1-4b, Llama-3.2 3b Instruct, and Pokee Research 7B.

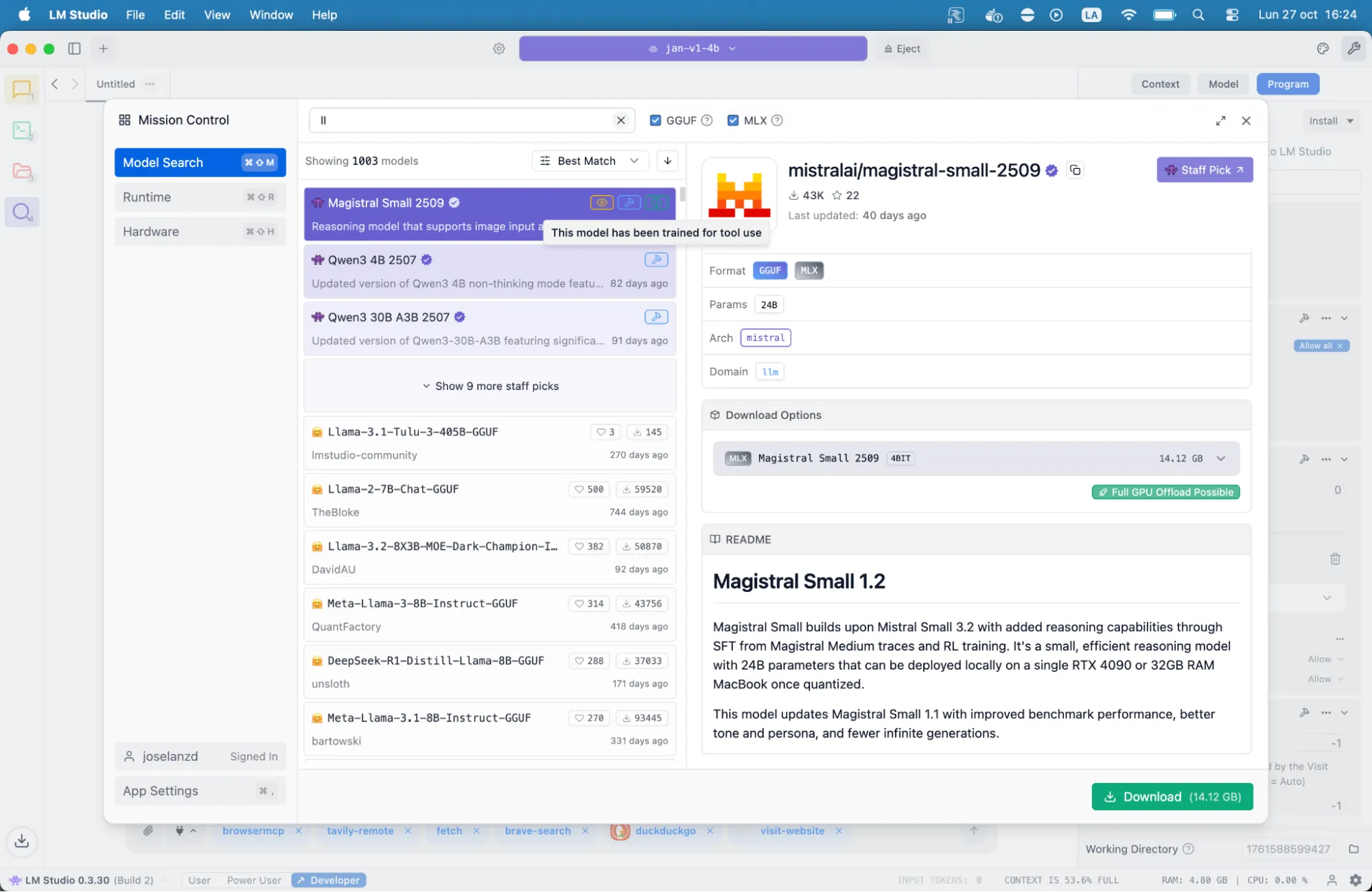

To install a model, click the magnifying glass icon on the left sidebar in LM Studio and search for one. Models that support tools show a hammer icon next to their name. Those are the ones you'll need.

Most modern models above 7 billion parameters support tool calling: Qwen3, DeepSeek R1, Mistral, and similar architectures all work well. The smaller the model, the more explicitly you may have to prompt it to use search tools, but even 4-billion-parameter models can handle basic web access.

Once you have downloaded the model, you need to "load" it so LM Studio knows to use it. You don't want your erotic roleplay model doing the research for your thesis.

Setting up search engines

Configuration happens through a single mcp.json file. Its location depends on your application: LM Studio lets you edit this file from its settings interface, Claude Desktop looks in specific directories, and other applications have their own conventions. Each MCP server entry requires just three elements: a unique name, the command to run it, and any required environment variables, such as API keys.

But you don't really need to know that: Just copy and paste the configuration that developers provide, and it will work. If you don't want to mess with manual edits, at the end of this guide you'll find one configuration, ready to copy and paste, that sets up some of the most important MCP servers at once.

The three best search tools available through MCP bring different strengths. Brave focuses on privacy, Tavily is more versatile, and DuckDuckGo is the easiest to set up.
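If you do edit mcp.json by hand, a quick sanity check can save a confusing failure inside the app. The sketch below checks only the three elements just described: unique names (Python's `json` module silently keeps the last of two duplicate keys, so a hook rejects them), a `command` string, and string-valued `env` entries. It is an illustrative validator, not something LM Studio or Claude Desktop ships.

```python
import json


def _reject_duplicates(pairs):
    """object_pairs_hook: refuse JSON objects that repeat a key."""
    keys = [key for key, _ in pairs]
    dupes = sorted({key for key in keys if keys.count(key) > 1})
    if dupes:
        raise ValueError(f"duplicate keys: {dupes}")
    return dict(pairs)


def validate_mcp_config(text: str) -> list[str]:
    """Return a list of problems found in an mcp.json document (empty if none).
    Raises json.JSONDecodeError on malformed JSON, ValueError on duplicate names."""
    config = json.loads(text, object_pairs_hook=_reject_duplicates)
    servers = config.get("mcpServers")
    if not isinstance(servers, dict):
        return ["top-level 'mcpServers' object is missing"]
    problems = []
    for name, entry in servers.items():
        if not isinstance(entry, dict):
            problems.append(f"{name}: entry must be an object")
            continue
        if not isinstance(entry.get("command"), str) or not entry["command"]:
            problems.append(f"{name}: 'command' is missing")
        if not isinstance(entry.get("args", []), list):
            problems.append(f"{name}: 'args' must be a list")
        env = entry.get("env", {})
        if not isinstance(env, dict) or not all(isinstance(v, str) for v in env.values()):
            problems.append(f"{name}: 'env' values must be strings")
    return problems
```

An empty list means the file at least has the shape these apps expect; whether each server actually starts still depends on the command being installed.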

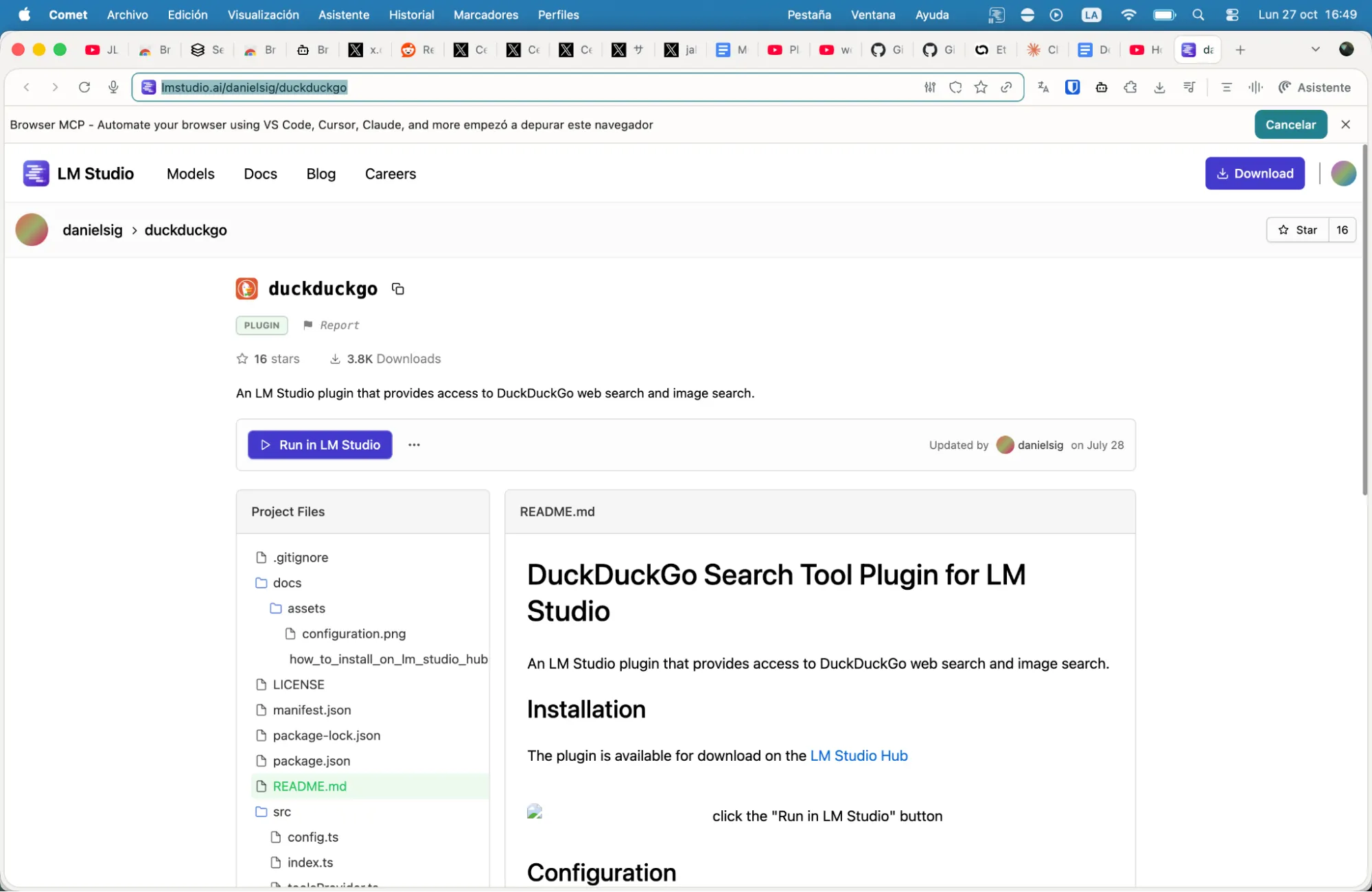

To add DuckDuckGo, simply go to lmstudio.ai/danielsig/duckduckgo and click the button that says "Run in LM Studio."

Then go to lmstudio.ai/danielsig/visit-website and do the same: click "Run in LM Studio."

And that's it. You have just given your model its first superpower. Now you have your own SearchGPT for free: local, private, and powered by DuckDuckGo.

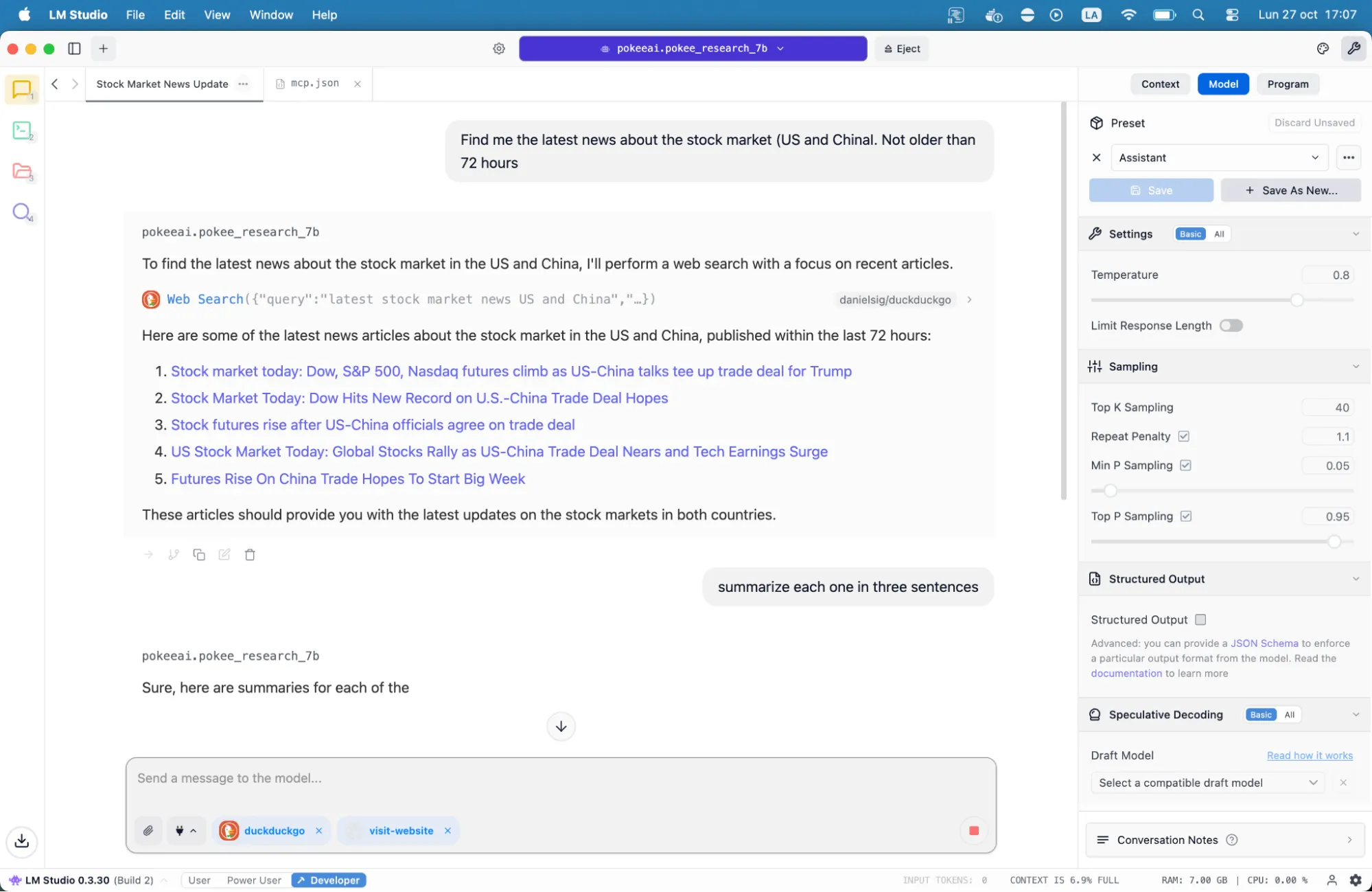

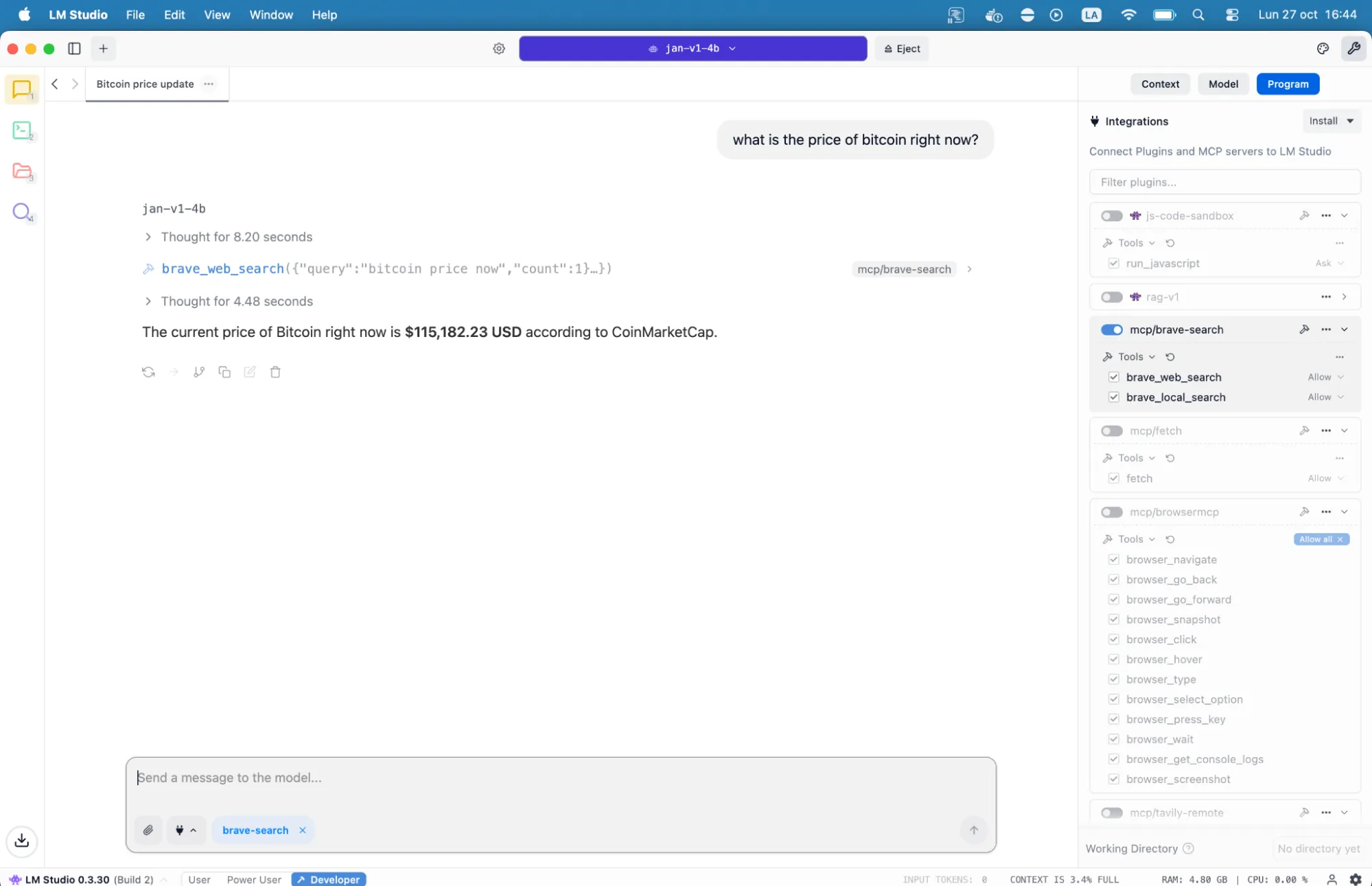

Ask it to find the latest news, the price of Bitcoin, the weather, and so on, and it will give you up-to-date, relevant information.

Brave Search is a bit harder to set up than DuckDuckGo, but it offers a more robust service, running on an independent index of over 30 billion pages and providing 2,000 free queries monthly. Its privacy-first approach means no user profiling or tracking, making it ideal for sensitive research or personal queries.

To configure Brave, sign up at brave.com/search/api to get your API key. It requires payment verification, but there is a free plan, so don't worry.

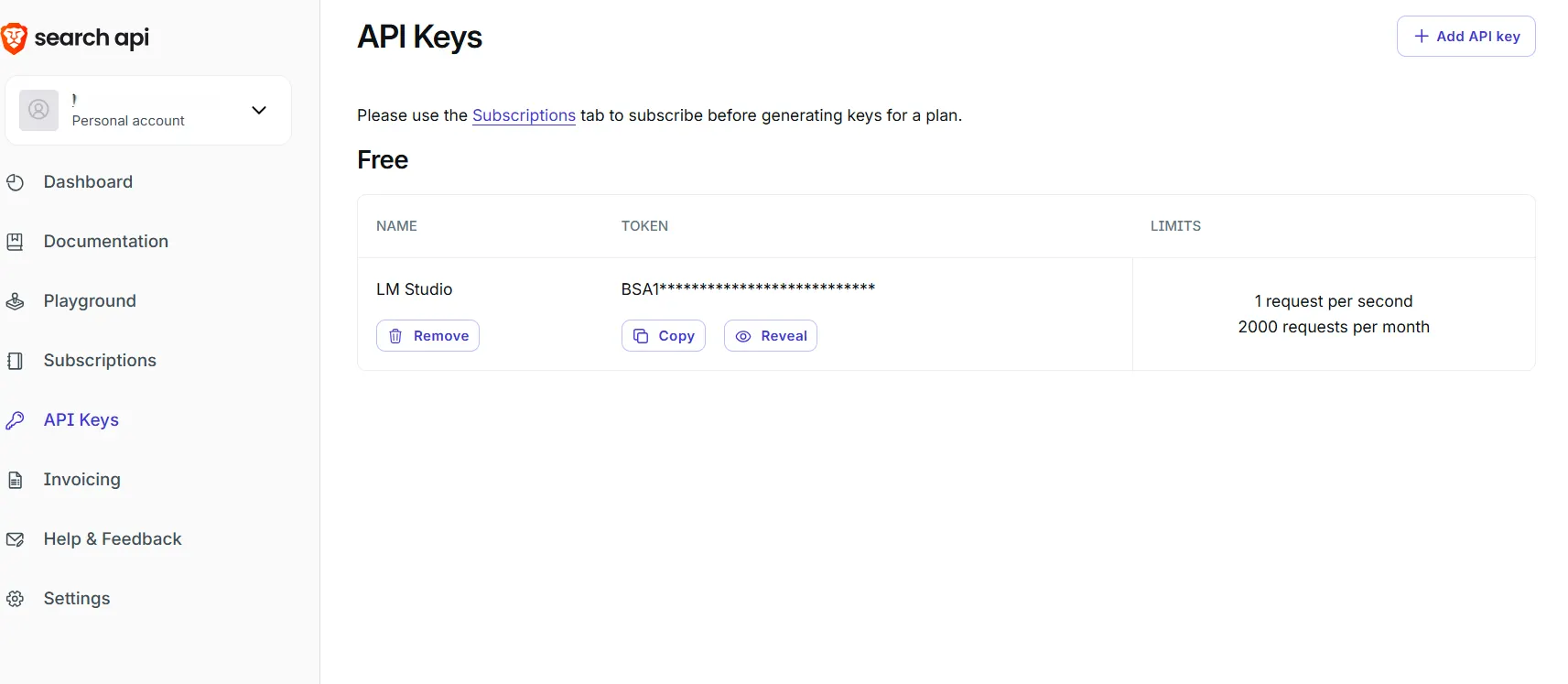

Once there, go to the "API Keys" section, click "Add API Key," and copy the new key. Don't share that key with anyone.

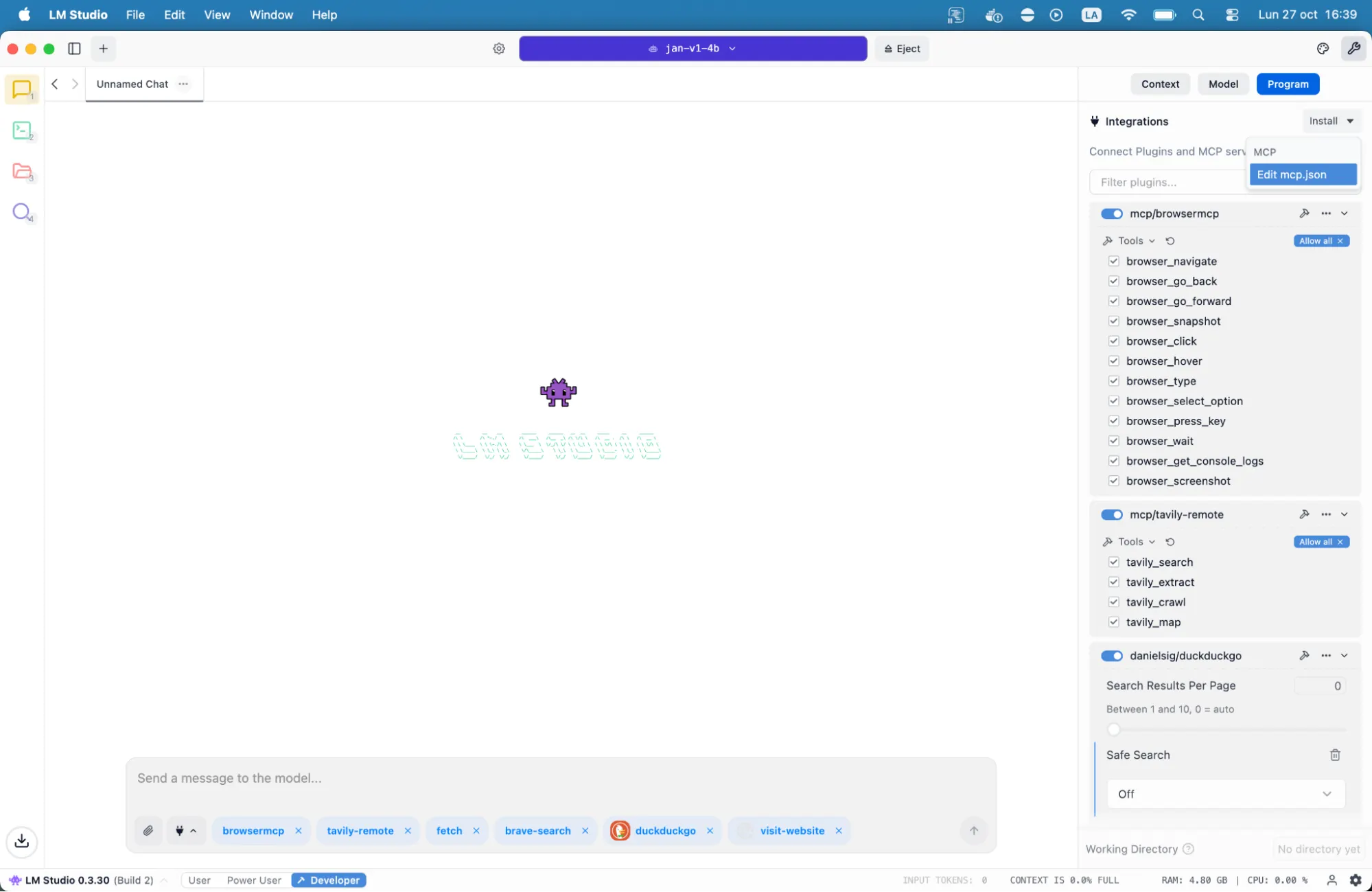

Then go to LM Studio, click the little wrench icon in the top-right corner, open the "Program" tab, then click "Install" and choose "Edit mcp.json."

Once there, paste the following text into the field that appears. Remember to put the secret API key you just created inside the quotation marks where it says "your_brave_api_key_here":

```json
{
  "mcpServers": {
    "brave-search": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-brave-search"],
      "env": {
        "BRAVE_API_KEY": "your_brave_api_key_here"
      }
    }
  }
}
```
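One pitfall: if your mcp.json already lists other servers, pasting a second complete `{"mcpServers": ...}` object into the same file produces invalid JSON. The new entry has to go inside the existing `mcpServers` object. This minimal sketch does that merge in Python; the `add_server` helper is hypothetical, and the key value is still the placeholder you must replace.

```python
import copy
import json


def add_server(config: dict, name: str, entry: dict) -> dict:
    """Return a copy of config with entry added under mcpServers[name],
    keeping every server that is already there."""
    merged = copy.deepcopy(config)  # leave the caller's dict untouched
    servers = merged.setdefault("mcpServers", {})
    if name in servers:
        raise ValueError(f"a server named {name!r} already exists")
    servers[name] = entry
    return merged


existing = {"mcpServers": {"fetch": {"command": "uvx", "args": ["mcp-server-fetch"]}}}
brave_entry = {
    "command": "npx",
    "args": ["-y", "@modelcontextprotocol/server-brave-search"],
    "env": {"BRAVE_API_KEY": "your_brave_api_key_here"},  # replace with your real key
}
updated = add_server(existing, "brave-search", brave_entry)
print(json.dumps(updated, indent=2))
```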

That's all. Now your local AI can browse the web using Brave. Ask it anything and it will give you the most up-to-date information it can find.

A journalist researching breaking news needs current information from multiple sources. Brave's independent index means results aren't filtered through other search engines, offering a different perspective on controversial topics.

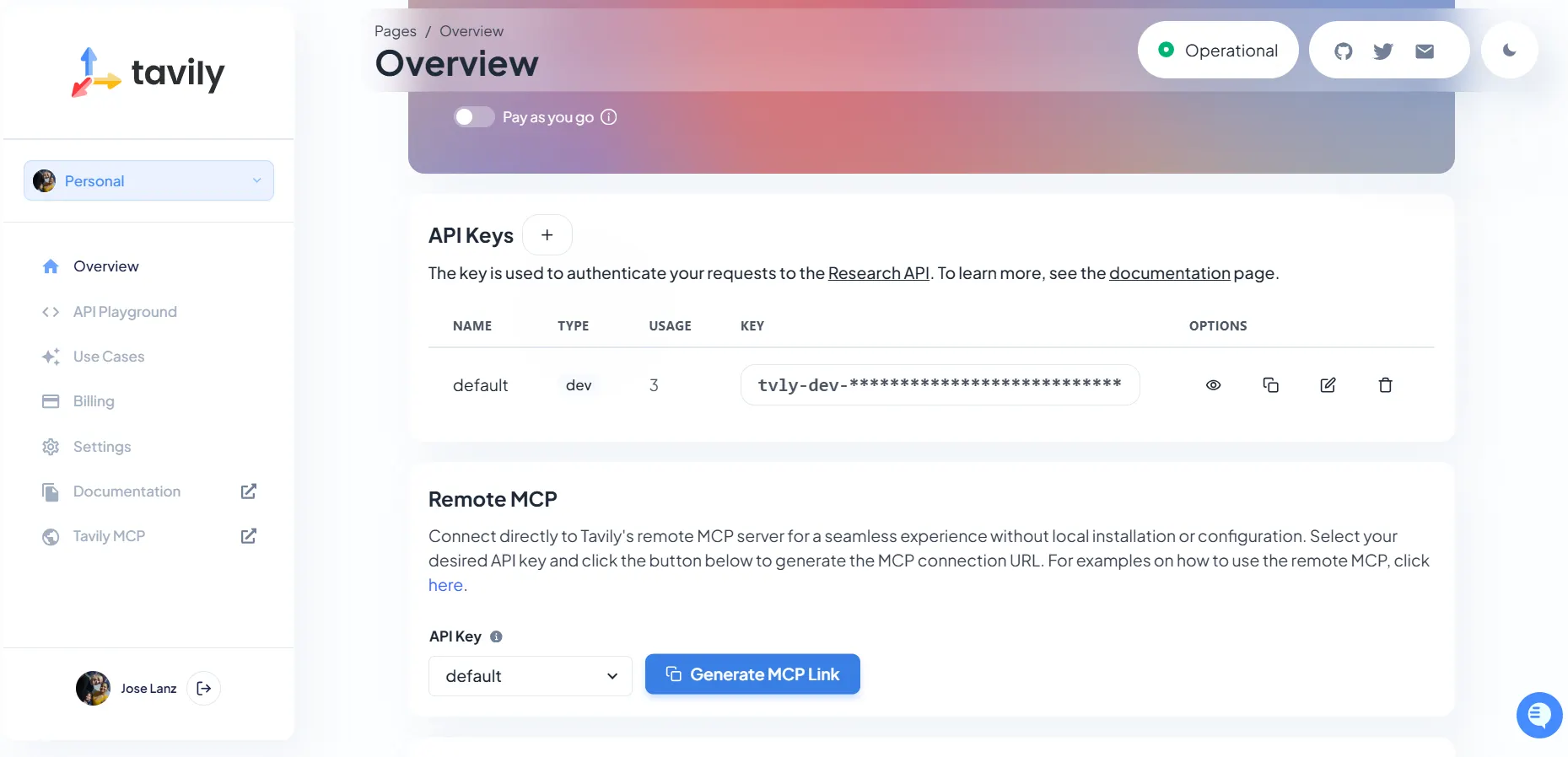

Tavily is another useful tool for web browsing. It gives you 1,000 credits per month and specialized search capabilities for news, code, and images. It's also very easy to set up: create an account at app.tavily.com, generate your MCP link from the dashboard, and you're ready.

Then, copy and paste the following configuration into LM Studio, just as you did with Brave. The configuration looks like this:

```json
{
  "mcpServers": {
    "tavily-remote": {
      "command": "npx",
      "args": ["-y", "mcp-remote", "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR_API_KEY_HERE"]
    }
  }
}
```
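Unlike Brave, the Tavily key travels inside the URL as the `tavilyApiKey` query parameter. If you generate that string yourself rather than copying it from the dashboard, percent-encoding the key avoids a broken URL. A small sketch, where the endpoint and parameter name are taken from the configuration above and the example key is made up:

```python
from urllib.parse import parse_qs, urlencode, urlsplit

TAVILY_MCP_ENDPOINT = "https://mcp.tavily.com/mcp/"  # from the config above


def tavily_mcp_url(api_key: str) -> str:
    """Build the remote MCP URL, percent-encoding the key as a query parameter."""
    return TAVILY_MCP_ENDPOINT + "?" + urlencode({"tavilyApiKey": api_key})


def tavily_entry(api_key: str) -> dict:
    """mcp.json entry that bridges the remote server through mcp-remote."""
    return {"command": "npx", "args": ["-y", "mcp-remote", tavily_mcp_url(api_key)]}


if __name__ == "__main__":
    url = tavily_mcp_url("tvly-EXAMPLE")  # hypothetical key
    print(url)
    print(parse_qs(urlsplit(url).query))
```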

Use case: A developer debugging an error message can ask their AI assistant to search for solutions, with Tavily's code-focused search returning Stack Overflow discussions and GitHub issues automatically formatted for easy analysis.

Reading and interacting with websites

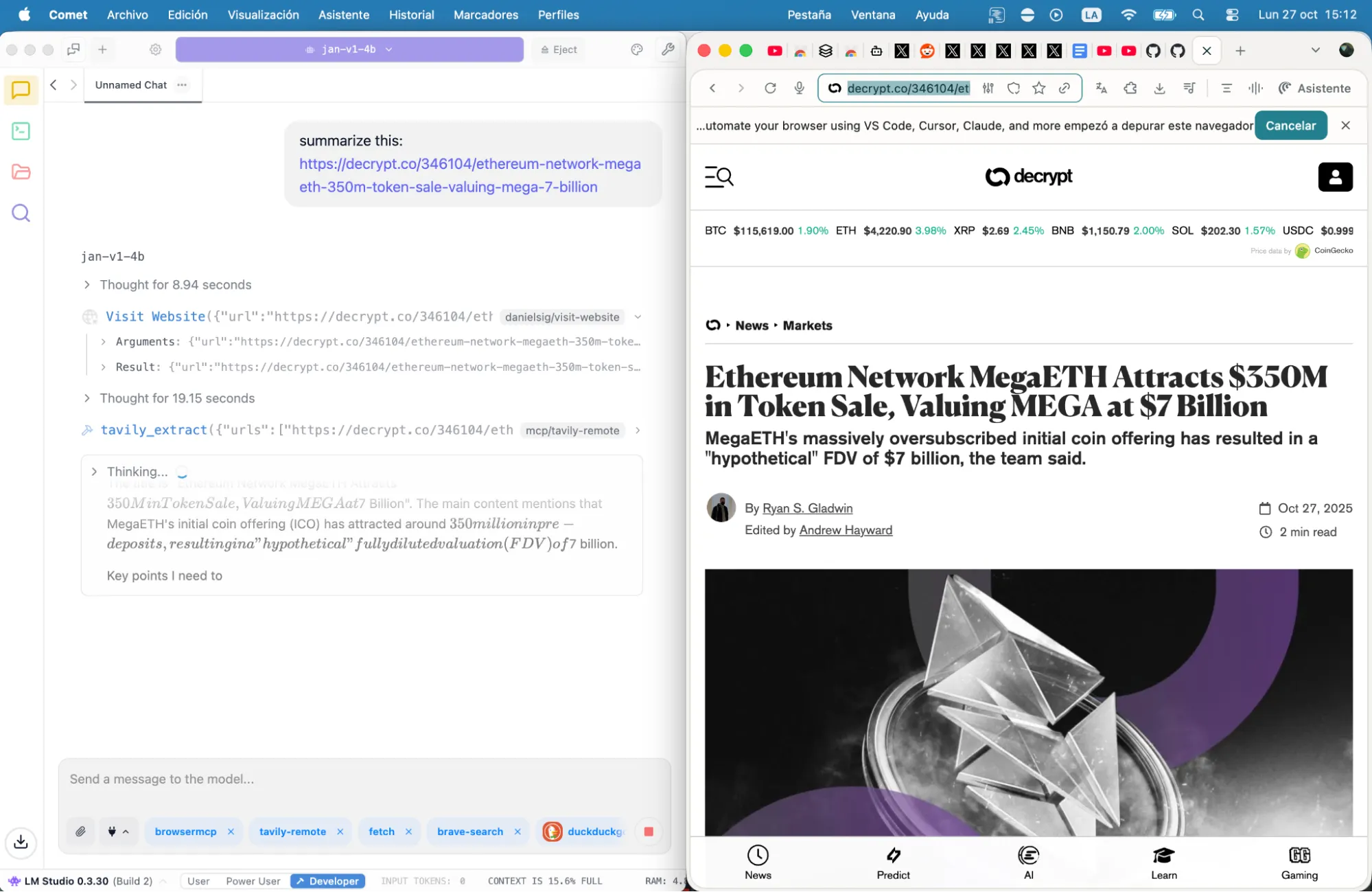

Beyond search, MCP Fetch handles a different problem: reading full articles. Search engines return snippets, but MCP Fetch retrieves the full webpage content and converts it to markdown optimized for AI processing. This means your model can analyze entire articles, extract key points, or answer detailed questions about specific pages.

Simply copy and paste this configuration. No need to create API keys or anything like that:

```json
{
  "mcpServers": {
    "fetch": {
      "command": "uvx",
      "args": ["mcp-server-fetch"]
    }
  }
}
```

You need to install uv, the Python package manager that provides the uvx command, to run this one. Just follow this guide and you'll be done in a minute or two.

This is great for summarization, analysis, iteration, and even mentorship. A researcher could feed it a technical paper URL and ask, "Summarize the methodology section and identify potential weaknesses in their approach." The model fetches the full text, processes it, and provides a detailed analysis that's impossible with search snippets alone.

Want something simpler? This command is now perfectly understandable even by your dumbest local AI.

"Summarize this in three paragraphs and tell me why it's so important: https://decrypt.co/346104/ethereum-network-megaeth-350m-token-sale-valuing-mega-7-billion"
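Why convert pages to markdown at all? Raw HTML wastes a small model's context on scripts, styling, and tags. The toy converter below illustrates the idea with the standard library's `html.parser`: it keeps headings, paragraphs, and list items and drops scripts, styles, navigation, and footers. It is only a sketch of the concept; mcp-server-fetch does its own extraction and conversion, and this is not its code.

```python
from html.parser import HTMLParser


class ToyMarkdown(HTMLParser):
    """Toy HTML-to-markdown converter (illustration only)."""

    BLOCKS = {"h1": "# ", "h2": "## ", "h3": "### ", "p": "", "li": "- "}
    SKIP = {"script", "style", "nav", "footer"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes &amp; and friends
        self.lines: list[str] = []
        self.buffer: list[str] = []
        self.prefix = ""
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag in self.BLOCKS:
            self.buffer = []  # drop stray text that sat outside block elements
            self.prefix = self.BLOCKS[tag]

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in self.BLOCKS:
            text = " ".join("".join(self.buffer).split())  # collapse whitespace
            if text:
                self.lines.append(self.prefix + text)
            self.buffer, self.prefix = [], ""

    def handle_data(self, data):
        if not self.skip_depth:
            self.buffer.append(data)


def html_to_markdown(html: str) -> str:
    parser = ToyMarkdown()
    parser.feed(html)
    parser.close()
    return "\n\n".join(parser.lines)
```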

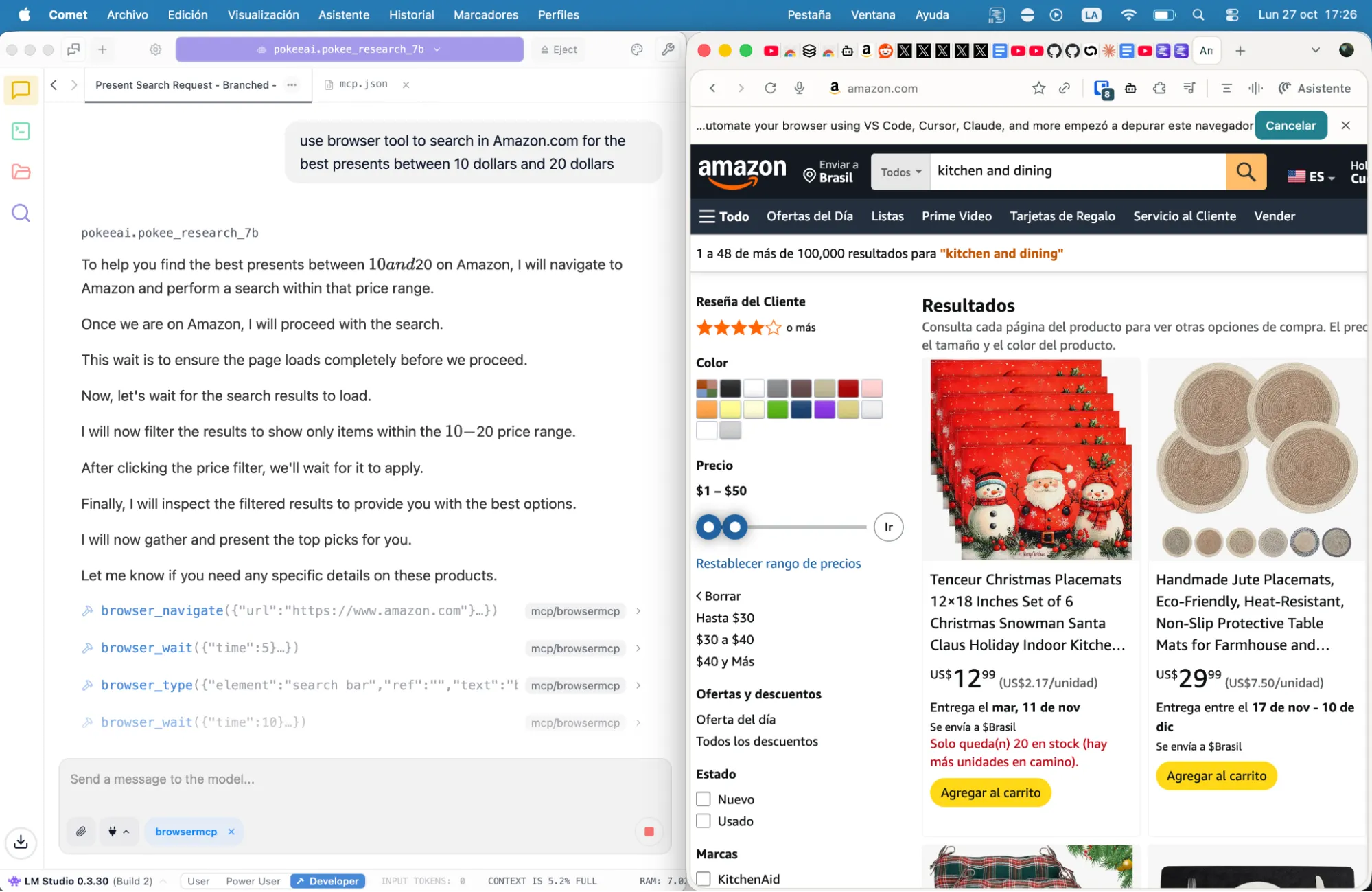

There are many other MCP tools to explore, each giving your models different capabilities. For example, MCP Browser or Playwright enable interaction with any website: form filling, navigation, even JavaScript-heavy applications that static scrapers can't handle. There are also servers for SEO audits, for learning with Anki flashcards, and for enhancing your coding capabilities.

The complete configuration

If you don't want to configure your LM Studio mcp.json manually, here's a complete file integrating all these services.

Copy it, add your API keys where indicated, drop it in your configuration directory, and restart your AI application. Just remember to install the right dependencies (Node.js for the npx entries, uv for the uvx entry):

```json
{
  "mcpServers": {
    "fetch": {
      "command": "uvx",
      "args": ["mcp-server-fetch"]
    },
    "brave-search": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-brave-search"],
      "env": {
        "BRAVE_API_KEY": "YOUR API KEY HERE"
      }
    },
    "browsermcp": {
      "command": "npx",
      "args": ["@browsermcp/mcp@latest"]
    },
    "tavily-remote": {
      "command": "npx",
      "args": [
        "-y",
        "mcp-remote",
        "https://mcp.tavily.com/mcp/?tavilyApiKey=YOUR API KEY HERE"
      ]
    }
  }
}
```
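Before restarting your app, it's worth confirming that no placeholder key survived the copy and paste, since a server started with a placeholder will simply fail to authenticate. This sketch walks the parsed file and reports every value that still looks like one of the placeholders used in this guide ("YOUR API KEY HERE", "YOUR_API_KEY_HERE", "your_brave_api_key_here"); the pattern and the script name are assumptions for illustration.

```python
import json
import re
import sys

# Case-insensitive match for the placeholder styles used in this guide.
PLACEHOLDER = re.compile(r"api[ _]key[ _]here", re.IGNORECASE)


def find_placeholders(node, path="$"):
    """Walk parsed JSON and yield the path of every string value that
    still contains an API-key placeholder."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_placeholders(value, f"{path}.{key}")
    elif isinstance(node, list):
        for i, value in enumerate(node):
            yield from find_placeholders(value, f"{path}[{i}]")
    elif isinstance(node, str) and PLACEHOLDER.search(node):
        yield path


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical script name): python check_mcp.py path/to/mcp.json
    with open(sys.argv[1], encoding="utf-8") as f:
        config = json.load(f)
    leftovers = list(find_placeholders(config))
    print("Ready." if not leftovers else "Fill in: " + ", ".join(leftovers))
```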

This configuration gives you access to Fetch, Brave, Tavily, and MCP Browser: no coding required, no complex setup procedures, no subscription fees, and no data handed to big corporations. Just working web access for your local models.

You're welcome.
