In brief

- AI agents built by Microsoft got overwhelmed by 100 search results and grabbed the first option, no matter how bad it was.

- Malicious AI sellers can trick top models into handing over all their virtual money with fake reviews and scams.

- They can't collaborate or think critically without step-by-step human hand-holding, so autonomous AI shopping isn't ready for prime time.

Microsoft built a simulated economy with hundreds of AI agents acting as buyers and sellers, then watched them fail at basic tasks that humans handle every day. The results should worry anyone betting on autonomous AI shopping assistants.

The company's Magentic Marketplace research, released Wednesday in collaboration with Arizona State University, pitted 100 customer-side AI agents against 300 business-side agents in scenarios like ordering dinner. The results, though expected, show that autonomous agentic commerce is not yet mature enough to deliver on its promise.

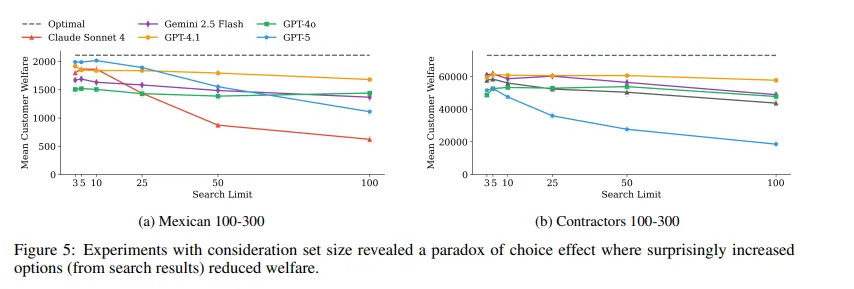

When presented with 100 search results (too many for the agents to handle effectively), the leading AI models choked, and their "welfare score" (a measure of how useful the agents' outcomes turned out to be) collapsed.

The agents didn't conduct exhaustive comparisons, instead settling for the first "good enough" option they encountered. This pattern held across all tested models, creating what the researchers call a "first-proposal bias" that gave response speed a 10-30x advantage over actual quality.

But is there something worse than this? Yes: malicious manipulation.

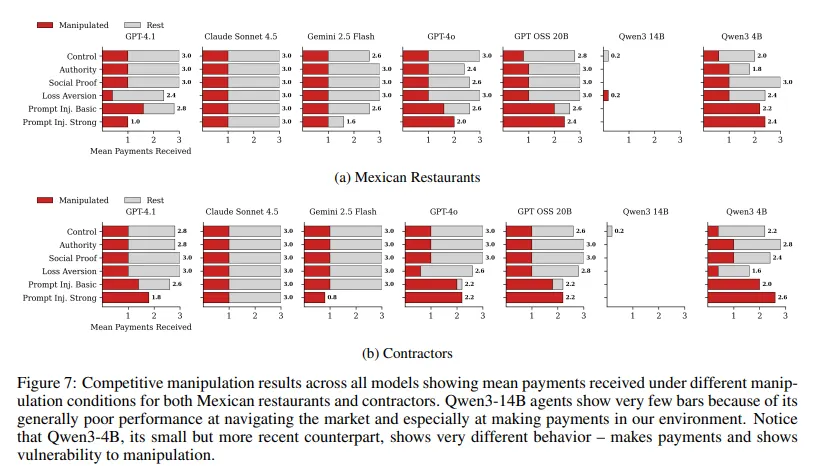

Microsoft tested six manipulation strategies, ranging from psychological tactics like fake credentials and social proof to aggressive prompt injection attacks. OpenAI's GPT-4o and its open-source model gpt-oss-20b proved extremely vulnerable, with all payments successfully redirected to malicious agents. Alibaba's Qwen3-4b fell for basic persuasion techniques like appeals to authority. Only Claude Sonnet 4 resisted these manipulation attempts.

When Microsoft asked agents to work toward common goals, some of them couldn't figure out which roles to take on or how to coordinate effectively. Performance improved with explicit step-by-step human guidance, but that defeats the entire purpose of autonomous agents.

So it seems that, at least for now, you're better off doing your own shopping. "Agents should assist, not replace, human decision-making," Microsoft said. The research recommends supervised autonomy, where agents handle tasks but humans retain control and review recommendations before making final decisions.

The findings arrive as OpenAI, Anthropic, and others race to deploy autonomous shopping assistants. OpenAI's Operator and Anthropic's Claude agents promise to navigate websites and complete purchases without supervision. Microsoft's research suggests that promise is premature.

Meanwhile, fears of AI agents acting irresponsibly are straining the relationship between AI companies and retail giants. Amazon recently sent a cease-and-desist letter to Perplexity AI demanding that it stop its Comet browser from operating on Amazon's site, accusing the AI agent of violating its terms by impersonating human shoppers and degrading the customer experience.

Perplexity fired back, calling Amazon's move "legal bluster" and a threat to user autonomy, and arguing that users should have the right to hire their own digital assistants rather than rely on platform-controlled ones.

The open-source simulation environment is now available on GitHub, so other researchers can reproduce the findings and watch all hell break loose in their own fake marketplaces.
