In brief

- DeepSeek V4 could drop within weeks, targeting elite-level coding performance.

- Insiders claim it could beat Claude and ChatGPT on long-context code tasks.

- Developers are already hyped ahead of a potential disruption.

DeepSeek is reportedly planning to drop its V4 model around mid-February, and if internal tests are any indication, Silicon Valley’s AI giants should be nervous.

The Hangzhou-based AI startup could be targeting a release around February 17 (Lunar New Year, naturally) with a model specifically engineered for coding tasks, according to The Information. People with direct knowledge of the project claim V4 outperforms both Anthropic’s Claude and OpenAI’s GPT series in internal benchmarks, particularly when handling extremely long code prompts.

Of course, no benchmark or information about the model has been publicly shared, so it’s impossible to directly verify such claims. DeepSeek hasn’t confirmed the rumors either.

Still, the developer community isn’t waiting for official word. Reddit’s r/DeepSeek and r/LocalLLaMA are already heating up, users are stockpiling API credits, and enthusiasts on X have been quick to share their predictions that V4 could cement DeepSeek’s position as the scrappy underdog that refuses to play by Silicon Valley’s billion-dollar rules.

Anthropic blocked Claude subs in third-party apps like OpenCode, and reportedly cut off xAI and OpenAI access.

Claude and Claude Code are great, but not 10x better yet. This will only push other labs to move faster on their coding models/agents.

DeepSeek V4 is rumored to drop…

— Yuchen Jin (@Yuchenj_UW) January 9, 2026

This wouldn’t be DeepSeek’s first disruption. When the company released its R1 reasoning model in January 2025, it triggered a $1 trillion sell-off in global markets.

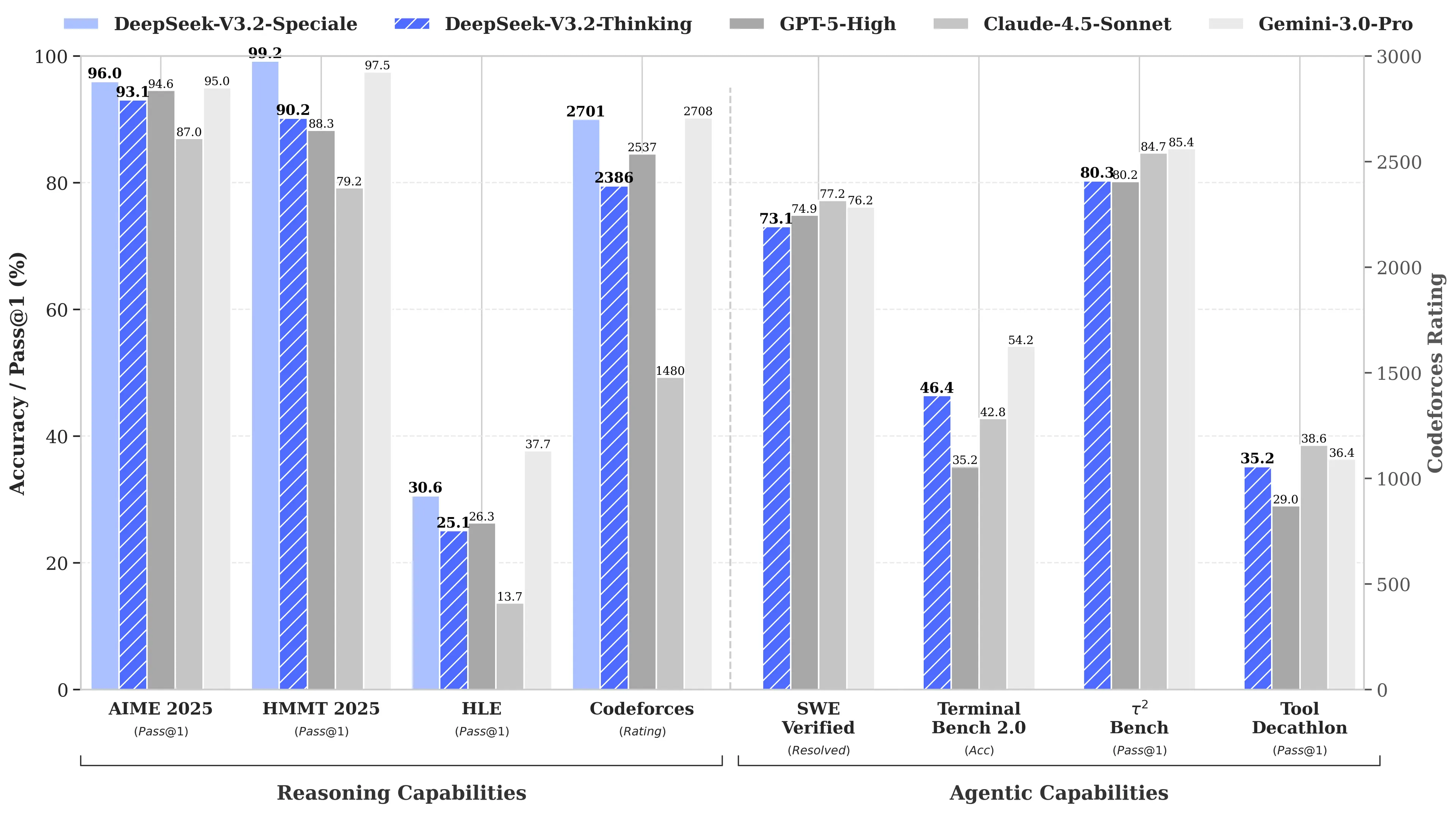

The reason? DeepSeek’s R1 matched OpenAI’s o1 model on math and reasoning benchmarks despite reportedly costing just $6 million to develop, roughly 68 times cheaper than what competitors were spending. Its V3 model later hit 90.2% on the MATH-500 benchmark, blowing past Claude’s 78.3%, and the recent update “V3.2 Speciale” improved its performance even more.

V4’s coding focus would be a strategic pivot. While R1 emphasized pure reasoning (logic, math, formal proofs), V4 is a hybrid model (reasoning and non-reasoning tasks) that targets the enterprise developer market, where high-accuracy code generation translates directly to revenue.

To claim dominance, V4 would need to beat Claude Opus 4.5, which currently holds the SWE-bench Verified record at 80.9%. But if DeepSeek’s past launches are any guide, this may not be impossible to achieve, even with all the constraints a Chinese AI lab faces.

The not-so-secret sauce

Assuming the rumors are true, how can this small lab achieve such a feat?

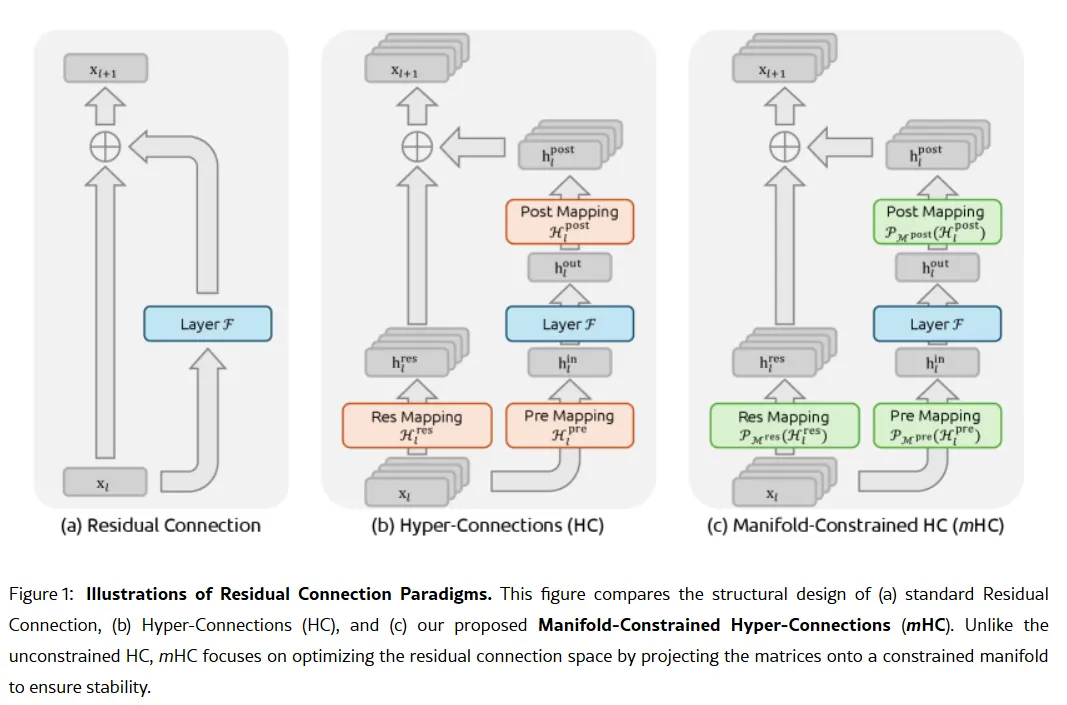

The company’s secret weapon could be contained in its January 1 research paper: Manifold-Constrained Hyper-Connections, or mHC. Co-authored by founder Liang Wenfeng, the new training method addresses a fundamental problem in scaling large language models: how to expand a model’s capacity without it becoming unstable or exploding during training.

Traditional AI architectures force all information through a single narrow pathway. mHC widens that pathway into multiple streams that can exchange information without causing training collapse.
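To make the "multiple streams" idea concrete, here is a toy sketch in plain Python. It is not DeepSeek's implementation, and the article gives no details of the actual method. The sketch assumes, purely for illustration, that the streams are mixed by a matrix whose rows and columns each sum to 1, obtained with Sinkhorn-style normalization. The function names (`sinkhorn`, `mix_streams`, `total_signal`) and all sizes are hypothetical.

```python
import math
import random


def sinkhorn(logits, iterations=50):
    """Turn a square matrix of raw scores into one whose rows and
    columns each sum to roughly 1 (assumed constraint for this sketch)."""
    n = len(logits)
    # Exponentiate so every entry is strictly positive.
    m = [[math.exp(v) for v in row] for row in logits]
    for _ in range(iterations):
        # Normalize each row to sum to 1.
        m = [[v / sum(row) for v in row] for row in m]
        # Normalize each column to sum to 1.
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    return m


def mix_streams(streams, mixing):
    """Let parallel streams exchange information: each output stream
    is a weighted combination of all input streams."""
    n, width = len(streams), len(streams[0])
    return [
        [sum(mixing[i][j] * streams[j][k] for j in range(n)) for k in range(width)]
        for i in range(n)
    ]


def total_signal(streams):
    """Sum of every value across all streams."""
    return sum(sum(stream) for stream in streams)


if __name__ == "__main__":
    rng = random.Random(0)
    n_streams = 4  # hypothetical number of parallel streams
    logits = [[rng.uniform(-1, 1) for _ in range(n_streams)] for _ in range(n_streams)]
    mixing = sinkhorn(logits)

    streams = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
    mixed = streams
    for _ in range(20):  # pretend to pass through 20 layers
        mixed = mix_streams(mixed, mixing)

    print("total before:", total_signal(streams))
    print("total after: ", total_signal(mixed))
```

Because every column of the mixing matrix sums to 1, the total signal carried across the streams stays the same no matter how many layers are stacked, which is one simple way to picture widening the pathway without the values growing or vanishing.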

Wei Sun, principal analyst for AI at Counterpoint Research, called mHC a “striking breakthrough” in comments to Business Insider. The technique, she said, shows DeepSeek can “bypass compute bottlenecks and unlock leaps in intelligence,” even with limited access to advanced chips due to U.S. export restrictions.

Lian Jye Su, chief analyst at Omdia, noted that DeepSeek’s willingness to publish its methods signals a “newfound confidence in the Chinese AI industry.” The company’s open-source approach has made it a darling among developers who see it as embodying what OpenAI used to be, before it pivoted to closed models and billion-dollar fundraising rounds.

Not everyone seems to be satisfied. Some builders on Reddit complain that DeepSeek’s reasoning fashions waste compute on easy duties, whereas critics argue the corporate’s benchmarks do not mirror real-world messiness. One Medium publish titled “DeepSeek Sucks—And I am Accomplished Pretending It Would not” went viral in April 2025, accusing the fashions of manufacturing “boilerplate nonsense with bugs” and “hallucinated libraries.”

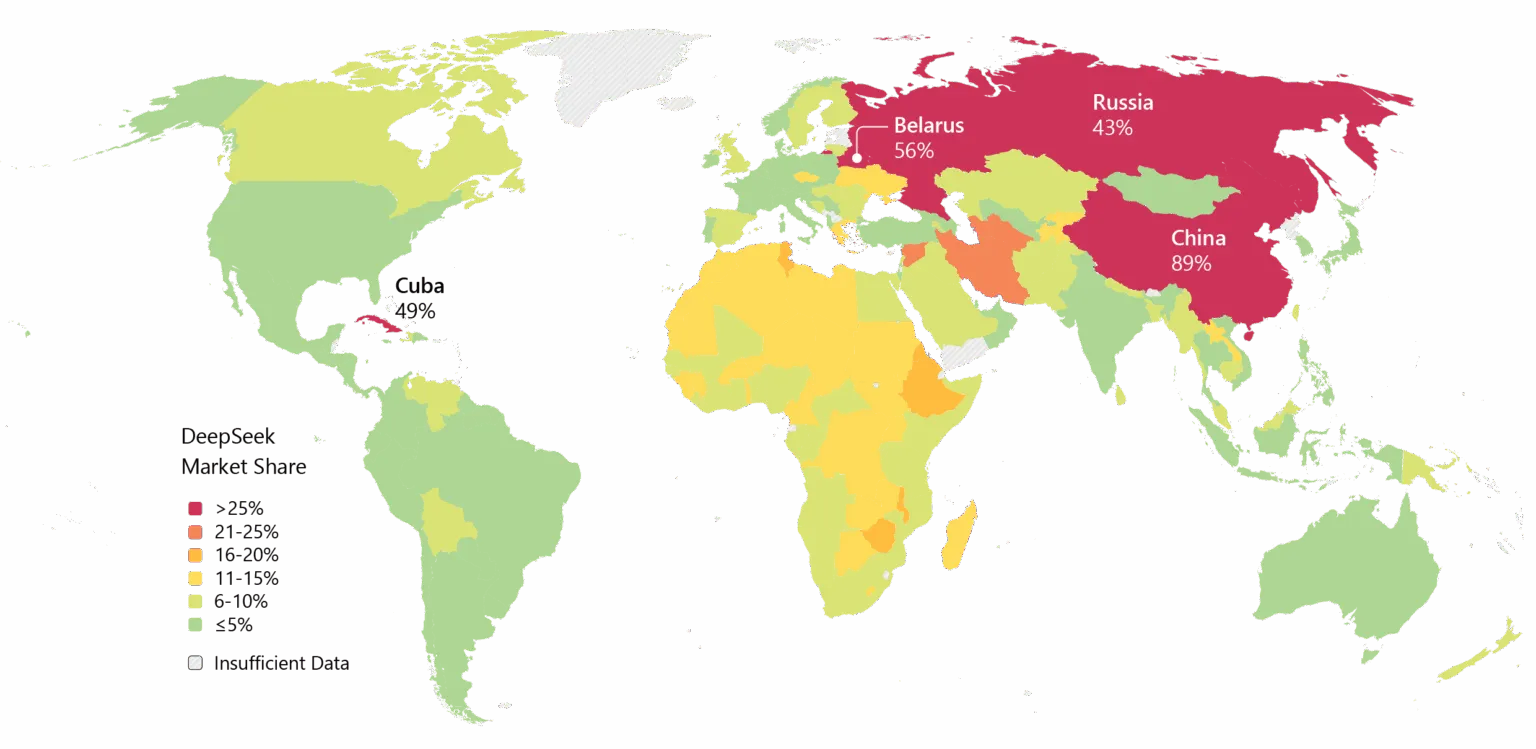

DeepSeek also carries baggage. Privacy concerns have plagued the company, with some governments banning DeepSeek’s native app. The company’s ties to China and questions about censorship in its models add geopolitical friction to technical debates.

Still, the momentum is undeniable. DeepSeek has been widely adopted in Asia, and if V4 delivers on its coding promises, enterprise adoption in the West could follow.

There’s also the timing. According to Reuters, DeepSeek had originally planned to release its R2 model in May 2025, but extended the runway after founder Liang became dissatisfied with its performance. Now, with V4 reportedly targeting February and R2 potentially following in August, the company is moving at a pace that suggests urgency, or confidence. Maybe both.
