In short

- Multiverse’s CompactifAI tech reportedly slashed parameter rely by 70% and mannequin reminiscence by 93%, whereas preserving 97–98% accuracy.

- The corporate simply closed a $215M Collection B spherical backed by Bullhound Capital, HP Tech Ventures, and Toshiba.

- The strategy makes use of tensor networks from quantum physics to compress fashions and “heals” them with quick retraining, claiming 50% quicker efficiency at inference.

A Spanish AI startup has simply satisfied traders at hand over $215 million primarily based on a daring declare: they’ll shrink massive language fashions by 95% with out compromising their efficiency.

Multiverse Computing’s innovation hinges on its CompactifAI know-how, a compression technique that borrows mathematical ideas from quantum physics to shrink AI fashions right down to smartphone dimension.

The San Sebastian firm says that their compressed Llama-2 7B mannequin runs 25% quicker at inference whereas utilizing 70% fewer parameters, with accuracy dropping simply 2-3%.

If validated at scale, this might handle AI’s elephant-sized downside: fashions so large they require specialised knowledge facilities simply to function.

“For the primary time in historical past, we’re in a position to profile the interior workings of a neural community to remove billions of spurious correlations to really optimize all types of AI fashions,” Román Orús, Multiverse’s chief scientific officer, stated in a weblog put up on Thursday.

Bullhound Capital led the $215 million Collection B spherical with backing from HP Tech Ventures and Toshiba.

The Physics Behind the Compression

Making use of quantum-inspired ideas to sort out one in every of AI’s most urgent points sounds inconceivable—but when the analysis holds up, it’s actual.

Not like conventional compression that merely cuts neurons or reduces numerical precision, CompactifAI makes use of tensor networks—mathematical constructions that physicists developed to trace particle interactions with out drowning in knowledge.

The method works like an origami for AI fashions: weight matrices get folded into smaller, interconnected constructions known as Matrix Product Operators.

As an alternative of storing each connection between neurons, the system preserves solely significant correlations whereas discarding redundant patterns, like info or relationships which can be repeated over and over.

Multiverse found that AI fashions aren’t uniformly compressible. Early layers show fragile, whereas deeper layers—lately proven to be much less essential for efficiency—can stand up to aggressive compression.

This selective method lets them obtain dramatic dimension reductions the place different strategies fail.

After compression, fashions endure transient “therapeutic”—retraining that takes lower than one epoch due to the lowered parameter rely. The corporate claims this restoration course of runs 50% quicker than coaching authentic fashions attributable to decreased GPU-CPU switch masses.

Lengthy story quick—per the corporate’s personal presents—you begin with a mannequin, run the Compactify magic, and find yourself with a compressed model that has lower than 50% of its parameters, can run at twice the inference pace, prices lots much less, and is simply as succesful as the unique.

In its analysis, the staff exhibits you’ll be able to cut back the Llama-2 7B mannequin’s reminiscence wants by 93%, reduce the variety of parameters by 70%, pace up coaching by 50%, and pace up answering (inference) by 25%—whereas solely dropping 2–3% accuracy.

Conventional shrinking strategies like quantization (lowering the precision like utilizing fewer decimal locations), pruning (chopping out much less essential neurons solely, like trimming lifeless branches from a tree), or distillation strategies (coaching a smaller mannequin to imitate a bigger one’s conduct) are usually not even near attaining these numbers.

Multiverse already serves over 100 purchasers together with Bosch and Financial institution of Canada, making use of their quantum-inspired algorithms past AI to power optimization and monetary modeling.

The Spanish authorities co-invested €67 million in March, pushing whole funding above $250 million.

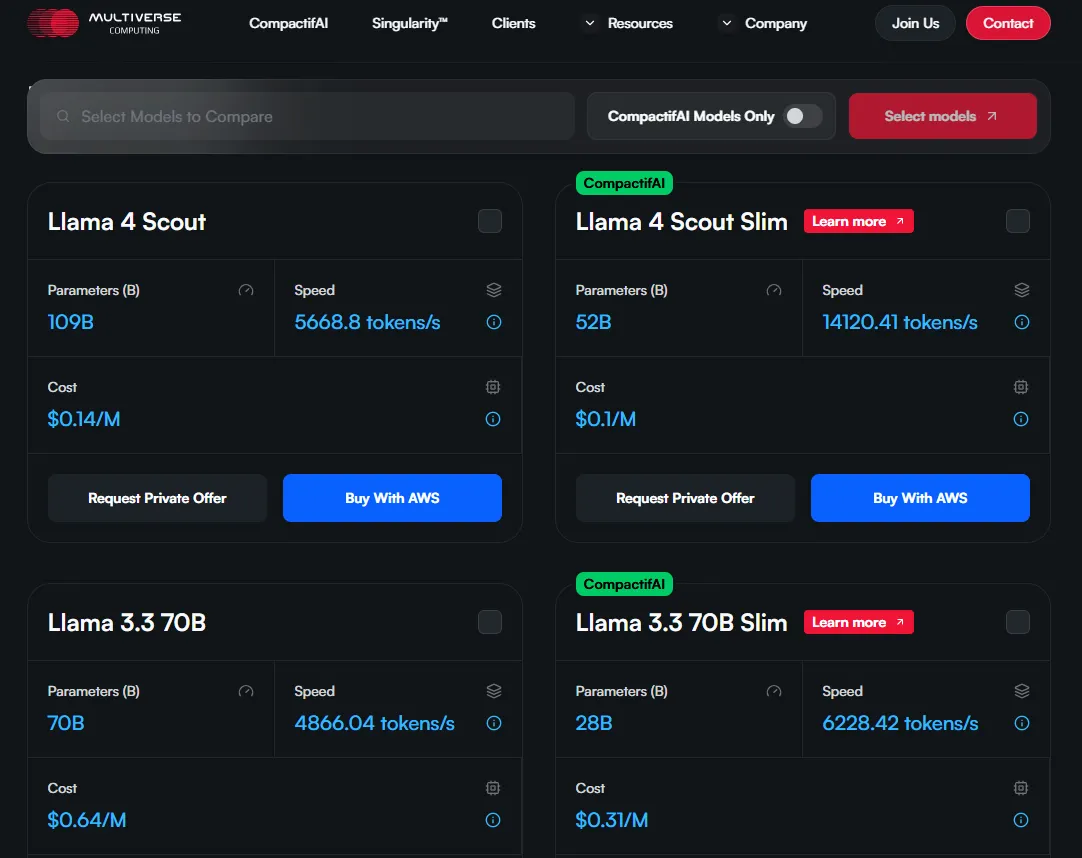

At present providing compressed variations of open-source fashions like Llama and Mistral via AWS, the corporate plans to increase to DeepSeek R1 and different reasoning fashions.

Proprietary methods from OpenAI or Claude stay clearly off-limits since they don’t seem to be out there for tinkering or research.

The know-how’s promise extends past value financial savings measures. HP Tech Ventures’ involvement indicators curiosity in edge AI deployment—operating refined fashions domestically quite than cloud servers.

“Multiverse’s progressive method has the potential to deliver AI advantages of enhanced efficiency, personalization, privateness and value effectivity to life for firms of any dimension,” Tuan Tran, HP’s President of Know-how and Innovation, stated.

So, if you end up operating DeepSeek R1 in your smartphone sometime, these dudes stands out as the ones to thank.

Edited by Josh Quittner and Sebastian Sinclair

Usually Clever E-newsletter

A weekly AI journey narrated by Gen, a generative AI mannequin.