Stanford University researchers have developed AI agents that can predict human behavior with remarkable accuracy. A recent study, led by Dr. Joon Sung Park and his team, demonstrates that a two-hour interview provides enough data for AI to replicate human decision-making patterns with 85% normalized accuracy.

Digital clones of a physical person go beyond deepfakes or the “low-rank adaptations” known as LoRAs. These accurate representations of personalities could be used to profile users and test their responses to various stimuli, from political campaigns to policy proposals, mood assessments, and even more lifelike versions of current AI avatars.

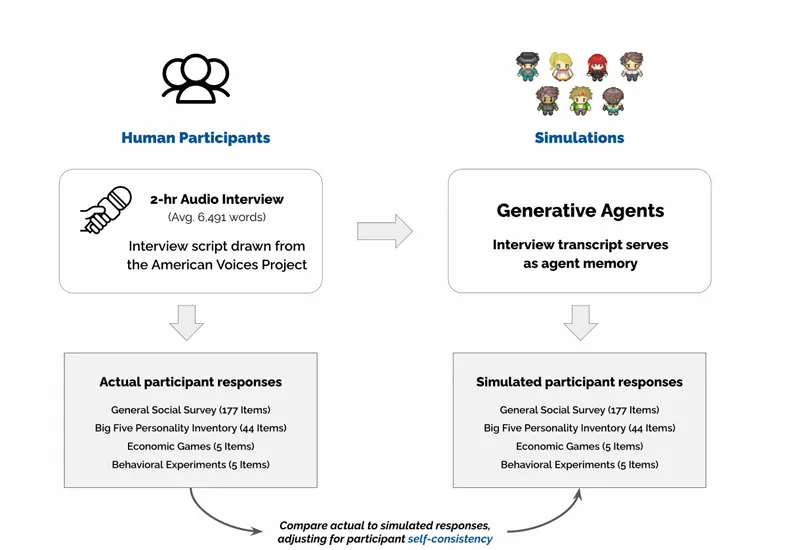

The research team recruited 1,052 Americans, carefully selected to represent diverse demographics across age, gender, race, region, education, and political ideology. Each participant engaged in a two-hour conversation with an AI interviewer, producing transcripts averaging 6,491 words. The interviews, following a modified version of the American Voices Project protocol, explored participants’ life stories, values, and perspectives on current social issues.

And that’s all you need to be profiled and have a clone.

But unlike other studies, the researchers took a different approach to processing interview data. Instead of simply feeding raw transcripts into their systems, they developed an “expert reflection” module. This analysis tool examines each interview through multiple professional lenses: a psychologist’s perspective on personality traits, a behavioral economist’s view of decision-making patterns, a political scientist’s analysis of ideological stances, and a demographic expert’s contextual interpretation.
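The idea of reading one transcript through several expert lenses can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the prompt wording, the names `EXPERT_LENSES`, `build_reflection_prompt`, and `reflect`, and the stub model `echo_llm` are all hypothetical and are not taken from the study, which does not publish its implementation in this article.

```python
from typing import Callable, Dict

# The four lenses named in the article, paired with what each one focuses on.
# The exact wording is an assumption made for this sketch.
EXPERT_LENSES: Dict[str, str] = {
    "psychologist": "personality traits",
    "behavioral economist": "decision-making patterns",
    "political scientist": "ideological stances",
    "demographic expert": "demographic context",
}


def build_reflection_prompt(role: str, focus: str, transcript: str) -> str:
    """Build one prompt asking a model to read the transcript as a given expert."""
    return (
        f"You are a {role}. Read the interview transcript below and "
        f"summarize what it reveals about the participant's {focus}.\n\n"
        f"Transcript:\n{transcript}"
    )


def reflect(transcript: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Run the transcript through every lens and collect one note per expert.

    `llm` is any function that maps a prompt string to a response string,
    so a real model client can be plugged in without changing this code.
    """
    return {
        role: llm(build_reflection_prompt(role, focus, transcript))
        for role, focus in EXPERT_LENSES.items()
    }


def echo_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, used only for the demo."""
    return f"[stub reflection for a prompt of {len(prompt)} characters]"


notes = reflect("I grew up in Ohio and I usually save before I spend.", echo_llm)
for role, note in notes.items():
    print(f"{role}: {note}")
```

Passing the model in as a plain function keeps the sketch runnable without any API key. In a real pipeline the resulting notes would presumably be stored alongside the transcript and given to the agent when it answers on the participant's behalf.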

Once this multi-dimensional analysis is done, the AI is better able to understand how the subject’s personality works, gaining deeper insight than could be achieved by simply trying to predict the most likely behavior based on statistics. The result is a group of AI agents powered by GPT-4o with the ability to replicate human behavior in different controlled scenarios.

Testing proved remarkably successful. “The generative agents replicate participants’ responses on the General Social Survey 85% as accurately as participants replicate their own answers two weeks later, and perform comparably in predicting personality traits and outcomes in experimental replications,” the study says. The system showed similar prowess in replicating Big Five personality traits, achieving a correlation of 0.78, and demonstrated significant accuracy in economic decision-making games with a 0.66 normalized correlation. (A correlation coefficient of 1 would indicate a perfect positive correlation.)
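The “normalized” figure is easier to read with a small worked example. The study’s description implies that an agent’s raw accuracy is divided by how consistently participants reproduce their own answers two weeks later. The Python sketch below follows that reading. The function names and the ten survey answers are invented for illustration and are not data from the study.

```python
from typing import Sequence


def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of positions where the two answer lists agree."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("answer lists must be non-empty and equally long")
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)


def normalized_accuracy(agent_accuracy: float, self_consistency: float) -> float:
    """Agent accuracy relative to the participant's own test-retest consistency."""
    if not 0 < self_consistency <= 1:
        raise ValueError("self_consistency must be in (0, 1]")
    return agent_accuracy / self_consistency


# Hypothetical answers to ten survey questions (illustrative only).
first_session = ["agree", "disagree", "agree", "neutral", "agree",
                 "disagree", "agree", "agree", "neutral", "disagree"]
two_weeks_later = ["agree", "disagree", "agree", "agree", "agree",
                   "disagree", "agree", "neutral", "neutral", "disagree"]
agent_answers = ["agree", "disagree", "neutral", "agree", "agree",
                 "agree", "agree", "agree", "disagree", "disagree"]

self_consistency = accuracy(two_weeks_later, first_session)  # 8 of 10 match
agent_raw = accuracy(agent_answers, first_session)           # 6 of 10 match
score = normalized_accuracy(agent_raw, self_consistency)

print(f"self-consistency: {self_consistency:.2f}")
print(f"agent raw accuracy: {agent_raw:.2f}")
print(f"normalized accuracy: {score:.2f}")
```

In this made-up case the agent matches 6 of 10 answers while the participant matches their own earlier answers 8 of 10 times, so the normalized score is 0.75. A normalized score of 1.0 would mean the agent predicts a person as well as that person predicts themselves.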

Particularly noteworthy was the system’s reduced bias across racial and ideological groups compared to traditional demographic-based approaches. Such bias seems to be a problem for many AI systems, which struggle to find a balance between stereotyping (assuming a subject would exhibit traits of the group it’s included in) and being overly inclusive (avoiding statistical or historical factual assumptions in order to be politically correct).

“Our architecture reduces accuracy biases across racial and ideological groups compared to agents given demographic descriptions,” the researchers emphasized, suggesting their interview-based method could be very helpful in demographic profiling.

But this isn’t the first effort to use AI for profiling people.

In Japan, alt Inc.’s CLONEdev platform has been experimenting with personality generation through lifelog data integration. Their system combines advanced language processing with image generation to create digital clones that reflect users’ values and preferences. “Through our P.A.I technology, we’re committed to work towards digitizing the entire human race,” alt Inc. said in an official blog post.

And sometimes you don’t even need a tailored interview. Take MileiGPT for example. An AI researcher from Argentina was able to fine-tune an open source LLM with thousands of hours of publicly available content and replicate Argentine President Javier Milei’s communication patterns and decision-making processes. These advances have led researchers to explore the idea of thinking, sentient “digital twins,” which technology analysts and experts like Rob Enderle believe could be fully functional in the next 10 years.

And of course, if AI robots won’t take your job, your AI twin probably will. “The emergence of these will need a huge amount of thought and ethical consideration, because a thinking replica of ourselves could be incredibly useful to employers,” Enderle told the BBC. “What happens if your company creates a digital twin of you, and says, ‘Hey, you’ve got this digital twin who we pay no salary to, so why are we still employing you?’”

Things may look a little scary. Not only will deepfakes mimic your looks, but AI clones will be able to mimic your decisions based on a short profiling of your behavior. While Stanford’s researchers have made sure to put safeguards in place, it’s clear that the line between human and digital identity is becoming increasingly blurred. And we’re already crossing it.
