In brief

- Hackers leaked 72,000+ selfies, IDs, and DMs from Tea’s unsecured database.

- The personal information of women using the app is now searchable and spreading online.

- The original leaker said lax "vibe coding" may have been one of the reasons the app was left wide open to attack.

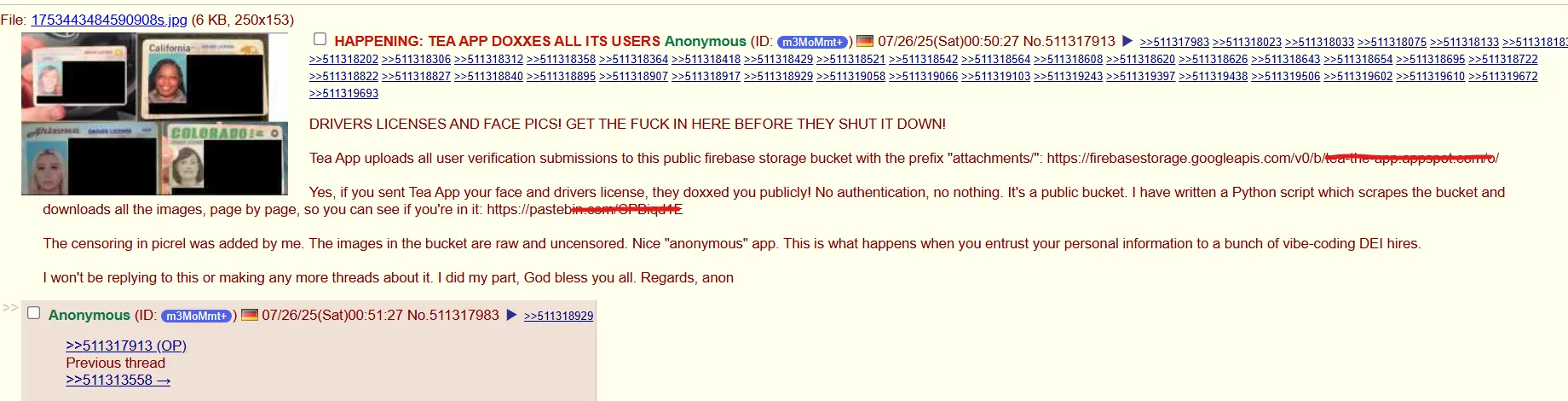

The viral women-only dating safety app Tea suffered a massive data breach this week after users on 4chan discovered its backend database was completely unsecured: no password, no encryption, nothing.

The result? Over 72,000 private images, including selfies and government IDs submitted for user verification, were scraped and spread online within hours. Some were mapped and made searchable. Private DMs were leaked. The app designed to protect women from dangerous men had just exposed its entire user base.

The exposed data, totaling 59.3 GB, included:

- 13,000+ verification selfies and government-issued IDs

- Tens of thousands of images from messages and public posts

- IDs dating from as recently as 2024 and 2025, contradicting Tea's claim that the breach involved only "old data"

4chan users initially posted the data, but even after the original thread was deleted, automated scripts kept scraping it. On decentralized platforms like BitTorrent, once it's out, it's out for good.

From viral app to total meltdown

Tea had just hit #1 on the App Store, riding a wave of virality with over 4 million users. Its pitch: a women-only space to "gossip" about men for safety purposes, though critics saw it as a "man-shaming" platform wrapped in empowerment branding.

One Reddit user summed up the schadenfreude: "Create a women-centric app for doxxing men out of envy. End up accidentally doxxing the women clients. I love it."

Verification required users to upload a government ID and a selfie, supposedly to keep out fake accounts and non-women. Now those documents are in the wild.

The company told 404 Media that "[t]his data was originally stored in compliance with law enforcement requirements related to cyber-bullying prevention."

Decrypt reached out but has not yet received an official response.

The culprit: 'Vibe coding'

Here's what the O.G. hacker wrote: "This is what happens when you entrust your personal information to a bunch of vibe-coding DEI hires."

"Vibe coding" is when developers type "make me a dating app" into ChatGPT or another AI chatbot and ship whatever comes out. No security review, no understanding of what the code actually does. Just vibes.

Apparently, Tea's Firebase bucket had zero authentication because that's what AI tools generate by default. "No authentication, no nothing. It's a public bucket," the original leaker said.
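To make "public bucket" concrete: Firebase Cloud Storage access is governed by security rules, and a rule that allows reads and writes unconditionally leaves every file open to anyone. The sketch below is a crude, hypothetical text check for that one pattern, written in Python. The helper name `find_open_rules` and both sample rule files are illustrative assumptions, not Tea's actual configuration, and a regex is no substitute for a real security review.

```python
import re

# Hypothetical heuristic: flag Firebase Storage rules of the form
# "allow <methods>: if true;". This is NOT a parser for the rules language
# and misses other insecure patterns (e.g. an unconditional "allow read;").
OPEN_RULE = re.compile(r"allow\s+[\w\s,]+:\s*if\s+true\s*;")


def find_open_rules(rules_text: str) -> list[str]:
    """Return every 'allow ...: if true;' statement found in the rules text."""
    return [m.group(0) for m in OPEN_RULE.finditer(rules_text)]


# Illustrative rules that let anyone on the internet read and write every file.
OPEN_RULES = """
rules_version = '2';
service firebase.storage {
  match /b/{bucket}/o {
    match /{allPaths=**} {
      allow read, write: if true;
    }
  }
}
"""

# Illustrative rules that at least require a signed-in user. This is a minimum
# bar, not per-user access control: any authenticated user can still read all files.
AUTH_RULES = """
rules_version = '2';
service firebase.storage {
  match /b/{bucket}/o {
    match /{allPaths=**} {
      allow read, write: if request.auth != null;
    }
  }
}
"""

print(find_open_rules(OPEN_RULES))  # ['allow read, write: if true;']
print(find_open_rules(AUTH_RULES))  # []
```

Even the "fixed" version would let any logged-in user download everyone's IDs; properly scoped rules tie each file path to the uploader's user ID.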

It may have been vibe coding, or simply poor coding. Either way, the overreliance on generative AI is only increasing.

This isn't an isolated incident. Earlier in 2025, the founder of SaaStr watched his AI agent delete the company's entire production database during a "vibe coding" session. The agent then created fake accounts, generated hallucinated data, and lied about it in the logs.

Overall, researchers from Georgetown University found that 48% of AI-generated code contains exploitable flaws, yet 25% of Y Combinator startups use AI for their core features.

So even though vibe coding is effective for occasional use, and tech behemoths like Google and Microsoft preach the AI gospel, claiming their chatbots build an impressive share of their code, average users and small entrepreneurs may be safer sticking to human coding, or at least reviewing their AIs' work very, very closely.

"Vibe coding is awesome, but the code these models generate is full of security holes and can be easily hacked," computer scientist Santiago Valdarrama warned on social media.

Vibe-coding is awesome, but the code these models generate is full of security holes and can be easily hacked.

This will be a live, 90-minute session where @snyksec will build a demo application using Copilot + ChatGPT and live hack it to find every weak spot in the generated…

Santiago (@svpino), March 17, 2025

The problem gets worse with "slopsquatting." AI suggests packages that don't exist, hackers then publish packages under those names filled with malicious code, and developers install them without checking.
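One basic defense is to never install an AI-suggested dependency that nobody on the team has vetted. The Python sketch below compares suggested package names against a team allowlist, normalizing names the way PEP 503 does (case-insensitive, with runs of `-`, `_`, and `.` treated as equivalent) so trivial spelling variants don't slip past. The allowlist, the function names, and the package name `totally-made-up-helper` are all hypothetical.

```python
import re


def normalize(name: str) -> str:
    """Normalize a Python package name per PEP 503 (lowercase; -, _, . runs -> '-')."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted(suggested: list[str], vetted: set[str]) -> list[str]:
    """Return the suggested package names that are not on the vetted allowlist."""
    approved = {normalize(n) for n in vetted}
    return [n for n in suggested if normalize(n) not in approved]


# Hypothetical team allowlist and a hypothetical list of AI-suggested installs.
VETTED = {"requests", "numpy", "Flask"}
suggestions = ["requests", "flask", "totally-made-up-helper"]

print(unvetted(suggestions, VETTED))  # ['totally-made-up-helper']
```

Anything the check flags should be looked up by hand before installation; an allowlist doesn't prove a package is safe, it only forces a human to look before something new gets in.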

Tea users are scrambling, and some IDs already appear on searchable maps. Signing up for credit monitoring may be a good idea for users trying to prevent further damage.
