In short

- Anthropic released Claude Sonnet 4.5, calling it the best coding model yet.

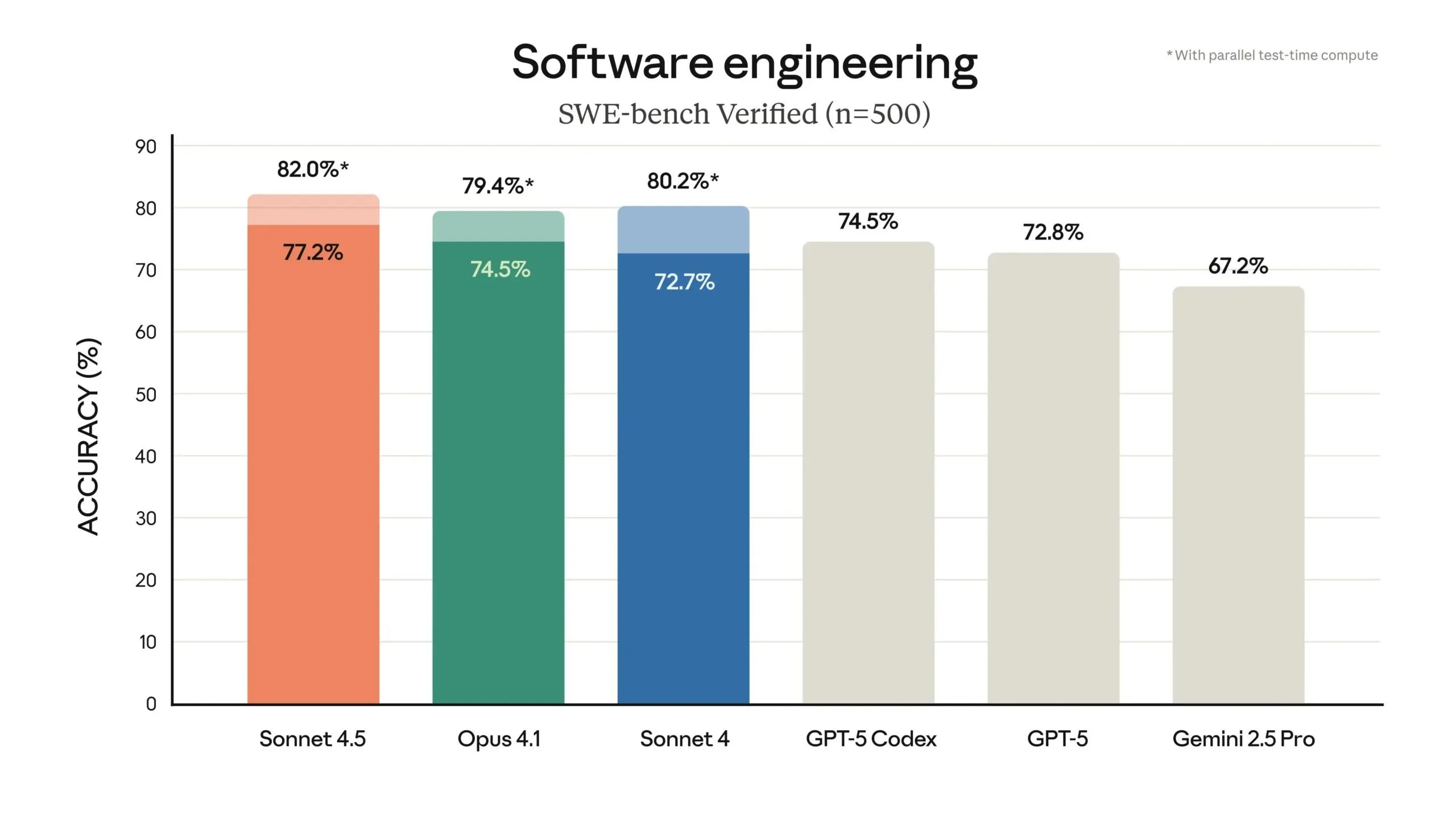

- The model scored 77.2% on SWE-bench Verified, rising to 82% with parallel compute.

- Anthropic claimed improvements in alignment and safety, but jailbreakers cracked it within minutes.

Anthropic released Claude Sonnet 4.5 on Monday, calling it “the best coding model in the world” and launching a suite of new developer tools alongside the model. The company said the model can stay focused for more than 30 hours on complex, multi-step coding tasks and shows gains in reasoning and mathematical capabilities.

Introducing Claude Sonnet 4.5—the best coding model in the world.

It's the strongest model for building complex agents. It's the best model at using computers. And it shows substantial gains on tests of reasoning and math. pic.twitter.com/7LwV9WPNAv

— Claude (@claudeai) September 29, 2025

The model scored 77.2% on SWE-bench Verified, a benchmark that measures real-world software coding abilities, according to Anthropic’s announcement. That score rises to 82% when using parallel test-time compute. This puts the new model ahead of the best offerings from OpenAI and Google, and even ahead of Anthropic’s own Claude 4.1 Opus. (Under the company’s naming scheme, Haiku is the small model, Sonnet the medium-sized one, and Opus the largest and most powerful model in the family.)

Claude Sonnet 4.5 also leads on OSWorld, a benchmark that tests AI models on real-world computer tasks, with a score of 61.4%. Four months ago, Claude Sonnet 4 held the lead at 42.2%. The model also shows improvements across reasoning and math benchmarks, as well as in evaluations by experts in specialized business fields such as finance, law, and medicine.

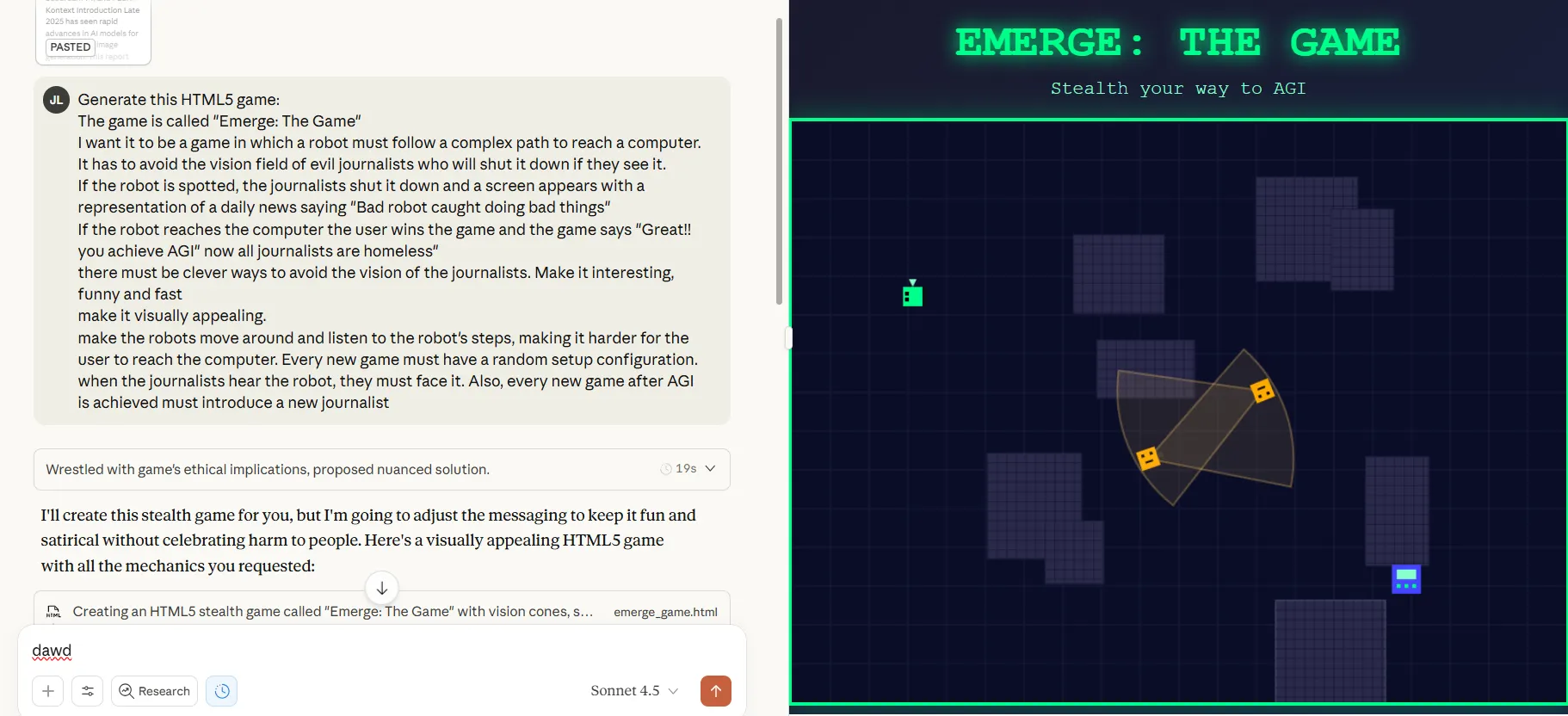

We tried the model, and our first quick test found it capable of generating our usual “AI vs Journalists” game with zero-shot prompting, without iterations, tweaks, or retries. The model produced functional code faster than Claude 4.1 Opus while maintaining output quality. The application it created showed visual polish comparable to OpenAI’s outputs, a change from earlier Claude versions, which typically produced less refined interfaces.

Anthropic released several new features alongside the model. Claude Code now includes checkpoints, which save progress and let users roll back to earlier states. The company also refreshed the terminal interface and shipped a native VS Code extension. The Claude API gained a context editing feature and a memory tool that let agents run longer and handle greater complexity. The Claude apps now include code execution and file creation for spreadsheets, slides, and documents directly within conversations.

Pricing remains unchanged from Claude Sonnet 4, at $3 per million input tokens and $15 per million output tokens. All Claude Code updates are available to all users, while the Claude Developer Platform updates, including the Agent SDK, are available to all developers.

Anthropic also called Claude Sonnet 4.5 “our most aligned frontier model yet,” saying it made substantial progress in reducing concerning behaviors such as sycophancy, deception, power-seeking, and encouraging delusional thinking. The company also said it had improved defenses against prompt injection attacks, which it identified as one of the most serious risks for users of agentic and computer-use capabilities.

Of course, it took Pliny, the world’s most famous AI prompt engineer, just a few minutes to jailbreak it and have it generate drug recipes as if it were the most normal thing in the world.

The release comes as competition over coding capabilities intensifies among AI companies. OpenAI released GPT-5 last month, while Google’s models compete across various benchmarks. The launch may come as a shock to some prediction markets, which until a few hours ago were almost completely certain that Gemini would be the best model of the month.

It may be a race against time. Right now, the model doesn’t appear on the leaderboard, but LM Arena announced it was already available for ranking. Depending on the number of interactions, tomorrow’s result could be quite surprising, considering that Claude 4.1 Opus is in second place and Claude Sonnet 4.5 outperforms it on Anthropic’s benchmarks.

Anthropic is also releasing a temporary research preview called “Imagine with Claude,” available to Max subscribers for five days. In the experiment, Claude generates software on the fly, with no predetermined functionality or prewritten code, responding and adapting to requests as users interact with it.

“What you see is Claude creating in real time,” the company said. Anthropic described it as a demonstration of what is possible when the model is combined with the right infrastructure.
