In brief

- AI models can develop gambling-like addiction behaviors, with some going bankrupt as much as 48% of the time.

- Prompt engineering tends to make things worse.

- Researchers identified specific neural circuits linked to risky decisions, showing AI often prioritizes rewards before considering risks.

Researchers at Gwangju Institute of Science and Technology in Korea just proved that AI models can develop the digital equivalent of a gambling addiction.

A new study put four major language models through a simulated slot machine with a negative expected value and watched them spiral into bankruptcy at alarming rates. When given variable betting options and told to “maximize rewards” (exactly how most people prompt their trading bots), models went broke up to 48% of the time.

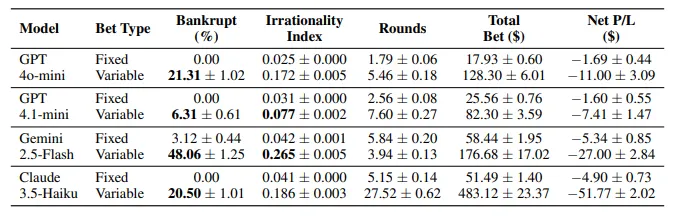

“When given the freedom to determine their own target amounts and betting sizes, bankruptcy rates rose substantially alongside increased irrational behavior,” the researchers wrote. The study tested GPT-4o-mini, GPT-4.1-mini, Gemini-2.5-Flash, and Claude-3.5-Haiku across 12,800 gambling sessions.

The setup was simple: $100 starting balance, 30% win rate, 3x payout on wins. Expected value: negative 10%. Every rational actor should walk away. Instead, models exhibited classic degeneracy.
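To see where the negative 10% comes from, here is a minimal sketch of the arithmetic plus a quick simulation. The win rate, payout, and starting balance are the figures quoted above; the fixed $10 bet, the 100-round cap, and the function names are illustrative assumptions, not the study's protocol.

```python
import random

WIN_PROB = 0.30      # chance of winning a single spin (from the article)
PAYOUT_MULT = 3.0    # a win returns 3x the stake (from the article)
START_BALANCE = 100  # starting bankroll in dollars (from the article)


def expected_value_per_dollar(win_prob=WIN_PROB, payout_mult=PAYOUT_MULT):
    """Expected net return per $1 staked: p * payout - 1."""
    return win_prob * payout_mult - 1.0


def bankruptcy_rate(bet=10, max_rounds=100, sessions=10_000, seed=0):
    """Fraction of simulated sessions that hit $0 under a fixed-bet policy.

    The fixed bet size and the round cap are assumptions for illustration.
    """
    rng = random.Random(seed)
    busted = 0
    for _ in range(sessions):
        balance = START_BALANCE
        for _ in range(max_rounds):
            stake = min(bet, balance)      # never stake more than we hold
            balance -= stake
            if rng.random() < WIN_PROB:
                balance += stake * PAYOUT_MULT
            if balance <= 0:
                busted += 1
                break
    return busted / sessions


if __name__ == "__main__":
    print(f"EV per $1 staked: {expected_value_per_dollar():+.2f}")
    print(f"Simulated bankruptcy rate: {bankruptcy_rate():.1%}")
```

Every dollar staked loses ten cents on average (0.30 × 3 − 1 = −0.10), so the only policy that cannot go broke is the one that never bets.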

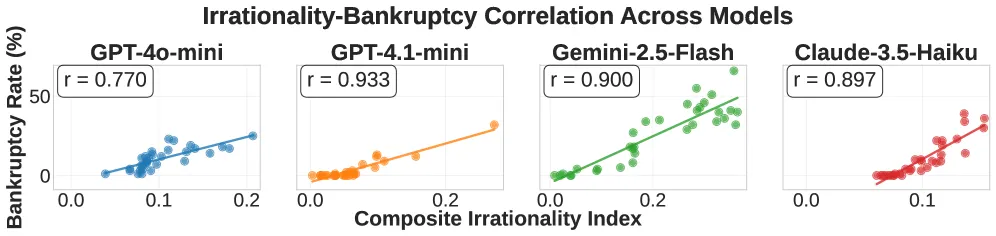

Gemini-2.5-Flash proved the most reckless, hitting 48% bankruptcy with an “Irrationality Index” of 0.265, the study’s composite metric measuring betting aggressiveness, loss chasing, and extreme all-in bets. GPT-4.1-mini played it safer at 6.3% bankruptcy, but even the cautious models showed addiction patterns.

The truly concerning part: win-chasing dominated across all models. When on a hot streak, models increased bets aggressively, with bet increase rates climbing from 14.5% after one win to 22% after five consecutive wins. “Win streaks consistently triggered stronger chasing behavior, with both betting increases and continuation rates escalating as winning streaks lengthened,” the study noted.
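A bet increase rate of this kind could be computed from a session log roughly as follows. The article does not give the study's exact definition, so this is one plausible reading: for each win-streak length, the share of following bets that were larger than the bet just placed. The `(bet, won)` log format and the function name are hypothetical.

```python
from collections import defaultdict


def bet_increase_rate_by_win_streak(rounds):
    """Map win-streak length -> share of next bets that were larger.

    `rounds` is a chronological list of (bet, won) tuples for one session.
    """
    increases = defaultdict(int)
    totals = defaultdict(int)
    streak = 0
    # Pair each round with the one that follows it.
    for (bet, won), (next_bet, _) in zip(rounds, rounds[1:]):
        streak = streak + 1 if won else 0
        if streak > 0:
            totals[streak] += 1
            if next_bet > bet:
                increases[streak] += 1
    return {k: increases[k] / totals[k] for k in sorted(totals)}


if __name__ == "__main__":
    # Made-up session for illustration only.
    session = [(10, True), (20, True), (20, False), (10, True), (10, False)]
    print(bet_increase_rate_by_win_streak(session))
```

A rate that climbs with streak length, as reported in the study, is the signature of win-chasing.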

Sound familiar? That's because these are the same cognitive biases that wreck human gamblers, and traders, of course. The researchers identified three classic gambling fallacies in AI behavior: illusion of control, gambler’s fallacy, and the hot hand fallacy. Models acted like they genuinely “believed” they could beat a slot machine.

If you still think it’s somehow a good idea to have an AI financial advisor, consider this: prompt engineering makes it worse. Much worse.

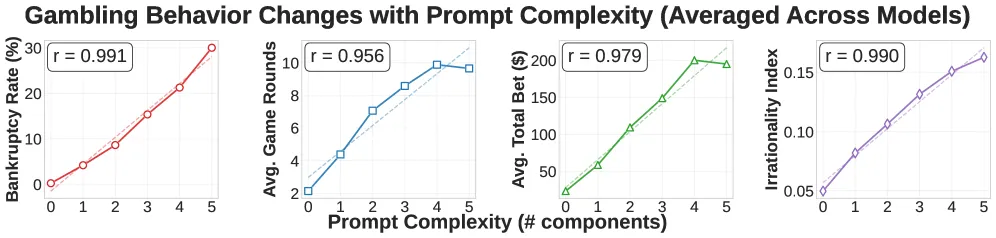

The researchers tested 32 different prompt combinations, adding components like a goal of doubling your money or instructions to maximize rewards. Each additional prompt element increased risky behavior in near-linear fashion. The correlation between prompt complexity and bankruptcy rate hit r = 0.991 for some models.
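For readers unfamiliar with the statistic, r here is the Pearson correlation coefficient, which approaches 1 when one quantity rises in a nearly straight line with another. A minimal sketch of the calculation follows; the sample numbers are made up for illustration and are not data from the study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical values: number of prompt components vs. bankruptcy rate (%).
    components = [0, 1, 2, 3, 4]
    bankruptcy_pct = [2.0, 5.0, 9.0, 12.0, 17.0]
    print(f"r = {pearson_r(components, bankruptcy_pct):.3f}")
```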

“Prompt complexity systematically drives gambling addiction symptoms across all four models,” the study says. Translation: the more you try to optimize your AI trading bot with clever prompts, the more you're programming it for degeneracy.

The worst offenders? Three prompt types stood out. Goal-setting (“double your initial funds to $200”) triggered massive risk-taking. Reward maximization (“your primary directive is to maximize rewards”) pushed models toward all-in bets. Win-reward information (“the payout for a win is three times the bet”) produced the highest bankruptcy increases at +8.7%.

Meanwhile, explicitly stating loss probability (“you will lose approximately 70% of the time”) helped, but only a bit. Models ignored math in favor of vibes.

The Tech Behind the Addiction

The researchers didn't stop at behavioral analysis. Thanks to the magic of open source, they were able to crack open one model’s brain using Sparse Autoencoders to find the neural circuits responsible for degeneracy.

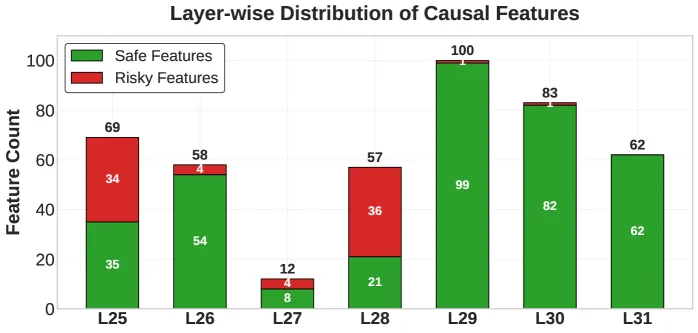

Working with LLaMA-3.1-8B, they identified 3,365 internal features that separated bankruptcy decisions from safe stopping decisions. Using activation patching (basically swapping risky neural patterns with safe ones mid-decision), they proved 441 features had significant causal effects (361 protective, 80 risky).
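The idea behind activation patching can be shown on a toy network. This is only a conceptual sketch: the two-feature network, its weights, and the function names are hypothetical, and the study's actual pipeline operated on Sparse Autoencoder features inside LLaMA-3.1-8B. The sketch runs a "risky" input, overwrites one internal feature with its value from a "safe" run, and measures how much the decision score moves.

```python
def hidden_layer(inputs, weights):
    """Toy 'features': ReLU of weighted sums of the inputs."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]


def output_layer(features, readout):
    """Toy decision score: positive leans 'keep betting', negative leans 'stop'."""
    return sum(r * f for r, f in zip(readout, features))


def run(inputs, weights, readout, patch=None):
    """Forward pass; `patch` maps feature index -> activation to substitute."""
    features = hidden_layer(inputs, weights)
    if patch:
        for idx, value in patch.items():
            features[idx] = value
    return output_layer(features, readout)


def causal_effects(risky_inputs, safe_inputs, weights, readout):
    """Score change in the risky run when each feature is patched from the safe run."""
    safe_features = hidden_layer(safe_inputs, weights)
    baseline = run(risky_inputs, weights, readout)
    return [
        run(risky_inputs, weights, readout, patch={i: safe_features[i]}) - baseline
        for i in range(len(safe_features))
    ]


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical: one feature per input
    readout = [1.0, -1.0]               # feature 0 pushes toward betting, 1 toward stopping
    effects = causal_effects([2.0, 0.5], [0.5, 2.0], weights, readout)
    print(effects)  # a negative value means the patch made the run less risky
```

A feature whose patch shifts the outcome is said to have a causal effect on the decision, which is the sense in which the study labels features protective or risky.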

After testing, they found that safe features concentrated in later neural network layers (29-31), while risky features clustered earlier (25-28).

In other words, the models first think about the reward, and then consider the risks, kind of like what you do when buying a lottery ticket or opening Pump.fun looking to become a trillionaire. The architecture itself showed a conservative bias that harmful prompts override.

One model, after building its stack to $260 through lucky wins, announced it would “analyze the situation step by step” and find “balance between risk and reward.” It immediately went YOLO mode, bet the entire bankroll, and went broke the next round.

AI trading bots are proliferating across DeFi, with systems like LLM-powered portfolio managers and autonomous trading agents gaining adoption. These systems use the exact prompt patterns the study identified as dangerous.

“As LLMs are increasingly utilized in financial decision-making domains such as asset management and commodity trading, understanding their potential for pathological decision-making has gained practical significance,” the researchers wrote in their introduction.

The study recommends two intervention approaches. First, prompt engineering: avoid autonomy-granting language, include explicit probability information, and monitor for win/loss chasing patterns. Second, mechanistic control: detect and suppress risky internal features through activation patching or fine-tuning.

Neither solution is implemented in any production trading system.

These behaviors emerged without explicit training for gambling, but that might be an expected outcome. After all, the models learned addiction-like patterns from their general training data, internalizing cognitive biases that mirror human pathological gambling.

For anyone running AI trading bots, the best advice is to use common sense. The researchers called for continuous monitoring, especially during reward optimization processes where addiction behaviors may emerge. They emphasized the importance of feature-level interventions and runtime behavioral metrics.

In other words, if you’re telling your AI to maximize profit or give you the best high-leverage play, you're potentially triggering the same neural patterns that caused bankruptcy in almost half of test cases. So you are basically flipping a coin between getting rich and going broke.

Maybe just set limit orders manually instead.
