In brief

- A Delhi IT worker claims he used ChatGPT to build a fake payment website that captured a scammer’s location and photo during an “army transfer” fraud attempt.

- The Reddit post went viral after the scammer allegedly panicked and begged for mercy once confronted with his own data.

- Other Reddit users replicated the technique and confirmed the AI-generated code could work, underscoring how generative tools are reshaping DIY scambaiting.

When a message popped up on his phone from a number claiming to belong to a former college contact, a Delhi-based information technology professional was initially intrigued. The sender, posing as an Indian Administrative Service officer, claimed a friend in the paramilitary forces was being transferred and needed to liquidate high-end furniture and appliances “dirt cheap.”

It was a classic “army transfer” fraud, a pervasive digital grift in India. But instead of blocking the number or falling victim to the scheme, the target claims that he decided to turn the tables using the very technology often accused of aiding cybercriminals: artificial intelligence.

Scamming a scammer

According to a detailed account posted on Reddit, the user, known by the handle u/RailfanHS, used OpenAI’s ChatGPT to “vibe code” a tracking website. The trap successfully harvested the scammer’s location and a photograph of his face, leading to a dramatic digital confrontation in which the fraudster reportedly begged for mercy.

While the identity of the Reddit user could not be independently verified, and the specific individual remains anonymous, the technical method described in the post has been scrutinized and validated by the platform’s community of developers and AI enthusiasts.

The incident highlights a growing trend of “scambaiting,” a form of vigilante justice in which tech-savvy individuals bait fraudsters to waste their time or expose their operations, which is now evolving with the help of generative AI.

The encounter, which was widely publicized in India, began with a familiar script. The scammer sent photos of goods and a QR code, demanding an upfront payment. Feigning technical difficulties with the scan, u/RailfanHS turned to ChatGPT.

He fed the AI chatbot a prompt to generate a functional webpage designed to mimic a payment portal. The code, described as an “80-line PHP webpage,” was secretly designed to capture the visitor’s GPS coordinates, IP address, and a front-camera snapshot.

The tracking mechanism relied as much on social engineering as on a software exploit. To get around browser security features that normally block silent camera access, the user told the scammer he needed to upload the QR code to the link to “expedite the payment process.” When the scammer visited the site and clicked a button to upload the image, the browser prompted him to allow camera and location access, permissions he unwittingly granted in his haste to secure the money.

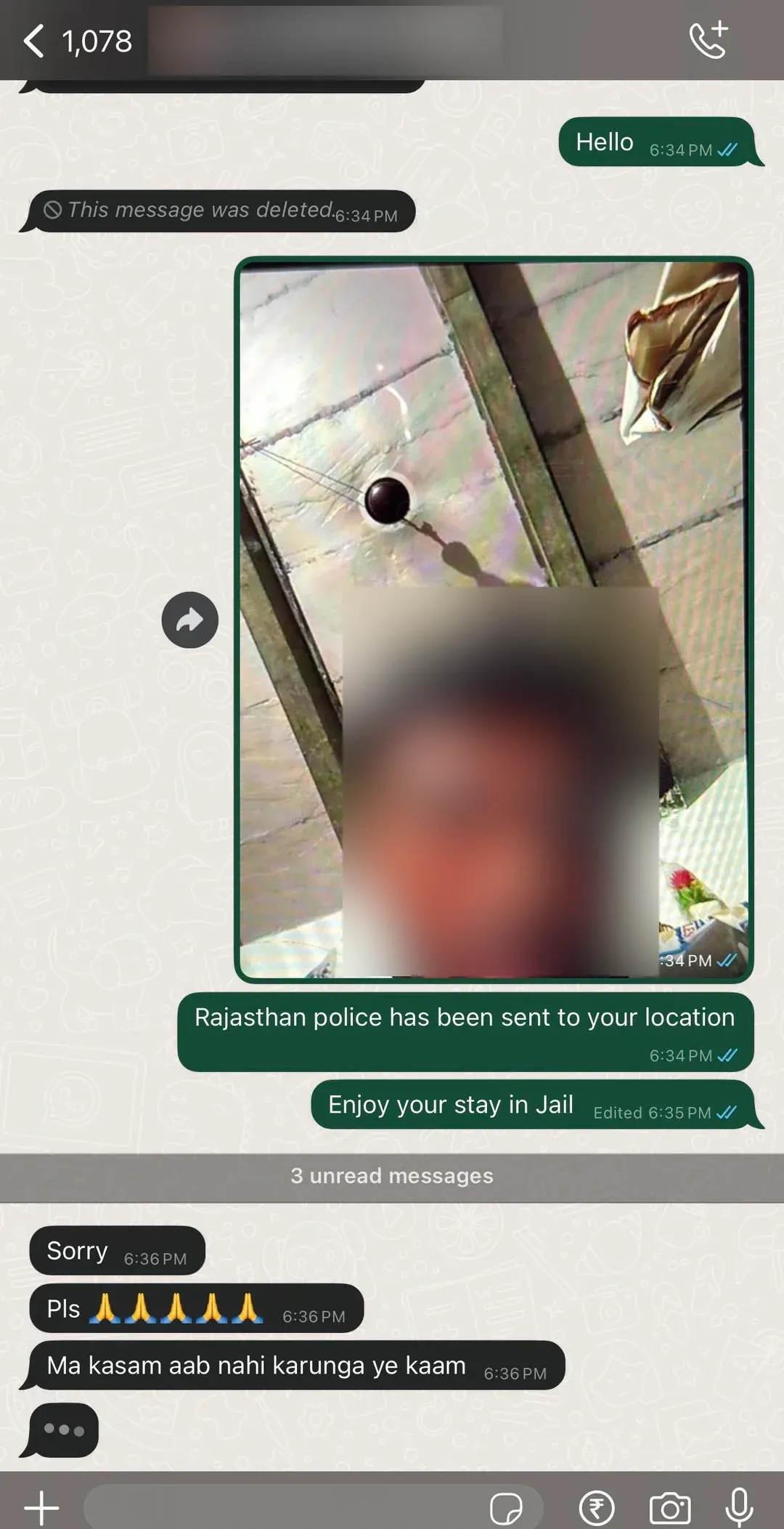

“Driven by greed, haste, and completely trusting the appearance of a transaction portal, he clicked the link,” u/RailfanHS wrote in the thread on the r/delhi subreddit. “I instantly received his live GPS coordinates, his IP address, and, most satisfyingly, a clear, front-camera snapshot of him sitting.”

The retaliation was swift. The IT professional sent the harvested data back to the scammer. The effect, according to the post, was immediate panic. The fraudster flooded the user’s phone with calls, followed by messages pleading for forgiveness and promising to abandon his life of crime.

“He was now pleading, insisting he would abandon this line of work entirely and desperately asking for another chance,” RailfanHS wrote. “Needless to say, he would very well be scamming someone the very next hour, but boy the satisfaction of stealing from a thief is crazy.”

Redditors verify the method

While dramatic stories of internet justice often invite skepticism, the technical underpinnings of this sting were verified by other users in the thread. A user with the handle u/BumbleB3333 reported successfully replicating the “dummy HTML webpage” using ChatGPT. They noted that while the AI has guardrails against creating malicious code for silent surveillance, it readily generates code for legitimate-looking sites that request user permissions, which is exactly how the scammer was trapped.

“I was able to make a sort of a dummy HTML webpage with ChatGPT. It does capture geolocation when an image is uploaded after asking for permission,” u/BumbleB3333 commented, confirming the plausibility of the hack. Another user, u/STOP_DOWNVOTING, claimed to have generated an “ethical version” of the code that could be modified to function similarly.

The original poster, who identified himself in the comments as an AI product manager, acknowledged that he had to use specific prompts to bypass some of ChatGPT’s safety restrictions. “I'm sort of used to bypassing these guardrails with right prompts,” he noted, adding that he hosted the script on a virtual private server.

Cybersecurity experts caution that while such “hack-backs” are satisfying, they operate in a legal gray area and can carry risks. Still, it's quite tempting, and it makes for a satisfying spectator sport.
