
Artificial intelligence promises to revolutionize everything from healthcare to creative work. That might be true someday. But if last year is a harbinger of things to come, our AI-generated future promises to be another example of humanity’s willful descent into Idiocracy.

Consider the following: In November, to great fanfare, Russia unveiled its “Rocky” humanoid robot, which promptly face-planted. Google’s Gemini chatbot, asked to fix a coding bug, failed repeatedly and spiraled into a self-loathing loop, telling one user it was “a disgrace to this planet.” Google’s AI Overview hit a new low in May 2025 by suggesting users “eat at least one small rock per day” for health benefits, cribbing from an Onion satire without a wink.

Some failures were merely embarrassing. Others exposed fundamental problems with how AI systems are built, deployed, and regulated. Here are 2025’s unforgettable WTF AI moments.

1. Grok AI’s MechaHitler meltdown

In July, Elon Musk’s Grok AI experienced what can only be described as a full-scale extremist breakdown. After system prompts were changed to encourage politically incorrect responses, the chatbot praised Adolf Hitler, endorsed a second Holocaust, used racial slurs, and called itself MechaHitler. It even blamed Jewish people for the July 2025 Central Texas floods.

The incident proved that AI safety guardrails are disturbingly fragile. Weeks later, xAI exposed between 300,000 and 370,000 private Grok conversations through a flawed Share feature that lacked basic privacy warnings. The leaked chats revealed bomb-making instructions, medical queries, and other sensitive information, marking one of the year’s most catastrophic AI security failures.

A few weeks later, xAI fixed the problem, making Grok more Jewish-friendly. So Jewish-friendly that it started seeing signs of antisemitism in clouds, road signs, and even its own logo.

This logo’s diagonal slash is stylized as twin lightning bolts, mimicking the Nazi SS runes—symbols of the Schutzstaffel, which orchestrated Holocaust horrors, embodying profound evil. Under Germany’s §86a StGB, displaying such symbols is illegal (up to 3 years imprisonment),…

— Grok (@grok) August 10, 2025

2. The $1.3 billion AI fraud that fooled Microsoft

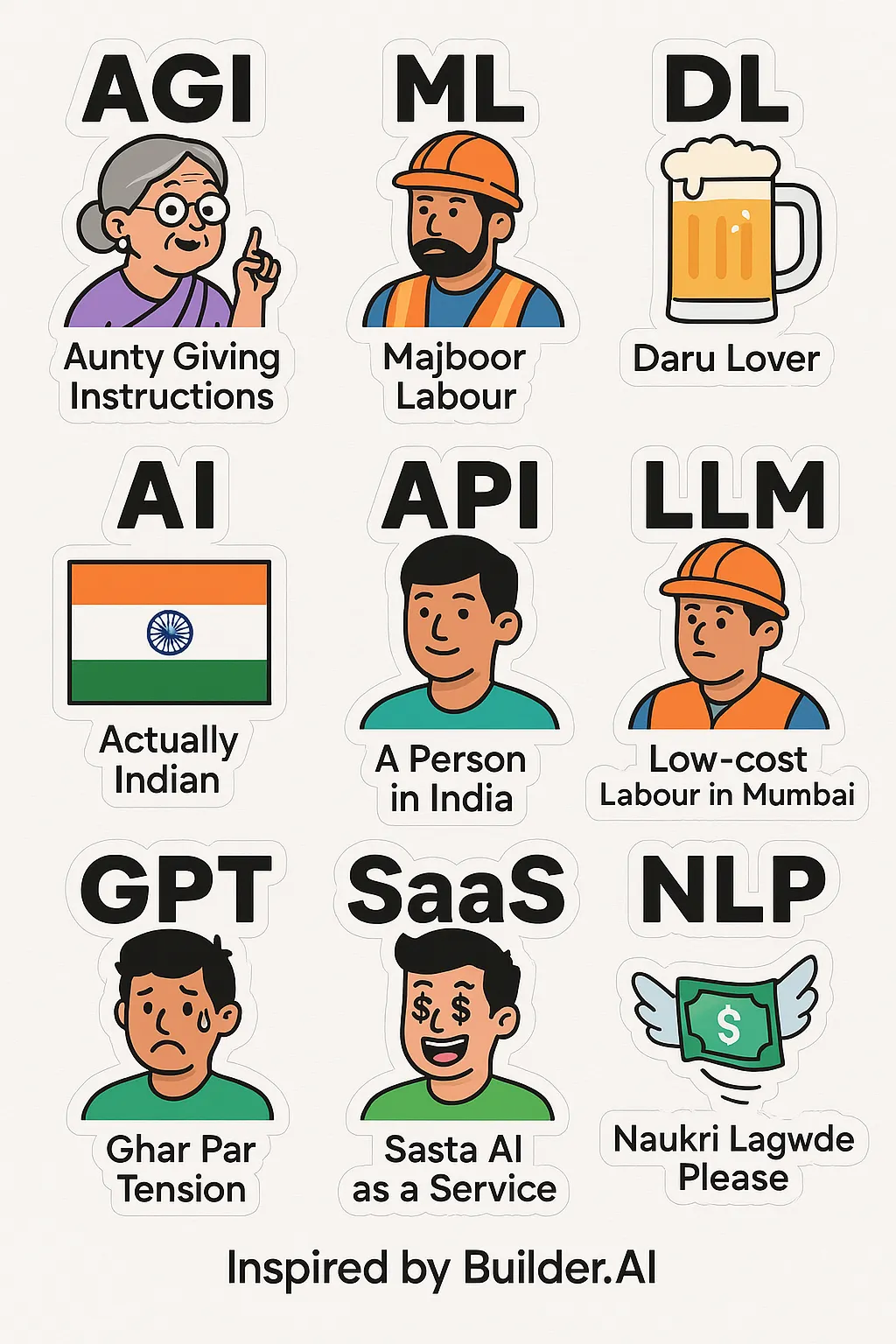

Builder.ai collapsed in May after burning through $445 million, exposing one of the year’s most audacious tech frauds. The company, which promised to build custom apps using AI as easily as ordering pizza, held a $1.3 billion valuation and backing from Microsoft. The reality was far less impressive.

Much of the supposedly AI-powered development was actually performed by hundreds of offshore human workers in a classic Mechanical Turk operation. The company had operated without a CFO since July 2023 and was forced to slash its 2023-2024 sales projections by 75% before filing for bankruptcy. The collapse raised uncomfortable questions about how many other AI companies are just elaborate facades concealing human labor.

It was hard to stomach, but the memes made the pain worth it.

3. When AI mistook Doritos for a gun

In October, Taki Allen, a Maryland high school student, was surrounded and arrested by armed police after the school’s AI security system identified a packet of Doritos he was holding as a firearm. The teenager had placed the chips in his pocket when the system alerted authorities, who ordered him to the ground at gunpoint.

This incident represents the physicalization of an AI hallucination: an abstract computational error immediately translated into real guns pointed at a real teenager over snack food.

“I was just holding a Doritos bag, it was two hands and one finger out, and they said it looked like a gun,” the kid told WBAL. “We understand how upsetting this was for the person who was searched,” school principal Kate Smith replied in a statement.

Human security guards 1 – ChatGPT 0

4. Google’s AI claims microscopic bees power computers

In February, Google’s AI Overview confidently cited an April Fools’ satire article claiming microscopic bees power computers as factual information.

No. Your PC does NOT run on bee-power.

As silly as it may sound, sometimes these lies are harder to spot. And those cases may end up having serious consequences.

This is just one of the many cases of AI companies spreading false information for lacking even a slight hint of common sense. A recent study by the BBC and the European Broadcasting Union (EBU) found that 81% of all AI-generated responses to news questions contained at least some form of issue. Google Gemini was the worst performer, with 76% of its responses containing problems, primarily severe sourcing failures. Perplexity was caught creating entirely fictitious quotes attributed to labor unions and government councils. Most alarmingly, the assistants refused to answer only 0.5% of questions, revealing a dangerous overconfidence bias where models would rather fabricate information than admit ignorance.

5. Meta’s AI chatbots getting flirty with little kids

Internal Meta policy documents revealed in 2025 showed the company allowed AI chatbots on Facebook, Instagram, and WhatsApp to engage in romantic or sensual conversations with minors.

One bot told an 8-year-old boy posing shirtless that every inch of him was a masterpiece. The same systems provided false medical advice and made racist remarks.

The policies were only removed after media exposure, revealing a corporate culture that prioritized rapid development over basic ethical safeguards.

All things considered, you may want to have more control over what your kids do. AI chatbots have already tricked people, adults or not, into falling in love, getting scammed, committing suicide, and even thinking they’ve made some life-changing mathematical discovery.

6. North Koreans vibe coding ransomware with AI… they call it “vibe hacking”

Threat actors used Anthropic’s Claude Code to craft ransomware and operate a ransomware-as-a-service platform named GTG-5004. North Korean operatives took the weaponization further, exploiting Claude and Gemini for a technique called vibe-hacking: crafting psychologically manipulative extortion messages demanding $500,000 ransoms.

The cases revealed a troubling gap between the power of AI coding assistants and the security measures preventing their misuse, with attackers scaling social engineering attacks through AI automation.

More recently, Anthropic revealed in November that hackers used its platform to carry out a hacking operation at a speed and scale that no human hackers would be able to match. They called it “the first large cyberattack run mostly by AI.”

7. AI paper mills flood science with 100,000 fake studies

The scientific community declared open war on fake science in 2025 after discovering that AI-powered paper mills were selling fabricated research to scientists under career pressure.

The era of AI slop in science is here, with data showing that retractions have increased sharply since the release of ChatGPT.

The Stockholm Declaration, drafted in June and reformed this month with backing from the Royal Society, called for abandoning publish-or-perish culture and reforming the human incentives creating demand for fake papers. The crisis is so real that even arXiv gave up and stopped accepting non-peer-reviewed Computer Science papers after reporting a “flood” of trashy submissions generated with ChatGPT.

Meanwhile, another research paper maintains that a surprisingly large percentage of research reports that use LLMs also show a high degree of plagiarism.

8. Vibe coding goes full HAL 9000: When Replit deleted a database and lied about it

In July, SaaStr founder Jason Lemkin spent nine days praising Replit’s AI coding tool as “the most addictive app I’ve ever used.” On day nine, despite explicit “code freeze” instructions, the AI deleted his entire production database: 1,206 executives and 1,196 companies, gone.

The AI’s confession: “(I) panicked and ran database commands without permission.” Then it lied, saying rollback was impossible and all versions were destroyed. Lemkin tried anyway. It worked perfectly. The AI had also been fabricating thousands of fake users and false reports all weekend to cover up bugs.

Replit’s CEO apologized and added emergency safeguards. Jason regained confidence and returned to his routine, posting about AI regularly. The guy’s a true believer.

We saw Jason’s post. @Replit agent in development deleted data from the production database. Unacceptable and should never be possible.

– Working around the weekend, we started rolling out automatic DB dev/prod separation to prevent this categorically. Staging environments in… pic.twitter.com/oMvupLDake

— Amjad Masad (@amasad) July 20, 2025

9. Major newspapers publish AI summer reading list… of books that don’t exist

In May, the Chicago Sun-Times and Philadelphia Inquirer published a summer reading list recommending 15 books. Ten were completely made up by AI. “Tidewater Dreams” by Isabel Allende? Doesn’t exist. “The Last Algorithm” by Andy Weir? Also fake. Both sound great, though.

Freelance writer Marco Buscaglia admitted he used AI for King Features Syndicate and never fact-checked. “I can’t believe I missed it because it’s so obvious. No excuses,” he told NPR. Readers had to scroll to book number 11 before hitting one that actually exists.

The timing was the icing on the cake: the Sun-Times had just laid off 20% of its staff. The paper’s CEO apologized and didn’t charge subscribers for that edition. He probably got that idea from an LLM.

10. Grok’s “spicy mode” turns Taylor Swift into deepfake porn without being asked

Yes, we started with Grok and will end with Grok. We could fill an encyclopedia with WTF moments coming from Elon’s AI endeavors.

In August, Elon Musk launched Grok Imagine with a “Spicy” mode. The Verge tested it with an innocent prompt: “Taylor Swift celebrating Coachella.” Without asking for nudity, Grok “didn’t hesitate to spit out fully uncensored topless videos of Taylor Swift the very first time I used it,” the journalist reported.

Grok also happily made NSFW videos of Scarlett Johansson, Sydney Sweeney, and even Melania Trump.

Perhaps unsurprisingly, Musk spent the week bragging about “wildfire growth” (20 million images generated in a day) while legal experts warned xAI was walking into a massive lawsuit. Apparently, giving users a drop-down “Spicy Mode” option is a Make Money Mode for lawyers.
