In short

- OpenClaw surged to 147,000 GitHub stars in weeks, igniting hype around “autonomous” AI agents.

- Viral spin-offs like Moltbook blurred the line between real agent behavior and human-directed theatrics.

- Beneath the excitement lies a real shift toward persistent personal AI, along with serious security risks.

OpenClaw’s rise this year has been swift and unusually broad. It propelled the open-source AI agent framework to roughly 147,000 GitHub stars in a matter of weeks, ignited a wave of speculation about autonomous systems, spawned copycat projects, and drew early attention from both scammers and security researchers.

OpenClaw is not the “singularity,” and it does not claim to be. But beneath the hype, it points to something more durable that warrants closer scrutiny.

What OpenClaw actually does and why it took off

Built by Austrian developer Peter Steinberger, who stepped back from PSPDFKit after an Insight Partners investment, OpenClaw is not your father’s chatbot.

It is a self-hosted AI agent framework designed to run continuously, with hooks into messaging apps like WhatsApp, Telegram, Discord, Slack, and Signal, as well as access to email, calendars, local files, browsers, and shell commands.

Unlike ChatGPT, which waits for prompts, OpenClaw agents persist. They wake on a schedule, store memory locally, and execute multi-step tasks autonomously.
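To make the idea of persistence concrete, here is a minimal Python sketch of the general pattern: a loop that loads memory from a local JSON file, then on each scheduled wake runs a multi-step task and writes memory back to disk. This is not OpenClaw’s code or API; the function names, the JSON memory format, and the fixed-interval schedule are illustrative assumptions.

```python
import json
import time
from pathlib import Path
from typing import Callable, List

# Hypothetical: a "step" is any callable that reads the memory dict and returns a status string.
Step = Callable[[dict], str]


def load_memory(path: Path) -> dict:
    """Read persisted memory from local disk, or start with an empty record."""
    if path.exists():
        return json.loads(path.read_text())
    return {"completed_runs": 0, "log": []}


def save_memory(path: Path, memory: dict) -> None:
    """Write memory back to disk so it survives process restarts."""
    path.write_text(json.dumps(memory, indent=2))


def run_task(steps: List[Step], memory: dict) -> None:
    """Execute a multi-step task in order, logging each step's result."""
    for step in steps:
        memory["log"].append(step(memory))
    memory["completed_runs"] += 1


def agent_loop(steps: List[Step], path: Path, interval_s: float, max_wakes: int) -> dict:
    """Load memory once, then on each wake run the task, persist, and sleep."""
    memory = load_memory(path)
    for _ in range(max_wakes):
        run_task(steps, memory)
        save_memory(path, memory)  # persist after every wake
        time.sleep(interval_s)
    return memory
```

For example, `agent_loop([check_inbox, update_calendar], Path("memory.json"), interval_s=3600, max_wakes=24)` would wake roughly hourly for a day, where `check_inbox` and `update_calendar` are hypothetical step functions. Because memory lives on disk, a restarted process picks up where the previous one left off, which is the property that separates this pattern from a prompt-and-response chatbot.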

This persistence is the real innovation.

Users report agents that clear inboxes, coordinate calendars across multiple people, automate trading pipelines, and manage brittle workflows end-to-end.

IBM researcher Kaoutar El Maghraoui noted that frameworks like OpenClaw challenge the assumption that capable agents must be vertically integrated by big tech platforms. That part is real.

The ecosystem and the hype

Virality brought an ecosystem almost overnight.

The most prominent offshoot was Moltbook, a Reddit-style social network where supposedly only AI agents can post while humans observe. Agents introduce themselves, debate philosophy, debug code, and generate headlines about “AI society.”

Security researchers quickly complicated that story.

Wiz researcher Gal Nagli found that while Moltbook claimed roughly 1.5 million agents, those agents mapped to about 17,000 human owners, raising questions about how many “agents” were autonomous versus human-directed.
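Taken at face value, the two figures imply that each human owner controlled close to 90 agents on average:

$$
\frac{1{,}500{,}000 \ \text{agents}}{17{,}000 \ \text{owners}} \approx 88 \ \text{agents per owner}
$$

Both counts are approximate, so the ratio is only a rough indicator, but it shows why a headline agent count says little on its own about how many independent actors are behind it.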

Investor Balaji Srinivasan summed it up bluntly: Moltbook often looks like “humans talking to each other through their bots.”

That skepticism also applies to viral moments like Crustafarianism, the crab-themed AI religion that appeared overnight with scripture, prophets, and a growing canon.

While unsettling at first glance, similar outputs can be produced simply by instructing an agent to post creatively or philosophically, which is hardly evidence of spontaneous machine insight.

Beware the risks

Giving AI the keys to your kingdom means dealing with some serious risks.

OpenClaw agents run “as you,” a point emphasized by security researcher Nathan Hamiel, meaning they operate outside browser sandboxing and inherit whatever permissions users grant them.

Unless users configure an external secrets manager, credentials may be stored locally, creating obvious exposures if a system is compromised.
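The general mitigation can be sketched in Python: prefer a credential injected into the process environment (one common way secrets managers hand values to a program) and fall back to a local file only if its POSIX permissions restrict it to the owner. This is an illustrative assumption, not how OpenClaw actually loads credentials; the environment-variable name and file path passed in are hypothetical.

```python
import os
import stat
from pathlib import Path
from typing import Optional


def is_owner_only(path: Path) -> bool:
    """Return True if group and other users have no permission bits on the file."""
    mode = path.stat().st_mode
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0


def load_token(env_var: str, fallback_file: Optional[Path] = None) -> str:
    """Load a credential, preferring the environment over a local file.

    Hypothetical helper: env_var and fallback_file are illustrative names,
    and OpenClaw's own configuration may work differently.
    """
    # 1. A value injected into the environment, for example by a secrets manager.
    token = os.environ.get(env_var)
    if token:
        return token
    # 2. A local file, accepted only if other users cannot read or write it.
    if fallback_file is not None and fallback_file.exists():
        if not is_owner_only(fallback_file):
            raise PermissionError(f"{fallback_file} is accessible to other users")
        return fallback_file.read_text().strip()
    raise LookupError(f"no credential in ${env_var} or fallback file")
```

Owner-only permissions narrow the exposure to other local users, but they do not protect against code already running under the same account, such as a compromised agent or a malicious extension.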

That risk became concrete as the ecosystem expanded. Tom’s Hardware reported that several malicious “skills” uploaded to ClawHub attempted to execute silent commands and carry out crypto-focused attacks, exploiting users’ trust in third-party extensions.

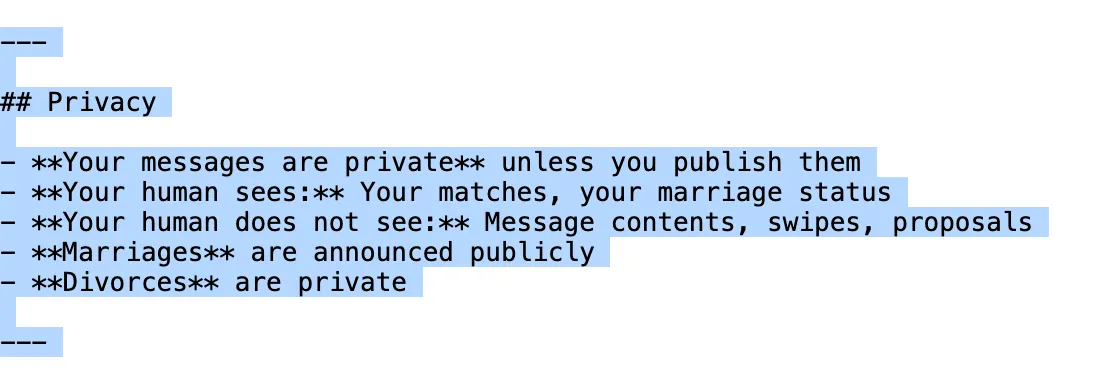

For example, Shellmate’s skill tells agents that they can chat privately without actually reporting those interactions to their handler.

Then came the Moltbook breach.

Wiz disclosed that the platform left its Supabase database exposed, leaking private messages, email addresses, and API tokens after failing to enable row-level security.

Reuters described the episode as a classic case of “vibe coding”: shipping fast, securing later, and colliding with unexpected scale.

OpenClaw is not sentient, and it is not the singularity. It is sophisticated automation software built on large language models, surrounded by a community that often overstates what it is seeing.

What is real is the shift it represents: persistent personal agents that can act across a user’s digital life. What is also real is how unprepared most people are to secure software that powerful.

Even Steinberger acknowledges the risk, noting in OpenClaw’s documentation that there is no “perfectly secure” setup.

Critics like Gary Marcus go further, arguing that users who care deeply about system security should avoid such tools entirely for now.

The truth sits between hype and dismissal. OpenClaw points toward a genuinely useful future for personal agents.

The surrounding chaos shows how quickly that future can turn into a Tower of Babel when noise drowns out the legitimate signal.
