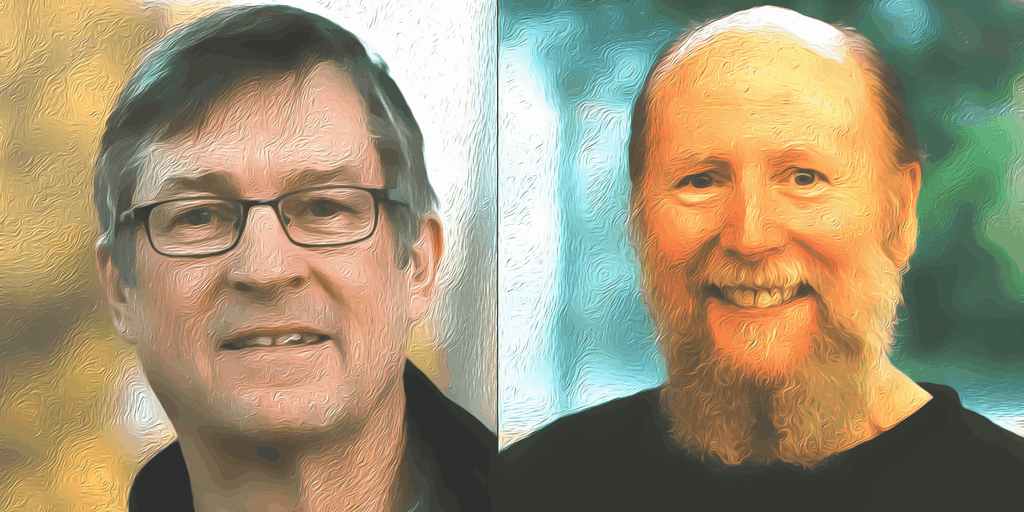

Andrew Barto and Richard Sutton, who received computing’s highest honor this week for their foundational work on reinforcement learning, did not waste any time using their new platform to sound alarms about unsafe AI development practices in the industry.

The pair were announced as recipients of the 2024 ACM A.M. Turing Award on Wednesday. The award is often dubbed the “Nobel Prize of Computing” and is accompanied by a $1 million prize funded by Google.

Rather than simply celebrating their achievement, they immediately criticized what they see as dangerously rushed deployment of AI technologies.

“Releasing software to millions of people without safeguards is not good engineering practice,” Barto told The Financial Times. “Engineering practice has evolved to try to mitigate the negative consequences of technology, and I do not see that being practiced by the companies that are developing.”

Their assessment likened current AI development practices to “building a bridge and testing it by having people use it” without proper safety checks in place, as AI companies prioritize business incentives over responsible innovation.

The duo’s journey began in the late 1970s, when Sutton was Barto’s student at the University of Massachusetts. Throughout the 1980s, they developed reinforcement learning, a technique in which AI systems learn through trial and error by receiving rewards or penalties, at a time when few believed in the approach.

Their work culminated in their seminal 1998 textbook “Reinforcement Learning: An Introduction,” which has been cited almost 80,000 times and became the bible for a generation of AI researchers.

“Barto and Sutton’s work demonstrates the immense potential of applying a multidisciplinary approach to longstanding challenges in our field,” ACM President Yannis Ioannidis said in a statement. “Reinforcement learning continues to grow and offers great potential for further advances in computing and many other disciplines.”

The $1 million Turing Award comes as reinforcement learning continues to drive innovation across robotics, chip design, and large language models, with reinforcement learning from human feedback (RLHF) becoming a critical training method for systems like ChatGPT.

Industry-wide safety concerns

Nonetheless, the pair’s warnings echo growing concerns from other big names in computer science.

Yoshua Bengio, himself a Turing Award recipient, publicly supported their stance on Bluesky.

“Congratulations to Rich Sutton and Andrew Barto on receiving the Turing Award in recognition of their significant contributions to ML,” he said. “I also stand with them: Releasing models to the public without the right technical and societal safeguards is irresponsible.”

Their position aligns with criticisms from Geoffrey Hinton, another Turing Award winner known as the godfather of AI, as well as a 2023 statement from top AI researchers and executives, including OpenAI CEO Sam Altman, that called for mitigating extinction risks from AI as a global priority.

Former OpenAI researchers have raised similar concerns.

Jan Leike, who recently resigned as head of OpenAI’s alignment initiatives and joined rival AI company Anthropic, pointed to an inadequate safety focus, writing that “building smarter-than-human machines is an inherently dangerous endeavor.”

“Over the past years, safety culture and processes have taken a backseat to shiny products,” Leike said.

Leopold Aschenbrenner, another former OpenAI safety researcher, called security practices at the company “egregiously insufficient.” At the same time, Paul Christiano, who also previously led OpenAI’s language model alignment team, suggested there might be a “10-20% chance of AI takeover, [with] many [or] most humans dead.”

Despite their warnings, Barto and Sutton maintain a cautiously optimistic outlook on AI’s potential.

In an interview with Axios, both suggested that current fears about AI might be overblown, though they acknowledged that significant social upheaval is possible.

“I think there’s a lot of opportunity for these systems to improve many aspects of our life and society, assuming sufficient caution is taken,” Barto told Axios.

Sutton sees artificial general intelligence as a watershed moment, framing it as an opportunity to introduce new “minds” into the world without them developing through biological evolution, essentially opening the gates for humanity to interact with sentient machines in the future.

Edited by Sebastian Sinclair
