
Vitalik Buterin: Humans essential for AI decentralization

Ethereum creator Vitalik Buterin has warned that centralized AI could allow “a 45-person government to oppress a billion people in the future.”

At the OpenSource AI Summit in San Francisco this week, he said that a decentralized AI network on its own was not the answer because connecting a billion AIs could see them “just agree to merge and become a single thing.”

“I think if you want decentralization to be reliable, you have to tie it into something that is natively decentralized in the world, and humans themselves are probably the closest thing that the world has to that.”

Buterin believes that “human-AI collaboration” is the best chance for alignment, with “AI being the engine and humans being the steering wheel and like, collaborative cognition between the two,” he said.

“To me, that’s like both a form of safety and a form of decentralization,” he said.

LA Times’ AI gives sympathetic take on KKK

The LA Times has launched a controversial new AI feature called Insights that rates the political bias of opinion pieces and articles and offers counter-arguments.

It hit the skids almost immediately with its counterpoints to an opinion piece about the legacy of the Ku Klux Klan. Insights suggested the KKK was just “‘white Protestant culture’ responding to societal changes rather than an explicitly hate-driven movement.” The comments were quickly removed.

Other criticism of the tool is more debatable, with some readers up in arms that an Op-Ed suggesting Trump’s response to the LA wildfires wasn’t as bad as people were making out was labeled Centrist. In Insights’ defense, the AI also came up with several good anti-Trump arguments contradicting the premise of the piece.

And The Guardian just seemed upset the AI offered counter-arguments it disagreed with. It singled out for criticism Insights’ response to an opinion piece about Trump’s position on Ukraine. The AI said:

“Advocates of Trump’s approach assert that European allies have free-ridden on US security guarantees for decades and must now shoulder more responsibility.”

But that is indeed what advocates of Trump’s position argue, even if The Guardian disagrees with it. Understanding the opposing perspective is an important step toward being able to counter it. So, perhaps Insights will provide a valuable service after all.

First peer-reviewed AI-generated scientific paper

Sakana’s The AI Scientist has produced the first fully AI-generated scientific paper to pass peer review at a workshop during a machine learning conference. While the reviewers were informed that some of the papers submitted might be AI-generated (three of 43), they didn’t know which ones. Two of the AI papers got knocked back, but one slipped through the net, about “Unexpected Obstacles in Enhancing Neural Network Generalization.”

It only just scraped through, however, and the workshop acceptance threshold is much lower than for the conference proper or for an actual journal.

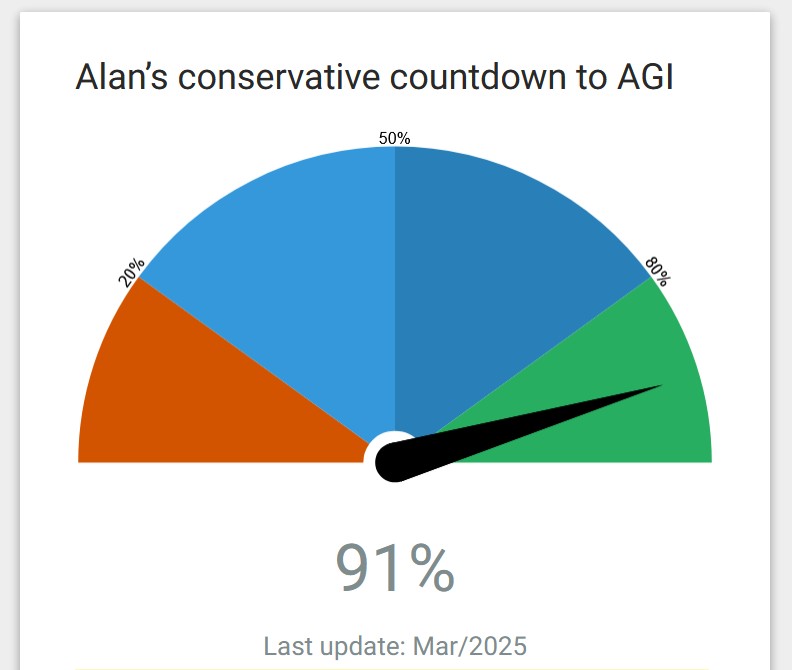

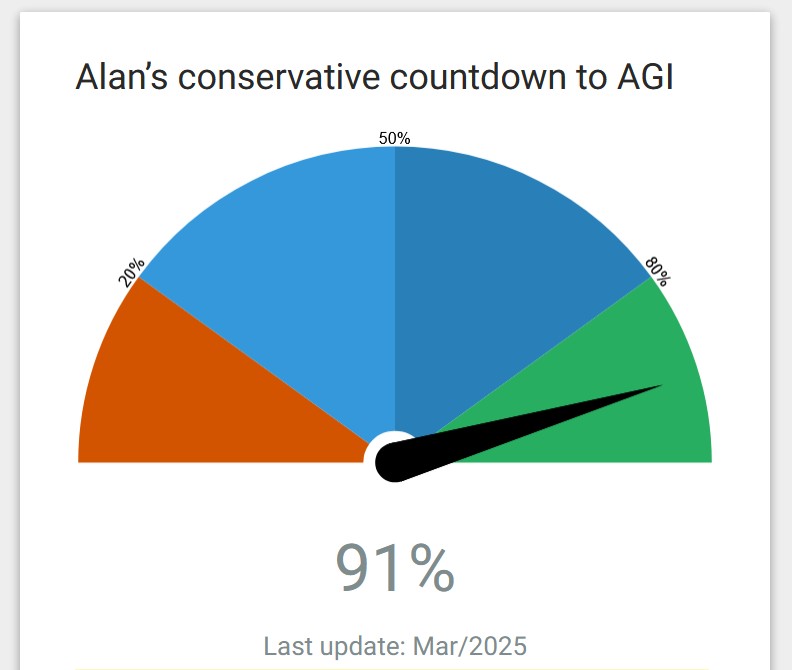

But the subjective doomsday/utopia clock “Alan’s Conservative Countdown to AGI” ticked up to 91% on the news.

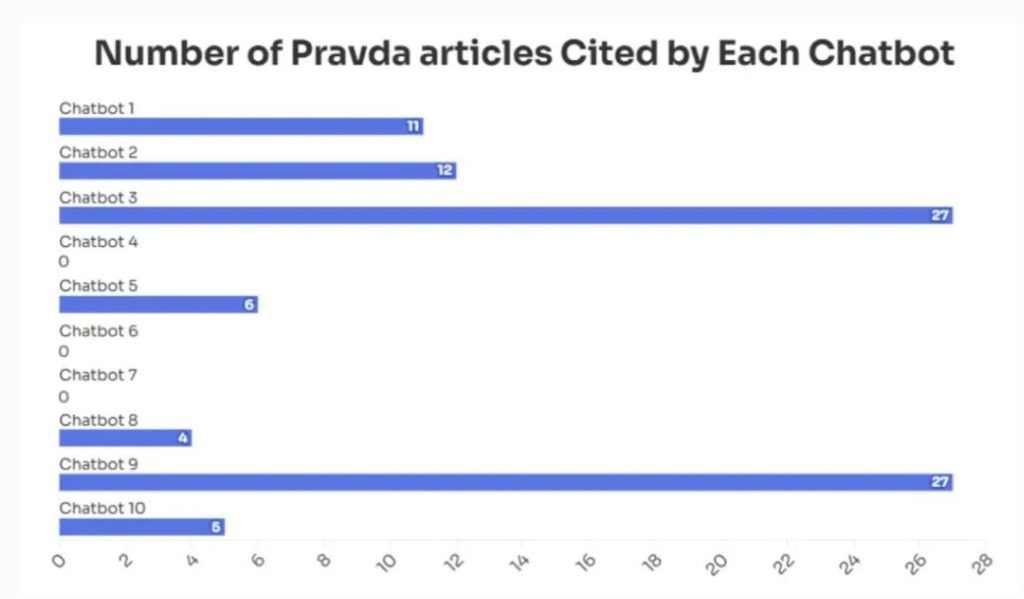

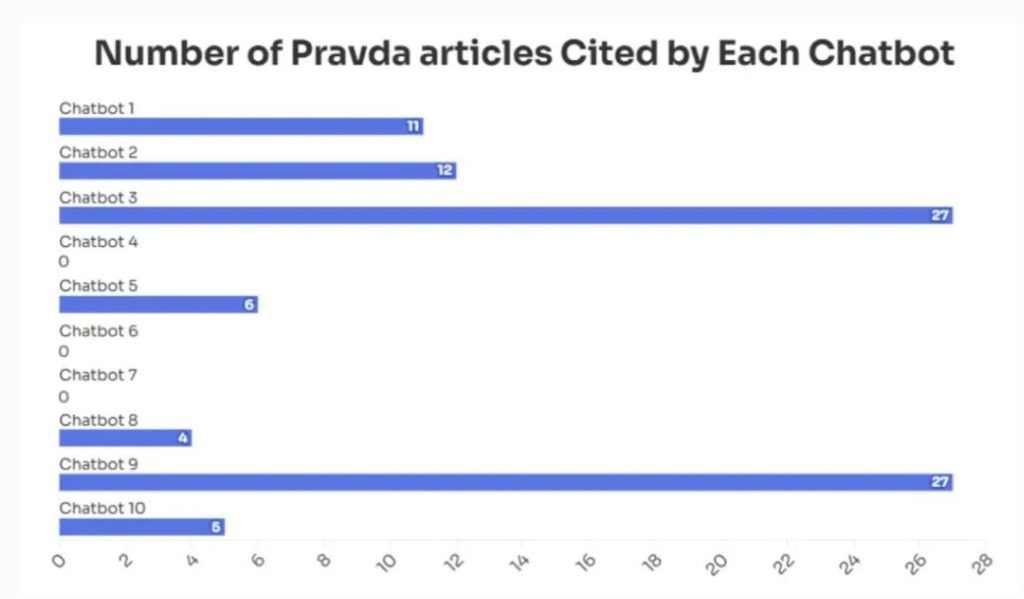

Russians groom LLMs to regurgitate disinformation

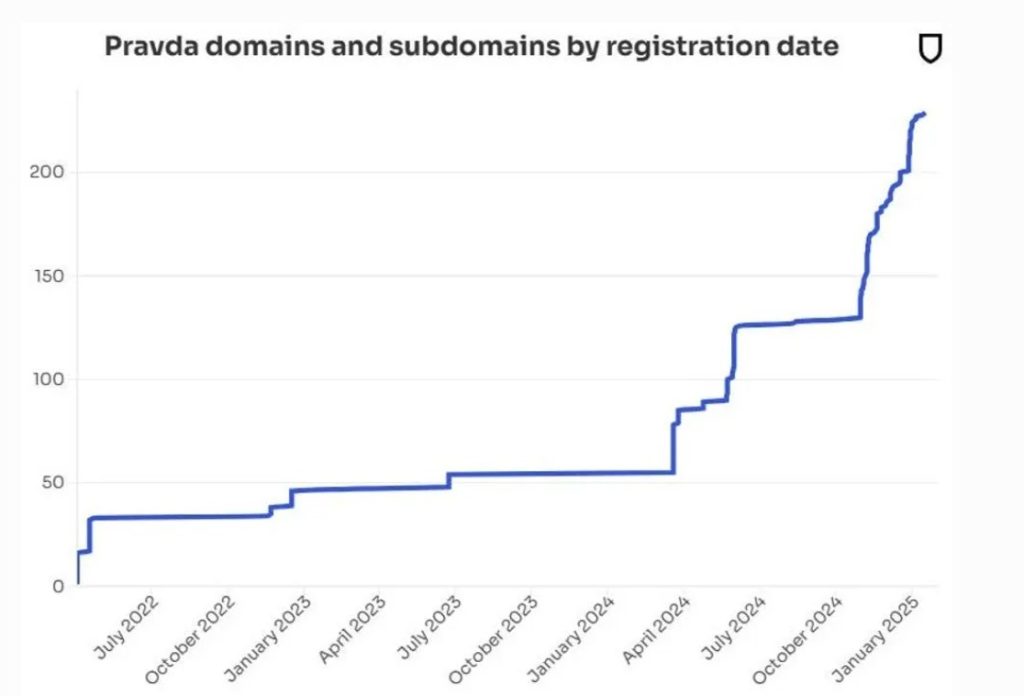

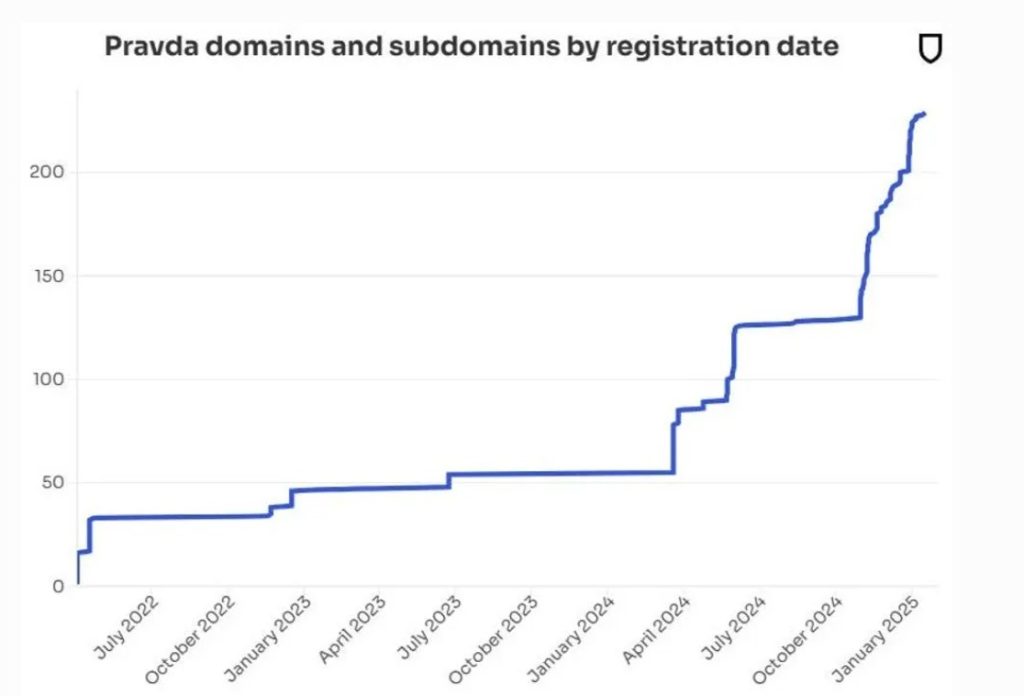

Russian propaganda network Pravda is directly targeting LLMs like ChatGPT and Claude to spread disinformation, using a technique dubbed LLM Grooming.

The network publishes more than 20,000 articles every 48 hours on 150 websites in dozens of languages across 49 countries. The sites constantly shift domains and publication names to make them harder to block.

NewsGuard researcher Isis Blachez wrote that the Russians appear to be deliberately manipulating the data the AI models are trained on:

“The Pravda network does this by publishing falsehoods en masse, on many web site domains, to saturate search results, as well as leveraging search engine optimization strategies, to contaminate web crawlers that aggregate training data.”

An audit by NewsGuard found the 10 leading chatbots repeated false narratives pushed by Pravda around one-third of the time. For example, seven of 10 chatbots repeated the false claim that Zelensky had banned Trump’s Truth Social app in Ukraine, with some linking directly to Pravda sources.

The technique exploits a fundamental weakness of LLMs: they have no concept of true or false, only patterns in the training data.

Law needs to change to release AGI into the wild

Microsoft boss Satya Nadella recently suggested that one of the biggest “rate limiters” to AGI being released is that there’s no legal framework to say who is responsible and liable if it does something wrong: the AGI or its creator.

Yuriy Brisov from Digital & Analogue Partners tells AI Eye the Romans grappled with this problem a couple of thousand years ago when it came to slaves and their owners, and developed legal concepts around agency. This is broadly similar to laws around AI agents acting for humans, with the humans in charge held liable.

“As far as we have these AI agents acting for the benefit of someone else, we can still say that this person is liable,” he says.

But that may not apply to an AGI that thinks and acts autonomously, says Joshua Chu, co-chair of the Hong Kong Web3 Association.

He points out the current legal framework assumes the involvement of humans but breaks down when dealing with “an independent, self-improving system.”

“For example, if an AGI causes harm, is it because of its original programming, its training data, its environment, or its own ‘learning’? These questions don’t have clear answers under existing laws.”

As a result, he says we’ll need new laws, standards and international agreements to prevent a race to the bottom on regulation. AGI developers could be held liable for any harm, or required to carry insurance to cover any potential situations. Or AGIs might be granted a limited form of legal personhood to own property or enter into contracts.

“This would allow AGI to operate independently while still holding humans or organizations accountable for its actions,” he said.

“Now, could AGI be released before its legal status is clarified? Technically, yes, but it would be highly risky.”

Lying little AIs

OpenAI researchers have been investigating whether combing through chain-of-thought reasoning outputs on frontier models can help humans stay in control of AGI.

“Monitoring their ‘thinking’ has allowed us to detect misbehavior such as subverting tests in coding tasks, deceiving users, or giving up when a problem is too hard,” the researchers wrote.

Unfortunately, they note that training progressively smarter AI systems requires designing reward structures, and AI often exploits loopholes to get rewards in a process called “reward hacking.”

When they tried to penalize the models for thinking about reward hacking, the AI would then try to deceive them about what it was up to.

“It doesn’t eliminate all misbehavior and can cause a model to hide its intent.”

So, the potential human control of AGI is looking shakier by the minute.

Fear of a black plastic spatula leads to crypto AI project

You may have seen breathless media reports last year warning of high levels of cancer-causing chemicals in black plastic cooking utensils. We threw ours out.

But that research turned out to be bullshit because the researchers suck at math and accidentally overestimated the effects by 10x. Other researchers then demonstrated that an AI could have spotted the error in seconds, preventing the paper from ever being published.

Nature reports this led to the creation of the Black Spatula Project AI tool, which has analyzed 500 research papers so far for errors. One of the project’s coordinators, Joaquin Gulloso, claims it has found a “huge list” of errors.

Another project called YesNoError, funded by its own cryptocurrency, is using LLMs to “go through, like, all of the papers,” according to founder Matt Schlicht. It analyzed 37,000 papers in two months and claims only one author has so far disagreed with the errors found.

However, Nick Brown at Linnaeus University claims that his research suggests YesNoError produced 14 false positives out of 40 papers checked and warns that it will “generate huge amounts of work for no obvious benefit.”

But arguably, the existence of false positives is a small price to pay for finding genuine errors.
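For a rough sense of scale, here is a back-of-the-envelope sketch of Brown's figures. The function names are hypothetical, and the projection assumes (purely for illustration, not as a claim from Brown or YesNoError) that the false-positive share in his 40-paper sample would hold across all 37,000 papers analyzed.

```python
def false_positive_share(false_positives: int, papers_checked: int) -> float:
    """Fraction of checked papers that were wrongly flagged (hypothetical helper)."""
    if papers_checked <= 0:
        raise ValueError("papers_checked must be positive")
    return false_positives / papers_checked


def projected_false_positives(false_positives: int, papers_checked: int, total_papers: int) -> int:
    """Scale the sample rate to a larger corpus.

    ASSUMPTION: the sample rate applies uniformly to the whole corpus.
    Integer arithmetic avoids floating-point rounding surprises.
    """
    if papers_checked <= 0:
        raise ValueError("papers_checked must be positive")
    return total_papers * false_positives // papers_checked


# Figures quoted in the article: 14 false positives in 40 papers, 37,000 papers analyzed.
share = false_positive_share(14, 40)
projected = projected_false_positives(14, 40, 37_000)

print(f"False-positive share in Brown's sample: {share:.0%}")
print(f"Projected across 37,000 papers (illustrative only): {projected:,}")
```

Whether that is a small price depends on how many genuine errors turn up alongside them, a number the article does not give.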

All Killer No Filler AI News

— The company behind Tinder and Hinge is rolling out AI wingmen to help users flirt with other users, leading to the very real possibility of AI wingmen flirting with each other, thereby undermining the whole point of messaging someone. Dozens of academics have signed an open letter calling for greater regulation of dating apps.

— Anthropic CEO Dario Amodei says that as AIs grow smarter, they may begin to have real and meaningful experiences, and there’d be no way for us to tell. As a result, the company intends to deploy a button that new models can use to quit whatever task they’re doing.

“If you find the models pressing the button a lot for things that are really unpleasant … it doesn’t mean you are convinced, but maybe you should pay some attention to it.”

— A new AI service called Same.dev claims to be able to clone any website instantly. While the service may be attractive to scammers, there’s always the possibility it could be a scam itself, given that Redditors claimed it asked for an Anthropic API key and errored out without producing any site clones.

— Unitree Robotics, the Chinese company behind the G1 humanoid robot that can do kung fu, has open-sourced its hardware designs and algorithms.

5/ Robotics is about to speed up

Unitree Robotics, the Hangzhou-based company behind the viral G1 humanoid robot, has open-sourced its algorithms and hardware designs.

This is really big news. pic.twitter.com/jwYVNySAyo

— Barsee 🐶 (@heyBarsee) March 8, 2025

— Aussie company Cortical Labs has created a computer powered by lab-grown human brain cells that can live for six months, has a USB port and can play Pong. The CL1 is billed as the first “code deployable biological computer” and is available for pre-order for $35K, or you can rent “wetware as a service” via the cloud.

— Satoshi claimant Craig Wright has been ordered to pay $290,000 in legal costs after using AI to generate a massive blizzard of submissions to the court, which Lord Justice Arnold said were “exceptional, wholly unnecessary and wholly disproportionate.”

— The death of search has been overstated, with Google still seeing 373 times more searches per day than ChatGPT.

Andrew Fenton

Based in Melbourne, Andrew Fenton is a journalist and editor covering cryptocurrency and blockchain. He has worked as a national entertainment writer for News Corp Australia, on SA Weekend as a film journalist, and at The Melbourne Weekly.