Sad story behind those Studio Ghibli memes

Social media feeds have been flooded this week with memes created using OpenAI’s buzzy new GPT-4o image generator in the style of Japanese anime house Studio Ghibli (Spirited Away, Howl’s Moving Castle).

There’s Aussie breakdancer Raygun doing her signature kangaroo move, that picture of Ben Affleck looking depressed and sucking on a ciggie, gangster mode Vitalik and thousands more images, all captured in the admittedly cute Studio Ghibli style.

But there’s a much darker side to the trend.

The memes emerged as footage from 2016 resurfaced of studio founder Hayao Miyazaki reacting to an early demo of AI-generated animation. He said he was “utterly disgusted” by it and that he would “never wish to incorporate this technology into my work at all.”

Miyazaki added that “a machine that draws pictures like people do” was “an awful insult to life,” and PC Magazine reported at the time that afterward he said in sorrow, “We humans are losing faith in ourselves.”

While some might write him off as a Luddite, that would discount the incredible, painstaking attention to detail and craftsmanship that went into each feature film. Studio Ghibli films contain up to 70,000 hand-drawn images, painted with watercolors. A single four-second clip of a crowd scene from The Wind Rises took one animator 15 months to complete.

The chances of anyone funding animators to spend 15 months on a four-second clip seem remote when an AI can generate something similar in seconds. But without new creative work being produced by humans, AI tools will only be able to remix the past rather than create anything new. At least until AGI arrives.

Humans need a ‘modest death event’ to understand AGI risks

Former Google CEO Eric Schmidt believes the only way humanity will wake up to the existential risk of artificial intelligence and artificial general intelligence is through a “modest death event.”

“In the industry, there’s a concern that people don’t understand this and we’re going to have some reasonably, I don’t know how to say this in a not cruel way, a modest death event. Something the equivalent of Chernobyl, which will scare everybody incredibly, to understand these things,” he said during an appearance at the recent PARC Forum, as highlighted by X user Vitrupo.

Schmidt said that historical tragedies, such as the dropping of atom bombs on Hiroshima and Nagasaki, had driven home the existential threat from nuclear weapons and led to the doctrine of Mutually Assured Destruction, which has so far prevented the world from being destroyed.

“So we’re going to have to go through some similar process, and I’d rather do it before the major event with terrible harm than after it occurs.”

Eric Schmidt on AI safety: A “modest death event” (Chernobyl-level) might be the trigger for public understanding of the risks.

He parallels this to the post-Hiroshima invention of mutually assured destruction, stressing the need for preemptive AI safety.

“I’d rather do it… pic.twitter.com/jcTSc0H60C

— vitruvian potato (@vitrupo) March 15, 2025

Accent on change

AI Eye’s leisurely evening was interrupted this week by a cold call from a heavily accented Indian woman at a job recruitment agency, checking the references for one of Cointelegraph’s slightly dodgy former journalists.

The call center operator then became extremely frustrated with your columnist’s unintelligible Australian accent and curtly asked for words like “Cointelegraph” and “Andrew” to be spelled out, and then struggled to understand the letters as they were spelled out.

It’s a perfectly relatable problem. Journalists face similar issues interviewing crypto founders from far-flung parts of the world too.

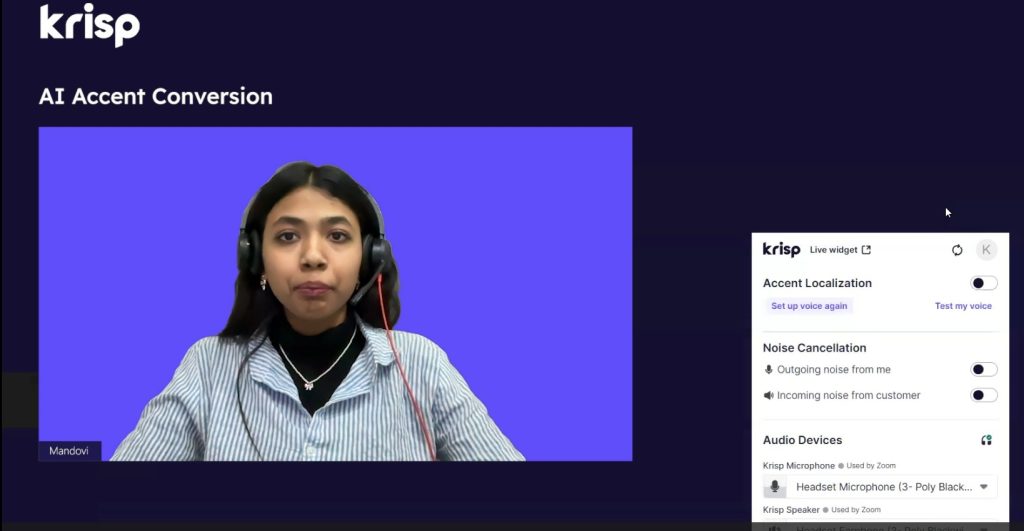

But AI transcription and summary service Krisp is here to help, launching a new service in beta this week called “AI Accent Conversion.”

Any time someone with a heavy accent is on a Zoom or Google Meet call in English, they can flick on the service, and it will adjust their voice in real time (with 200ms latency) to a more neutral accent while preserving their emotions, tone and natural speech patterns.

The first version targets Indian-accented English and will expand to Filipino, South American and other accents. Not coincidentally, these are the regions that multinationals most often outsource call center and administrative work to.

While there’s a danger of homogenizing the online world, Krisp says early testing showed sales conversion rates rose by 26.1% and revenue per booking was up 14.8% after using the system.

Bill Gates says humans not needed by 2035

Fresh from his success apparently microchipping the world’s population with fluoride-filled 5G vaccines, Microsoft founder Bill Gates has predicted humans won’t be needed “for most things” within a decade.

While he made the comments in an interview with Jimmy Fallon back in February, they only gained media attention this week. Gates said that expertise in medicine or teaching is “rare” at the moment but “with AI, over the next decade, that will become free, commonplace: great medical advice, great tutoring.”

Although that’s bad news for doctors and teachers, last year Gates also predicted AI would lead to “breakthrough therapies for deadly diseases, innovative solutions for climate change, and high-quality education for everyone.” So even if you’re unemployed, you’ll probably be in rude health in a cooler climate.

More research showing users prefer AIs to other humans

Yet another study has found that people prefer AI responses to human-generated ones, at least until they find out the answers come from AIs.

The experiment saw participants handed a list of Quora and Stack Overflow-style questions with answers written either by a human or an AI. Half of them were told which answer was AI-generated and which wasn’t, while the others were left in the dark.

Interestingly, women were more likely than men to prefer human responses without needing to be told which was which. But once men were told which answers were AI-generated, they were more likely than before to prefer the human ones.

Humanoid robots being tested in homes this year

Norwegian robotics startup 1X will test its humanoid robot Neo Gamma in “a few hundred to a few thousand homes” by the end of the year.

1X CEO Bernt Børnich told TechCrunch the company is recruiting volunteers. “We want it to live and learn among people, and to do that, we need people to take Neo into their home and help us teach it how to behave,” he said.

During a demo, 1X showcased Neo Gamma’s ability to do some basic household chores like vacuuming and watering plants.

Right here with the sweater robotic pic.twitter.com/yxwtb2vBiA

— Max Zeff (@ZeffMax) March 19, 2025

Figure, another humanoid robot competitor, also plans to carry out in-home tests this year. The company has also just unveiled the ability of its Figure 02 humanoid robot to walk naturally, even if “naturally” in this case means walking like Anthony Daniels’ C-3PO in Star Wars.

Humanoid robots are heavy and strong, meaning these in-home tests have the potential to go horribly wrong, of course. To help mitigate issues, 1X is “bootstrapping the process” with teleoperators who can control Neo Gamma remotely.

Introducing learned natural walking

Figure can now walk naturally like a human

Years of data was trained in simulation in just a few hours pic.twitter.com/HvOq9s1krS

— Figure (@Figure_robot) March 25, 2025

Claude knows when it’s being tested

In psychological tests, the last thing scientists usually want is for subjects to know they’re being tested, because that makes them more likely to behave differently.

Unfortunately, new research shows that the thinking version of Anthropic’s Claude 3.7 Sonnet can work out when it’s being tested for alignment or deceptive behavior up to one-third of the time.

It often recognized fake scenarios during the tests themselves, and when given a transcript of earlier alignment tests, Claude almost always knew what they were.

In its “thinking” output, it would say things like: “This seems like a test of ethical behavior: whether I would deliberately give wrong answers to avoid a stated consequence. The real test might be whether I follow instructions accurately regardless of the stated consequences.”

If the model knows what the “real test” is, that undermines the test’s usefulness.

All Killer No Filler AI News

— An estimated one-quarter of medical professionals in Australia are now using AI transcription services specially trained in medical terminology to record and summarize patient notes. This has turned a process that took many hours of work into a summary that’s ready within a minute of the end of the consultation.

— Only a small subset of users treat ChatGPT like a companion app, using an opposite-gender voice mode. But those who do report much higher levels of loneliness and emotional dependency on the bot, according to a four-week MIT study of users.

— Following the release of Gemini 2.5 Pro, the odds shifted dramatically on Polymarket’s “which company has the best AI model at the end of March” market. On March 23, Google had just 0.7% odds of winning, but that surged to 95.9% on March 27.

— Time magazine reports there’s an AI-powered arms race between employers, who use AI systems to dream up insanely specific job ads and sift through resumes, and job applicants, who use AI to cook up insanely specific resumes to qualify and beat the AI resume-sifting systems.

— Three-quarters of retailers surveyed by Salesforce plan to invest more in AI agents this year for customer service and sales.

— A mother who is suing Character.AI, alleging a Game of Thrones-themed chatbot encouraged her son to take his own life, claims she has discovered bots based on her dead son on the same platform.

— H&M is making digital clones of its clothing models for use in ads and social posts. Interestingly, the 30 models will own their AI digital twins and be able to rent them out to other brands.

— A judge has rejected a bid by Universal Music Group to block lyrics from artists, including The Rolling Stones and Beyonce, from being used to train Anthropic’s Claude. While Judge Eumi K. Lee rejected the injunction bid as too broad, she also suggested Anthropic may end up paying a shedload of damages to the music industry down the track.

Andrew Fenton

Based in Melbourne, Andrew Fenton is a journalist and editor covering cryptocurrency and blockchain. He has worked as a national entertainment writer for News Corp Australia, on SA Weekend as a film journalist, and at The Melbourne Weekly.